Cardano ITN staking pool FAQ

Contents

- 1 What's the best practice for maintaining a running pool?

- 2 Is there a way to improve bootstrapping?

- 3 My node created a block, why's it not in the chain?

- 4 Why doesn't my node create some of its blocks?

- 5 How many peer connections are actually established?

- 6 Why's my node having so many "height battles"?

- 7 Do you miss out on the leader elections if your node is offline at the start of the epoch?

- 8 Are all storage directories the same?

- 9 How can I send funds from my pool's rewards account?

- 10 Where can I get the data for block zero?

- 11 See also

What's the best practice for maintaining a running pool?

Pools currently require a lot of babysitting. This should improve a lot by the time we get to the mainnet, but for now the node needs a lot of attention. Most operators have their own custom scripts to take as much of the headache out of it as possible. We have a script called sentinel.pl to take care of things for us; it's customised for our own needs, but there are links to other scripts on that page. It checks whether the node is stuck and restarts it if so, restarts it from the previous day's storage directory if it can't get past the bootstrap phase after a few attempts, sends a report at the end of each epoch, and more.
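As a rough illustration of the "is the node stuck?" check such scripts perform, here is a minimal sketch (it is not the sentinel.pl code). It assumes that `jcli rest v0 node stats get` prints YAML-style lines including a `lastBlockDate` field; the function names, the five-minute interval and the restart step are placeholders for illustration only.

```shell
#!/bin/sh
# Sketch of a "stuck node" check; function names are illustrative only.

# Print the value of one top-level field from YAML-style node stats on stdin.
# Assumes lines of the form `key: value` (e.g. `lastBlockDate: "39.41535"`).
stats_field() {
    awk -v key="$1" -F': ' '$1 == key { gsub(/"/, "", $2); print $2; exit }'
}

# Succeed (exit 0) when two consecutive tip samples are non-empty and equal,
# i.e. the tip has not moved between checks.
tip_unchanged() {
    [ -n "$1" ] && [ "$1" = "$2" ]
}

# Example loop, commented out so the file can be sourced safely:
# prev=""
# while sleep 300; do
#     cur=$(./jcli rest v0 node stats get -h http://localhost:3100/api | stats_field lastBlockDate)
#     tip_unchanged "$prev" "$cur" && echo "Stuck on $cur, restart the node here"
#     prev=$cur
# done
```

Comparing two samples a few minutes apart avoids having to know the current slot; an empty sample (node not answering) is deliberately not treated as "unchanged" so it can be handled separately.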

Is there a way to improve bootstrapping?

The bootstrap phase is the main hold-up (unless you also need to download the genesis block and start from scratch). It's best to restart the node if the bootstrapping phase doesn't complete in, say, ten minutes. Choosing a good set of peers to boot from is the main obstacle: adapools.org/peers has a list of the currently responsive IOHK peers, but these are usually all pretty bogged down. Michael Fazio maintains another good list here which tends to work better, and there's another list here which I haven't tried.
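The ten-minute rule can be expressed as a small helper for a watchdog script. This is only a sketch: it assumes the script records the time it started the node and reads the node's `state` (anything other than `Running` is treated as still bootstrapping); the function name and argument order are made up for illustration.

```shell
#!/bin/sh
# Sketch: decide whether a node has spent too long bootstrapping.
# The ten-minute default comes from the text; everything else is illustrative.

# bootstrap_overdue STATE STARTED_AT NOW [LIMIT_SECONDS]
# Succeeds when STATE is not "Running" and more than LIMIT_SECONDS
# (default 600) have passed since STARTED_AT. Times are Unix timestamps.
bootstrap_overdue() {
    [ "$1" != "Running" ] && [ $(( $3 - $2 )) -gt "${4:-600}" ]
}
```

A watchdog could call it as `bootstrap_overdue "$state" "$started" "$(date +%s)" && restart_node`, where `restart_node` stands for whatever restart command you use.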

If your node was pretty close to the tip when it was stopped, and it's only been stopped for a minute or so, then you can actually start it with no entries in the trusted peers list and you'll begin getting connections without the need for going through the bootstrap phase at all (you can also skip bootstrapping by setting max_bootstrap_attempts to 0). I have a --quick option in my cardano-update-conf.pl script that removes all trusted peers for this purpose.

Make sure your ulimit is 4096 or higher:

ulimit -n 4096

You can try forcing bootstrapping to jump to the next peer with:

sudo netstat -alnp|grep jormungandr

sudo ss -K dst Peer_IP

My node created a block, why's it not in the chain?

Competitive forks: Sometimes pools create blocks that exist for a short time and then disappear. This is a natural aspect of the network because slots often have more than one leader, which results in a forking situation called a "slot battle". Competitive forks can also result from different nodes assigned to very close slots both creating their block from the same parent block, which is called a "height battle", or from creating a block on a fork that the majority of the network is not on. Slot battles are resolved immediately, but in the case of a height battle, your pool's block may appear to be in the chain and then suddenly disappear some minutes later, as it can take a few blocks to resolve. It's natural to have some height battles, but regular height battles point to a lagging or badly connected node, see below.

Being better connected, producing blocks quickly and having accurate system time all help your chances in competitive forks. Setting up chrony properly so you have very accurate time will help your chances a lot, and having a higher max_connections setting can help too - see our sample config for where to add this setting.
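To check how accurate the system time actually is, the offset reported by chrony can be read from a script. The sketch below assumes `chronyc tracking` prints a line of the form `System time : 0.000001501 seconds slow of NTP time`; the function names and the 0.05 second threshold are illustrative choices, not recommendations from this page.

```shell
#!/bin/sh
# Sketch: read the clock offset from `chronyc tracking` output on stdin.

# Print the absolute offset in seconds taken from the "System time" line.
chrony_offset() {
    awk -F': *' '/^System time/ { split($2, part, " "); print part[1]; exit }'
}

# time_ok [MAX_SECONDS]: succeed when the offset read from stdin is at most
# MAX_SECONDS (default 0.05, an arbitrary example value). Fails when no
# "System time" line is found.
time_ok() {
    max=${1:-0.05}
    chrony_offset | awk -v max="$max" '{ ok = ($1 + 0 <= max + 0) } END { exit (ok ? 0 : 1) }'
}
```

Typical use would be `chronyc tracking | time_ok || echo "clock offset too large"`.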

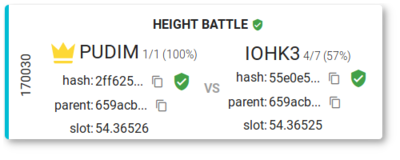

PoolTool has a great new feature showing the winners and losers of all the recent "competitive forks" at pooltool.io/competitive. Here on the left is an example of our node PUDIM winning a "height battle", on the right is what happens when a few nodes are involved and it's unclear what kind of battle it is :-)

Adversarial forks: There is also a problem in the network where some nodes create many forks on purpose so that other nodes will more often get themselves onto the wrong forks. These are called adversarial forks, and give the creator an advantage. You can see a list of adversarial forks on the pooltool.io/health page; a few pools such as the LION pools are renowned for creating huge numbers of adversarial forks and should be shamed and boycotted for this antisocial behaviour. Most of the other pools creating adversarial forks are mistakes by operators who run clusters and mess up their leader selection process.

Low CPU resource: If the CPU is maxed-out then that will also cause blocks to be produced too slowly. Reducing the max_connections setting reduces CPU usage, but there also seem to be issues with some choices of VPS that cause high CPU usage with Jormungandr (especially since the 0.8.6 release); most likely differences in the IO backend are responsible for this. Changing from a Linode to a Digital Ocean "Droplet" made a huge difference for us.

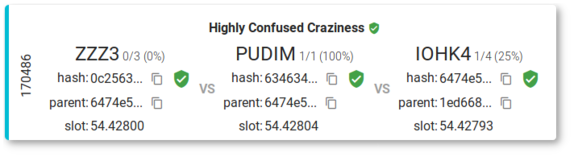

On the left is the CPU usage of the Linode, running almost continuously at 100% even with the max_connections setting at the extremely conservative default value of 256. The sharp drops you see are when the node gets stuck and is restarted by the sentinel, which happens very frequently. The Linode costs $20/mo, has 4GB of RAM and two Xeon E5-2697v4 CPUs running at 2.3GHz with a cache size of 16MB.

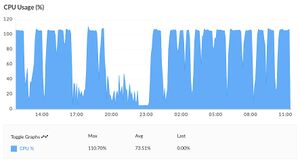

On the right is the CPU graph for the new Droplet, also $20/mo with 4GB of RAM and two CPUs at the same speed of 2.3GHz, but slightly more powerful Xeon Gold 6140 CPUs with 24MB of cache. As you can see the difference is startling and cannot be attributed only to the slightly better CPU. After 12 hours of operation it settles down to around 20% average CPU usage even with double the max_connections setting compared to the Linode. The glitches you can see are from restarts when we changed the max_connections setting from 256 to 1024 which resulted in some peaks of around 90% (too short to appear on the graph), so we then settled on 512.

Issue #1599 was recently raised about the problem of CPU usage creeping up for many people, causing their nodes to get out of sync.

Why doesn't my node create some of its blocks?

Sometimes you see your slot come and go and your pool didn't even attempt to create a block at all, and nobody else did either! In this case it's likely because your server's system time is off, so check your chrony configuration. If this is the case you'll see the following errors in your log at the times you should have been creating a block:

Eek... Too late, we missed an event schedule, system time might be off?

This will be associated with a rejected block that looks like this in the leaders log:

scheduled_at_date: "39.41535"

scheduled_at_time: "2020-01-22T18:18:07+00:00"

status:

Rejected:

reason: Missed the deadline to compute the schedule

How many peer connections are actually established?

Setting max_connections is one thing, but to know how many are actually established is another.
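For use inside a monitoring script, the same idea can be wrapped in two small functions. This is a sketch under stated assumptions: it expects the usual `netstat -tn` column layout with the connection state in the sixth column, the function names are invented, and the default minimum of 500 is the rule of thumb given further down this page.

```shell
#!/bin/sh
# Sketch: count established TCP connections and compare against a minimum.

# Count ESTABLISHED connections in `netstat -tn` output read from stdin.
# Matches on the state column only, so header lines are ignored.
established_count() {
    awk '$6 == "ESTABLISHED" { n++ } END { print n + 0 }'
}

# well_connected COUNT [MINIMUM]: succeed when COUNT is at least MINIMUM
# (default 500).
well_connected() {
    [ "$1" -ge "${2:-500}" ]
}
```

For example, `well_connected "$(netstat -tn | established_count)" || echo "poorly connected"`. Note that this counts every TCP connection on the machine, not only those belonging to the node. For a quick interactive check, the one-liners are: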

netstat -an | grep ESTABLISHED | wc -l

./jcli rest v0 network stats get -h http://localhost:3100/api | grep addr | wc -l

netstat -tn | tail -n +3 | awk "{ print \$6 }" | sort | uniq -c | sort -n

Why's my node having so many "height battles"?

"Slot battles" are a natural part of the protocol and occur when more than one leader is assigned to the same slot; the battle is inevitable and someone will come out the winner. But height battles (at least being on the losing side of them) are a sign that your node is lagging behind or is not well connected; in a healthy network height battles should be very rare. Check whether you're up to tip and how many established connections you have. Increasing max_connections will allow you to be more widely connected, but will also consume more resources. See the section above about checking your actual number of established connections; ideally you should have at least 500 to avoid regular height battles.

1 SYN_RECV

32 SYN_SENT

82 TIME_WAIT

714 ESTABLISHED

Lowering your max_unreachable_nodes_to_connect_per_event to a very low value like 5 or even 2 is a good idea if you're suffering from many height battles too. This is the number of connections your node is permitted to make with nodes that are not set up to receive incoming connections, which are mainly wallets. Your node may be flooded with wallet connections that are preventing it from being well connected to the network of pools.

Also, it's not only about having a high number of peer connections, but also about having a high-quality selection of peers. One problem with the mainline releases so far is that they allow unresponsive peers to stay in the pool and continuously gossip them around the network. Michael Fazio's 0.8.9-stable fork has attempted to resolve this problem since the alpha5 release by quarantining bad peers for longer and longer every time the same peer is retried and fails. This results in a very high quarantine count and a better and better selection of quality peers as time goes on, and can really help with height battles. It needs a few hours of uptime before the peer selection is really good though.

"peerAvailableCnt": 10002,

"peerQuarantinedCnt": 53917,

"peerTotalCnt": 64505,

"peerUnreachableCnt": 586,

"state": "Running",

"txRecvCnt": 609,

"uptime": 23229,

Do you miss out on the leader elections if your node is offline at the start of the epoch?

No. The elections are a deterministic pseudo-random process which doesn't require communication between nodes to organise, so it's possible for a node to be disconnected at the start of the epoch and still know its leader schedule when it comes back online after the epoch has started. Note that although the leader schedules (including who would lead a slot if the primary choice was a no-show, or the next choice was a no-show as well, etc.) are deterministic, the process is based on a seed which is derived from the hashes of the blocks of the previous epoch, and is therefore impossible to know before that epoch is complete.
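Since the schedule is expressed as epoch.slot dates, it can be handy to convert such a date into wall-clock time when checking what the node was doing at a given slot. The sketch below uses chain parameters that are assumptions not stated on this page (ITN block zero starting at Unix time 1576264417, 43200 slots per epoch, 2 seconds per slot); the function name is illustrative.

```shell
#!/bin/sh
# Sketch: convert an ITN "epoch.slot" date to a Unix timestamp.
# Chain parameters below are assumptions, not taken from this page.
GENESIS_TIME=1576264417   # block zero start time, 2019-12-13T19:13:37Z
SLOTS_PER_EPOCH=43200
SLOT_SECONDS=2

# slot_to_unix EPOCH.SLOT: print the Unix time at which that slot starts.
slot_to_unix() {
    epoch=${1%%.*}   # part before the dot
    slot=${1#*.}     # part after the dot
    echo $(( $GENESIS_TIME + ($epoch * $SLOTS_PER_EPOCH + $slot) * $SLOT_SECONDS ))
}
```

With these parameters `slot_to_unix 39.41535` gives 1579717087, which is 2020-01-22T18:18:07 UTC and so agrees with the scheduled_at_date and scheduled_at_time pair in the rejected-block example earlier on this page. With GNU date the result can be made readable using `date -u -d "@$(slot_to_unix 39.41535)"`.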

As evidence that a node doesn't need to be present at the start of an epoch in order to participate in block creation within it, you can see below a terrible start to epoch 27 by my node, where it couldn't get out of the bootstrapping phase for over an hour, during which time the transition from epoch 26 to 27 took place. Yet the leader schedule is still populated, and blocks in that epoch, including the first one at 27.4386, were successfully created.

[1578596306/180] Epoch:26 Slot:42744 Block:84910-12 Hash:14c232d74bbfcb4b86221bde4ebf02cad5ad78b039460030019578fd873d16e3 Tax:98595022 Stake:27038332991274

[1578596711/405] Stuck on 26.42744, restarting node...

[1578596716/000] Node is not running, starting now...

[1578596723/403] Bootstrapping

. . .

[1578602533/353] Bootstrapping

[1578602891/000] Epoch:27 Slot:2663 Block:85109-6 Hash:1cfeadb6c4ba0afcbd04173fb35862c53a2f1ddfa0b8846ddd2d7d2291274160 Tax:118954432 Stake:27046455059377

[1578602941/050] Epoch:27 Slot:2807 Block:85115-0 Hash:d747df3c0becbd8efb023416d723d6190d7d45ae295bdbc6ce0fa8bd94ad34f1 Tax:118954432 Stake:27046455059377

[1578603001/060] Epoch:27 Slot:2865 Block:85116-0 Hash:7fbb072402c7dbe126ed53987fc78b2657cbb4568be6f9ce2ffc4fb143159d1a Tax:118954432 Stake:27046455059377

./jcli rest v0 leaders logs get --host "http://127.0.0.1:3100/api" | grep date

scheduled_at_date: "27.4386"

scheduled_at_date: "27.8080"

scheduled_at_date: "27.34182"

scheduled_at_date: "27.42107"

scheduled_at_date: "27.35573"

scheduled_at_date: "27.15571"

scheduled_at_date: "27.18500"

scheduled_at_date: "27.19565"

scheduled_at_date: "27.33988"

scheduled_at_date: "27.29712"

Are all storage directories the same?

All the storage directories are compatible, so if your node is stuck, can't bootstrap, or can't retrieve the genesis block, you can use someone else's storage directory, or even use your own one from your local Daedalus node. The storage directory has up to three files in it, but only the single .sqlite file is required to back up a node's storage.

But even though different storages are all compatible, they're not identical, because the storage contains all the blocks that the node ever received or created, even those that were removed from the chain after being re-organised due to forks.

Here's some evidence to show that it works like this: someone in the Telegram channel asked if anyone else could see the block he created, I checked the hash which started with 30c519 and my pool node recognised it, yet it didn't exist in some other nodes including my own local Daedalus node. I extracted his pool ID from the block data and then listed all the blocks created in that epoch by the pool according to my pool node, and sure enough that 30c519 did not show up. Here's what I did:

$ block=`./jcli rest v0 block 30c519c55c12e6ed55639bde6d3b3e97e947b7f40aeeb15e7d5ae4b3ac164450 get --host "http://127.0.0.1:3100/api"`

$ tools/crypto/cardano-pool-blocks-list.pl --pool=`echo ${block:168:64}` --explorer=http://localhost:3100/explorer/graphql --epoch=49

Total blocks created: 844

1 Date:49.10247 Height:151324 Hash:3c2dd31f5267c513f103a834c65e07d0a92876d800ce31b285ee1a8841a2b57f

2 Date:49.9987 Height:151310 Hash:c5eaa1519376fef0a408c1c1039b70a7c803defe9e17a082564fcc272b4b5219

3 Date:49.9010 Height:151241 Hash:6e8c1718cf794c26a4075a93c6a5568b3910b5806f09f7e7fd74fb86a74adf3f

4 Date:49.4343 Height:150860 Hash:20991eac6975e6578d72558b426f4febef1ab2a5fb7a2fb5e893364cbcc7723e

5 Date:49.4113 Height:150838 Hash:2115cf40c80605048d12f9fc2382f3af381d0a7bea9015e1e216a470bc7b9742

6 Date:49.2184 Height:150673 Hash:55b115c90f6067d217cfacda5192bc2c21f9d33753996c17252a06c8b6804674

7 Date:49.1551 Height:150604 Hash:5635722da0968e2d74991ac478dbe16fbe46fee7ddb487a82159ede44c13af9a

8 Date:49.1512 Height:150598 Hash:af825b898c8436b793c0a29b9e2937452d256615bfb441c910ca112e82ee7db0

9 Date:49.1231 Height:150580 Hash:4171ae1ba5a7aef433d4c4f61a63e31a95a6336769255795b259eb4e917ce922

10 Date:49.571 Height:150535 Hash:0fcb0937e0223221d161bb2fb8275305db157dd6c21e636af7d938b75530246c

As can be seen, when I use my cardano-pool-blocks-list.pl script to ask my node to list all the blocks created by the pool that created the 30c519 block, that block does not show up. This is interesting as it shows that a node continues to have knowledge of hashes even after they are discarded from the chain when a fork is resolved. What's more interesting is that even after a node restart, 30c519 is still queryable, which means that they're held in the storage. This means that everyone's storage is unique and holds information about the forks it's experienced, and also that storage can be slimmed down a lot.

Using SQLite3 we can access the blocks.sqlite file from a storage directory to see how many orphaned blocks there are and estimate how much space they take up, and we could delete them too if we wanted to. When I ran this on a block table with 238,104 blocks in it, I found that 16,196 of them were redundant orphan blocks. The total file is 294M in size, so this equates to 6.8% of the storage, or about 20M.

sqlite3 blocks.sqlite "select count(hash) from BlockInfo where hash not in ( select parent from BlockInfo )"

How can I send funds from my pool's rewards account?

The rewards are sent to the pledge address you funded your pool with, but they may not show up in the Shelley explorer due to a bug which means it can only account for UTXO transactions, whereas the rewards update the balance directly without a UTXO. This also explains why some people have been able to see negative balances in Shelley. You can use the IOHK send-money.sh script to send money from your pool's address. It takes four parameters: the recipient address, the amount in lovelace, your pool's REST port and your account's private key, which will be in the owner.prv file if you used the set-up procedure described on this site. You'll probably want to check your balance first:

./jcli rest v0 account get `cat owner.addr` -h http://localhost:3100/api

./send-money.sh addr1... 1000000 3100 `cat owner.prv`

- Note1: this script is designed to send funds to other account type addresses and will display the balance of the recipient account at the end. It works fine sending to normal balance addresses too such as those in your Daedalus wallet, but the final balance check will throw an error in this case.

- Note2: funds from rewards addresses can take some time to update; it might be that they don't show until the next epoch, but I'm not sure about these details yet. I have confirmed that rewards can indeed be sent to a Daedalus account.
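Because the amount is given in lovelace (1 ada is 1,000,000 lovelace), it's easy to be out by a few zeros when typing it by hand. A small helper like the following can do the conversion; the function name is made up for illustration and is not part of send-money.sh.

```shell
#!/bin/sh
# Sketch: convert a decimal ada amount into whole lovelace.

# ada_to_lovelace AMOUNT: print AMOUNT * 1,000,000 rounded to the nearest
# lovelace, so that floating point noise (e.g. 0.29 ada) doesn't truncate.
ada_to_lovelace() {
    awk -v ada="$1" 'BEGIN { printf "%.0f\n", ada * 1000000 }'
}
```

It can then be used in place of the literal amount, e.g. `./send-money.sh addr1... "$(ada_to_lovelace 1.5)" 3100 "$(cat owner.prv)"`.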

Where can I get the data for block zero?

Since Jormungandr v0.8.11 there is a new http_fetch_block0_service configuration option that allows a new node (or one that needs to rebuild its storage) to retrieve the 27MB genesis block content over HTTP instead of having to find a trusted peer that can deliver it over P2P. IOHK have block zero available here, but this may become very sluggish if many peers are hitting it. You should download it now so you have the data available if you need it any time, but there are also three easy ways to generate the data yourself from a functioning Jormungandr.

First you can get it from jcli as follows:

jcli rest v0 block "8e4d2a343f3dcf9330ad9035b3e8d168e6728904262f2c434a4f8f934ec7b676" get --host "http://localhost:3100/api" | xxd -r -p > block0.bin

You need to pipe it through the xxd command because the jcli output is hex encoded binary, but the file needs to be raw binary. If you query the REST interface directly you don't need to pipe it through xxd, e.g.

curl "http://localhost:3100/api/v0/block/8e4d2a343f3dcf9330ad9035b3e8d168e6728904262f2c434a4f8f934ec7b676" > block0.bin

If you don't have a running node, but you do have access to a blocks.sqlite file, you can extract the block zero binary with an SQLite query on the file (you need to install the sqlite3 package to do this):

sqlite3 blocks.sqlite "select writefile('block0.bin', block) from Blocks where lower(hex(hash)) = '8e4d2a343f3dcf9330ad9035b3e8d168e6728904262f2c434a4f8f934ec7b676'"

Finally, it's important to know how to verify that any file you generate or download has the correct hash that matches the genesis hash, which you can do with jcli as follows:

jcli genesis hash --input block0.bin

See also

- Set up a Cardano staking pool - setting up on the mainnet

- Our Cardano Sentinel

- Cardano