Difference between revisions of "Set up a new bot job"

Latest revision as of 18:11, 22 May 2015

Set up a new bot job (Organic Design procedure)

First ensure that you have a running wiki daemon (wikid) local to the server and wiki which will be used to administer the jobs. Set up the EventPipe extension and ensure that the daemon is receiving the events, either by checking /var/www/tools/wikid.log or by configuring the bot to publish its notifications to an IRC channel. For more details on these aspects, see the install a new server and configure IRC procedures. Once the robot framework and the necessary extensions are installed, you're ready to set up a new job type as described below.


Job functions

First you will need to create some functions in Perl which will do the actual work. These can reside anywhere and are made available to the daemon by appending a require statement to /var/www/tools/wikid.conf.
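As an illustration, assume the Foo job's functions were saved in a file called Foo.pl next to the daemon. That file name and location are only assumptions. The appended line might then look like the one below. Perl's require fails unless the loaded file returns a true value, so the job script itself should end with a 1; statement.

```perl
# Hypothetical job file location: use wherever the Foo job functions actually live
require '/var/www/tools/Foo.pl';
```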

There are three special functions which the daemon may call directly:

- one when an instance of the job type is first started,
- one which is called iteratively until the job is complete,
- one after the job has completed, so that any loose ends can be tied up, such as closing database connections or file handles.

These three functions are init, main and stop. Their names are combined with the name of the job type when defining them, for example initFoo. Only main is mandatory; init and stop may not be necessary for some kinds of jobs.
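To make the calling sequence concrete, here is a small self-contained model of it. The runJob driver and the stand-in workStopJob are hypothetical simplifications written for this page, not the code of wikid.pl. The real daemon also persists the job hash, honours the paused key and interleaves several jobs.

```perl
use strict;
use warnings;

# Hypothetical model of the job life cycle, not the real wikid.pl scheduler
my @log;
sub initFoo { $$::job{'length'} = 3; push @log, 'init'; 1 }
sub mainFoo { push @log, 'main ' . $$::job{'wptr'}; 1 }
sub stopFoo { push @log, 'stop'; 1 }

# Stand-in for the daemon's workStopJob: just mark the job as finished
sub workStopJob { $$::job{'finish'} = time; }

sub runJob {
    my ( $type ) = @_;
    $::job = { type => $type, wptr => 0 };
    no strict 'refs';    # the special functions are looked up by name
    &{"init$type"}() if defined &{"init$type"};
    until ( $$::job{'finish'} ) {
        &{"main$type"}();
        $$::job{'wptr'}++;
        # Stop automatically once wptr reaches the length set by init
        workStopJob() if defined $$::job{'length'} && $$::job{'wptr'} >= $$::job{'length'};
    }
    &{"stop$type"}() if defined &{"stop$type"};
}

runJob( 'Foo' );
print join( ', ', @log ), "\n";    # prints "init, main 0, main 1, main 2, stop"
```

Because init and stop are only called if they are defined, leaving either of them out is harmless, which mirrors the rule that only main is mandatory. In this model, a job without a length never finishes unless its main function calls workStopJob itself.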

Internal functions

In addition to the three special job functions, a job script may also define any number of internal helper functions to be called by the special functions when needed. These should follow the naming convention jobFooBar (the "Bar" function used by functions in the "Foo" job) to avoid naming conflicts with other jobs or components.
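A minimal sketch of the convention follows. It uses a made-up helper called jobFooSplitRow and a made-up 'line' key in the job hash.

```perl
use strict;
use warnings;

# Internal helper of the "Foo" job: the jobFoo prefix keeps it from clashing
# with helpers of other job scripts. jobFooSplitRow and the 'line' key are
# made-up names for this example.
sub jobFooSplitRow {
    my ( $line ) = @_;
    chomp $line;
    return split /,/, $line, -1;    # -1 keeps empty trailing fields
}

# A special job function calling the helper
sub mainFoo {
    my @fields = jobFooSplitRow( $$::job{'line'} );
    $$::job{'status'} = 'Row has ' . scalar( @fields ) . ' fields';
    1;
}

$::job = { line => "Bob,G.,McFoo\n" };
mainFoo();
my $status = $$::job{'status'};
print "$status\n";    # prints "Row has 3 fields"
```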

The global job hash

When the daemon calls any of the three special job functions, it makes all of the data concerning the job available in the hash referred to by the $::job hashref. The job functions can store any data they like in this hash, and it will remain available even if the daemon or server needs to be restarted. Some of the keys in the job hash are used by the job execution framework as follows (the $$ is needed because $::job is a reference to a hash rather than an actual hash).

- $$::job{'id'} the daemon will set this to a GUID when a new job is started

- $$::job{'type'} the daemon sets this when the job is started

- $$::job{'wiki'} when the job is started, the daemon sets this to the $::script value if set, or to the $::wiki value if not

- $$::job{'user'} the daemon sets this to the wiki user through which it will interact with the wiki

- $$::job{'start'} the daemon sets this to the UNIX time stamp when the job is started

- $$::job{'paused'} a job's main function will not be called while its paused value is not empty

- $$::job{'finish'} the daemon sets this to the UNIX time stamp when the job finishes

- $$::job{'length'} the job's init function should calculate and set this if the number of iterations can be determined

- $$::job{'status'} this can be set by the job functions and is displayed in the WikidAdmin special page

- $$::job{'revisions'} if the job changes wiki articles, this value should track the number of changes made

- $$::job{'errors'} any errors occurring during execution should be logged in this value

- $$::job{'wptr'} the daemon sets this to zero when the job starts and increments it on every execution of the job's main function. The job is automatically stopped when this value reaches the length value.
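The difference between the two ways of reaching these keys can be seen in a few lines of plain Perl. The job values below are made up, and $::job is filled in by hand instead of by the daemon.

```perl
use strict;
use warnings;

# Stand-in for the daemon: make $::job refer to a job hash (values are made up)
$::job = { type => 'Foo', wptr => 0, length => 10 };

# $$::job{...} and $::job->{...} are two spellings of the same element
$$::job{'status'} = 'Processing row 1 of 10';
my $status = $::job->{'status'};
print "$status\n";    # prints "Processing row 1 of 10"

# The whole hash is %$::job, e.g. to list the keys currently in use
print join( ', ', sort keys %$::job ), "\n";    # prints "length, status, type, wptr"
```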

Example init function

A typical init function for a job would be something like the following example. It reads in the file from the location provided in the job's parameters, extracts the useful information from the file ready for processing, and stops the job if there are any problems with that. Note that for very large files, it would be better to read the lines of the input file one at a time in the main function instead of pre-processing the whole file like this.

sub initFoo {
    # Get the source file from the args supplied to the job (e.g. by the special page)
    my $file = $$::job{'file'};
    my $errors = 0;

    # The data to import will be stored in this list, one array reference per row
    $$::job{'data'} = [];

    # Loop through the lines in the source file
    if ( open INPUT, '<', $file ) {
        while ( <INPUT> ) {
            # If this line of the file conforms to the pattern, store a copy of its three fields as one row
            push @{$$::job{'data'}}, [ $1, $2, $3 ] if /^(.+?),(.+?),(.+?)$/;
        }
        close INPUT;
    } else {
        workLogError( "Could not open input file '$file'!" );
        $errors++;
    }

    # Stop job and log errors, or set the job length ready to start
    if ( $errors ) {
        workLogError( "$errors errors were encountered during preprocessing, job aborted" );
        workStopJob();
    } else {
        $$::job{'length'} = scalar @{$$::job{'data'}};
    }

    1;
}

Example main function

sub mainFoo {
    # Get the current iteration in the job and the total iterations and update the status
    my $ptr = $$::job{'wptr'};
    my $len = $$::job{'length'};
    $$::job{'status'} = "Processing row " . ( $ptr + 1 ) . " of $len";

    # Read in the row that should be processed during this iteration
    # (as determined by the job's wptr value)
    my $row = $$::job{'data'}[$ptr];

    # Use the first item in the row as the article title and the rest as template parameters
    # (copied out rather than shifted, so the stored row stays intact)
    my ( $title, @fields ) = @$row;

    # Format the data into a wikitext template call
    my $text = "{"."{Foo|" . join( "|", @fields ) . "}"."}";

    # Update/create the article and increment the job's revisions value if content changed
    my $file = $$::job{'file'};
    my $cur = wikiRawPage( $::wiki, $title );
    $$::job{'revisions'}++ if wikiEdit( $::wiki, $title, $text, "Content imported from $file" ) && $cur ne $text;

    1;
}

Example stop function

Stop functions are only required if the job holds persistent database connections or file handles. It might look something like the following example.

sub stopFoo {
    # Close the handle opened by the job's other functions, if there is one
    $$::job{'handle'}->close() if $$::job{'handle'};
    1;
}

Creating jobs to be started from the special page

The usual way to run a job is manually from the WikidAdmin special page. If its functions have been correctly defined and included by the daemon, the job type will appear in the list on the special page. Simply select the job type and click the start button.

Supplying parameters to jobs

Some jobs require specific parameters to be set before they can run. For example, import jobs require a file to be uploaded or a previously uploaded file to be selected. To create a job that requires such parameters to be filled in first, two additional functions must be written. These functions are MediaWiki hooks written in PHP, and the hook names depend on the job name. For example, the following hooks may be added to the LocalSettings.php file of the wiki to provide a form for our previous "Foo" job:

$wgHooks['WikidAdminTypeFormRender_Foo'][] = "onWikidAdminTypeFormRender_Foo";
$wgHooks['WikidAdminTypeFormProcess_Foo'][] = "onWikidAdminTypeFormProcess_Foo";

Form rendering hook

Here's a typical function definition for the form rendering hook. All that's required here is to set the passed $html variable to the HTML content of the form. In this example we're providing an upload button and a list of previously uploaded files, which are stored in the internal location specified in $wgFooDataDir.

function onWikidAdminTypeFormRender_Foo( &$html ) {
    global $wgFooDataDir;
    $html = 'Use an existing file: <select name="file"><option />';
    foreach ( glob( "$wgFooDataDir/*" ) as $file ) {
        # Escape the file name so it can't break the surrounding HTML
        $html .= '<option>' . htmlspecialchars( basename( $file ) ) . '</option>';
    }
    $html .= '</select><br />Or upload a new file:<br /><input name="upload" type="file" />';
    return true;
}

Form processing hook

And here's the corresponding processing hook function. It saves a file into the internal data location ($wgFooDataDir) if one has been uploaded with the form, and starts a job if a valid file has been specified from the list or uploaded. The $job array is empty on entry and should be filled with data to be made available to the executing job (via the global $::job hash). The $start variable specifies whether or not the job should run, which allows the processing function to abort the job if there's a problem with the supplied data.

function onWikidAdminTypeFormProcess_Foo( &$job, &$start ) {
    global $wgRequest, $wgSiteNotice, $wgFooDataDir;

    # Handle upload if one specified, otherwise use existing one (if specified)
    $target = isset( $_FILES['upload'] ) ? basename( $_FILES['upload']['name'] ) : '';
    if ( $target ) {
        $job['file'] = "$wgFooDataDir/$target";
        if ( file_exists( $job['file'] ) ) unlink( $job['file'] );
        if ( move_uploaded_file( $_FILES['upload']['tmp_name'], $job['file'] ) ) {
            $wgSiteNotice = "<div class='successbox'>File \"$target\" uploaded successfully</div>";
        } else {
            $wgSiteNotice = "<div class='errorbox'>File \"$target\" was not uploaded for some reason :-(</div>";
        }
    } else {
        # basename() stops a crafted value from pointing outside $wgFooDataDir
        $job['file'] = $wgFooDataDir . '/' . basename( $wgRequest->getText( 'file' ) );
    }

    # Start the job if a valid file was specified, error if not
    $start = is_file( $job['file'] );
    if ( !$start ) $wgSiteNotice = "<div class='errorbox'>No valid file specified, job not started!</div>";

    return true;
}

Creating event-driven jobs

Jobs may be started manually from the WikidAdmin special page, or by various events in the wiki or the Perl environment. To handle wiki events, simply define an event handler function named after the wiki hook; this will override any default handler supplied by the daemon. In the following example code, the "Foo" job is started when an article in the "Bar" namespace is edited.

sub onRevisionInsertComplete {
    my %revision = %{$$::data{'args'}[0]};
    my $page = $revision{'mPage'};
    my $user = $revision{'mUserText'};
    my $title = $$::data{'REQUEST'}{'title'};
    workStartJob( "Foo" ) if $page and $user and $title =~ /^Bar:/;
}

File access

File access is made slightly complicated by the need to work without keeping the files open, since the operation must be able to continue after a program or machine reboot. Usually the files being accessed are text files and we need to read a specific line from them. Here are some examples of how the init and main functions can be set up to do this.

Sequential access

The simplest version is sequential access where in each iteration of main we want to read the next line in the source text file.
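The pattern can be tried outside the daemon with a self-contained sketch. A plain loop stands in for the daemon's repeated calls to main, and a temporary file stands in for $$::job{'file'}. Each iteration reopens the file, seeks to the saved byte offset, reads one line and stores the new offset, so nothing stays open between iterations. The lines are counted in Perl rather than by running wc -l. The line must be read in scalar context: in list context the readline operator returns every remaining line at once.

```perl
use strict;
use warnings;
use File::Temp qw(tempfile);

# A temporary three-line file stands in for the job's source file
my ( $fh, $file ) = tempfile( UNLINK => 1 );
print $fh "alpha\nbeta\ngamma\n";
close $fh;
my %job = ( file => $file, fptr => 0 );

# init: count the lines in Perl (instead of running wc -l) to get the job length
open my $in, '<', $job{'file'} or die "Couldn't open $job{'file'}: $!";
my $lines = 0;
$lines++ while <$in>;
close $in;
$job{'length'} = $lines;

# main, once per iteration: reopen, seek, read one line, save the new offset
my @seen;
for my $wptr ( 0 .. $job{'length'} - 1 ) {
    open my $input, '<', $job{'file'} or die "Couldn't open $job{'file'}: $!";
    seek $input, $job{'fptr'}, 0;
    my $line = <$input>;    # scalar context: exactly one line
    $job{'fptr'} = tell $input;
    close $input;
    chomp $line;
    push @seen, $line;
}
print join( ' ', @seen ), "\n";    # prints "alpha beta gamma"
```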

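sub initFoo {
    my $file = $$::job{'file'};

    # Count the lines in the source file: this is the number of iterations needed
    my $lines = 0;
    if ( open INPUT, '<', $file ) {
        $lines++ while <INPUT>;
        close INPUT;
    } else {
        workLogError( "Couldn't read input file \"$file\", job aborted!" );
        workStopJob();
    }
    $$::job{'fptr'} = 0;
    $$::job{'length'} = $lines;
    1;
}

sub mainFoo {
    my $file = $$::job{'file'};
    my $fptr = $$::job{'fptr'};
    if ( open INPUT, '<', $file ) {
        # Continue from the byte offset reached in the previous iteration
        seek INPUT, $fptr, 0;

        # Read exactly one line (scalar context), then remember where it ended
        my $line = <INPUT>;
        process_a_line( $line );

        $$::job{'fptr'} = tell INPUT;
        close INPUT;
    } else {
        workLogError( "Couldn't read input file \"$file\", job aborted!" );
        workStopJob();
    }
    1;
}

Random access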

This example shows how to allow any line or lines in the file to be read during each execution of main. This is not often required, but it is difficult to do when needed, so it is included here mainly for re-use purposes. The init function scans the file and creates an index file of byte offsets for all the lines in the source file.
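The index idea can also be tried on its own in plain Perl, without the daemon; the read_line_at helper is made up for this sketch. Each entry is a 4-byte big-endian offset written with pack 'N', so the entry for line n (counting from 0) starts at byte 4 * n of the index file.

```perl
use strict;
use warnings;
use File::Temp qw(tempfile);

# A temporary four-line file stands in for the job's source file
my ( $fh, $file ) = tempfile( UNLINK => 1 );
print $fh "zero\none\ntwo\nthree\n";
close $fh;
END { unlink "$file.idx" if defined $file }    # tidy up the index afterwards

# init: write one 4-byte big-endian offset (pack 'N') per source line
my $count = 0;
{
    open my $input, '<', $file or die "Couldn't open $file: $!";
    open my $index, '>', "$file.idx" or die "Couldn't create $file.idx: $!";
    binmode $index;
    my $offset = 0;
    while ( <$input> ) {
        print $index pack( 'N', $offset );
        $offset = tell $input;
        $count++;
    }
    close $index;
    close $input;
}

# main: fetch line $n (0-based) via its index entry, without scanning the file
sub read_line_at {
    my ( $path, $n ) = @_;
    my $size = length pack( 'N', 0 );    # 4 bytes per entry
    open my $index, '<', "$path.idx" or die "Couldn't open $path.idx: $!";
    binmode $index;
    seek $index, $size * $n, 0;
    read( $index, my $packed, $size ) == $size or die "No entry for line $n";
    close $index;
    open my $input, '<', $path or die "Couldn't open $path: $!";
    seek $input, unpack( 'N', $packed ), 0;
    my $line = <$input>;    # scalar context: just this line
    close $input;
    chomp $line;
    return $line;
}

my $two = read_line_at( $file, 2 );
print "$two\n";    # prints "two"
```

Because 'N' is a 32-bit unsigned value, this index format can only address offsets within the first 4 GiB of the source file.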

sub initFoo {
    my $file = $$::job{'file'};
    my $errors = 0;

    # List the byte offsets of each line of the source file in an index file
    my $offset = 0;
    $$::job{'length'} = 0;
    if ( open INPUT, '<', $file ) {
        if ( open INDEX, '>', "$file.idx" ) {
            binmode INDEX;
            while ( <INPUT> ) {
                # One 4-byte unsigned big-endian entry per line
                print INDEX pack 'N', $offset;
                $offset = tell INPUT;
                $$::job{'length'}++;
            }
            close INDEX;
        } else { workLogError( "Couldn't create an index file \"$file.idx\"" ); $errors++ }
        close INPUT;
    } else { workLogError( "Couldn't open input file \"$file\"" ); $errors++ }

    # Report errors and stop job if error count non zero
    if ( $errors > 0 ) {
        workLogError( "$errors errors were encountered, job aborted!" );
        workStopJob();
    }

    1;
}

sub mainFoo {
    my $file = $$::job{'file'};
    my $line = $$::job{'line'}; # the line of the source text we want to read this iteration

    if ( open( INDEX, '<', "$file.idx" ) && open( INPUT, '<', $file ) ) {
        # Use index file to obtain the byte-offset into the source file of the specified line
        binmode INDEX;
        my $size = length pack 'N', 0;
        seek INDEX, $size * $line, 0;
        read INDEX, my $offset, $size;
        $offset = unpack( 'N', $offset );

        # Read just the one line of the source file starting at the offset (scalar context)
        seek INPUT, $offset, 0;
        my $text = <INPUT>;
        process_a_line( $text );
    } else {
        workLogError( "Couldn't read \"$file\" or its index, job aborted!" );
        workStopJob();
    }
    close INDEX;
    close INPUT;
    1;
}

Using the ImportCSV job type

The ImportCSV job type is a generic job for importing CSV text files into wiki articles. It consists of the ImportCSV.pl Perl script, to be required in /var/www/tools/wikid.conf, and the ImportCSV.php script, which must be included in the wiki's LocalSettings.php.

Each line in the file is a list of text fields separated by a tab character. The first line of the file is taken to be the names of the fields. Following is an example of some typical input data:

Title	FirstName	Initial	Surname
Mr.	Bob	G.	McFoo
Mrs.	Sally	H.	Barson
Mr.	Joe		Bazberg
Dr.	John		Bizman
Miss	Mary	B.	Buzby

The import procedure creates an article for each row of the input file (or edits it if it already exists). For example, the first row of the example input above may become an article called Mr. Bob G. McFoo containing the following:

{{Person
| Title = Mr.
| FirstName = Bob
| Initial = G.
| Surname = McFoo
}}
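The mapping from a row to the template call can be sketched as follows. This is a hypothetical illustration written for this page, not the code of ImportCSV.pl, and the row_to_template name is made up.

```perl
use strict;
use warnings;

# Hypothetical sketch, not the ImportCSV.pl code: build a template call from
# the tab-separated header line and one data line of the input file.
sub row_to_template {
    my ( $template, $header, $line ) = @_;
    chomp( $header, $line );
    my @names  = split /\t/, $header;
    my @values = split /\t/, $line, -1;    # -1 keeps empty trailing fields
    my $text = "{{$template\n";
    $text .= "| $names[$_] = " . ( $values[$_] // '' ) . "\n" for 0 .. $#names;
    return $text . '}}';
}

my $text = row_to_template( 'Person', "Title\tFirstName\tInitial\tSurname\n", "Mr.\tBob\tG.\tMcFoo\n" );
print "$text\n";    # prints the {{Person ...}} call shown above
```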

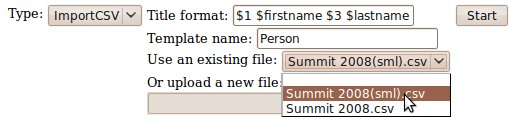

A few parameters need to be supplied to the ImportCSV job: the source filename, the template to use (in this case "Person"), and which fields to construct the article title from (in this case all four fields in order, separated by spaces). The screenshot above shows the form filled in to give the format shown above. Note that the title format parameter can reference the columns numerically, by name, or both.

- Note 1: if the title format parameter is left empty, the article title will be a GUID.

- Note 2: multiple spaces are converted to single spaces, in case any of the fields, such as Initial, are empty.

- Note 3: named field references are case-insensitive.
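A sketch of how these title rules could be implemented is shown below. It is not taken from ImportCSV.pl. The $1 / $Name placeholder syntax and the format_title name are assumptions for illustration, and the random GUID-style string is only a stand-in for a proper GUID generator.

```perl
use strict;
use warnings;

# Hypothetical sketch of the title rules in the notes above. The "$1" / "$Name"
# placeholder syntax is an assumption made for this example only.
sub format_title {
    my ( $format, $names, $values ) = @_;

    # Note 1: no format gives a GUID-style title (random stand-in, not a real GUID generator)
    unless ( defined $format && length $format ) {
        return sprintf '%04x%04x-%04x-%04x-%04x-%04x%04x%04x', map { int rand 0x10000 } 1 .. 8;
    }

    # Note 3: look fields up by lower-cased name so references are case-insensitive
    my %byname;
    @byname{ map { lc $_ } @$names } = @$values;

    # Replace $N (1-based column number) or $Name with the field's value
    ( my $title = $format ) =~ s{\$(\w+)}{
        my $ref = $1;
        my $value = $ref =~ /^\d+$/ ? ( $ref > 0 ? $values->[ $ref - 1 ] : undef ) : $byname{ lc $ref };
        defined $value ? $value : '';
    }ge;

    # Note 2: collapse the extra spaces left by empty fields, then trim
    $title =~ s/ {2,}/ /g;
    $title =~ s/^ | $//g;
    return $title;
}

my @names = qw(Title FirstName Initial Surname);
my $title = format_title( '$1 $firstname $Initial $4', \@names, [ 'Mr.', 'Joe', '', 'Bazberg' ] );
print "$title\n";    # prints "Mr. Joe Bazberg"
```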

See also

- Wiki daemon

- Set up a new bot job

- wikid.pl - the Wiki Daemon script

- Extension:WikidAdmin - the code for the Wiki Daemon Administration special page