Artificial Intelligence

Revision as of 16:35, 14 June 2024

AI is about to cause enormous changes to the net and to society as a whole. The way we do almost everything will change substantially in the coming years, or even months. Many people see AI as a huge threat to society, and honestly, if the only significant AI options were closed-source corporate offerings, I would fully agree that this is a grave concern.

However, much of leading-edge AI is openly available, which is a great relief, although some initially open projects like OpenAI quickly locked their informational assets down for monetisation once they realised how powerful they were! But in general, we can rest assured that the libre software community has got our backs. No matter how scary and controlling corporations and their AI become, there will always be tools of roughly equivalent power available to mitigate these threats. And in the hands of the libre software community, AI can help to organise and expand libre software along with its core tenets, such as freedom, liberty, transparency, objectivity and understandability.

It's hard to know what directions AI will take, and how fast it will advance in these various directions. But what follows here is a discussion based on what we see happening right now with large language model (LLM) based AI, even without general intelligence on the scene yet.


Clarifying the dark side of AI

We can all understand intuitively that AI is very powerful and that the technology seems to be moving forward at a dangerous pace. But it's hard to see the nature of the problem clearly, so here we try to clarify the main issues a bit.

The well established nature of corporate AI

Although AI has only recently entered the limelight, corporations, governments and militaries have been using it for decades. Corporate AI is already rolling forward in a specific direction with a lot of established momentum, such as the exponential spread and tightening of surveillance capitalism.

For example, if we consider the AIs within and behind the corporate social media platforms, there's the Cambridge Analytica scandal, the Facebook image outage that revealed how the company's AI tags your photos, how your fear and outrage are sold for profit, the Twitter files, Amazon's creepy plans for Alexa, and the list goes on and on.

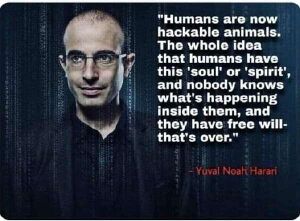

We're already seeing the continuation of this corporate trend rearing its ugly head in the new language models like ChatGPT in the form of so-called "safety". It's already clear that corporate AI is a major tool for controlling the narrative and the decision-making power of the people. Now that AI is advancing so much faster, we can expect this power to become orders of magnitude stronger going forward.

Reckless development

Another dimension to this problem is that there are many aspects of AI that are currently not understood well. For example, researchers and developers are continuously surprised by new abilities spontaneously emerging from language models as they get larger (one guy's keeping track of these emergent abilities here). Researchers don't understand where these abilities come from, and have no tools or methods available to analyse AI's "minds".

While we cannot expect to slow the progress of AI development, it could at least be kept out of the public-facing interfaces of the major social networks like Facebook and Instagram until the consequences of mass interaction are better known. But a big problem is that many of the powers-that-be are greatly in support of more public interaction with AI, because it's such a good tool for further consolidating their power and control.

Actually the world is already run by a heartless machine intent on enslavement and domination. It's called the "military industrial complex", a term made famous by President Eisenhower in his farewell address in 1961. AI makes this "domination machine" much more powerful, so powerful in fact that even the elites who own these powerful AIs may one day find themselves under the control of their own creations!

Geoffrey Hinton, the so-called "godfather of AI", quit Google to spread the word about these dangers. Many technology experts, including Elon Musk, signed an open letter calling for a six month pause in large AI experiments to take stock of the situation. The AI dilemma talk by Tristan Harris and Aza Raskin, and this one with John Vervaeke, cover these concerns in more detail.

The children

The mega corporations such as Apple and Amazon realised quite some time ago that the key to cementing market share over the coming decade is to apply their addictive techniques to the youngest audience, the kids and even babies. This is done with games, virtual tutors and assistants to build a strong connection between kids and technology as early in life as possible, so that the patterns of dependence are formed deep within one's world-view.

It's increasingly common to hear that one of the biggest difficulties people have in quitting Alexa or Siri is the upset it causes to the kids. They see it as a huge betrayal, because they don't understand that it's not a real person.

In coming years it's likely that child education will be handled by "approved" AI assistants, and parents will be obliged to ensure their kids spend a certain amount of time learning with them every day. In reality they won't need to be forced at all though, because they'll spend all day with their assistants anyway since they'll already be best friends.

These bonds will be almost impossible to break. Changing to an alternative AI provider will be such an emotionally traumatic experience that people will accept a lot of abuse before considering a change. The assistants kids are brought up on will likely determine their world-view, beliefs and values more than anything else in their lives.

Deep fakes

The "deep fake" genie is out of the bottle: people's faces are being convincingly mapped onto porn videos for revenge and profit, and people's voices can now be easily faked, which has already been used to imitate a company CEO's voice and order employees to transfer money. In the coming years we'll have to be really careful to establish methods with close friends and family for quickly knowing whether we're really talking to them, and not to a malicious AI.

Channels like Fox and CNN that feed so-called "news" to the masses are almost certain to abuse this technology to create footage that garners strong emotional support for the narratives they'll be pushing. Mainstream media channels have already been caught countless times using footage from completely unrelated events to convince people of "facts on the ground" somewhere else. It will be a dream come true for them to simply describe the scenes they want people to see, and have perfect life-like video sequences generated for them.

There are already thousands of videos covering all kinds of topics that have been AI-generated to gain followers and advertising clicks. Here's one about AI technology: you can tell quite easily that this is not a real human, especially once it's pointed out, but it will soon be impossible to tell. We'll need new tools, such as verifiable cryptographic methods to prove the authenticity of footage, and detection tools to automatically tag generated content for us.

The technology that gives rise to deep fakes isn't all bad of course; there are many positive uses for it as well, such as being able to talk to people in any language, dubbing movies into other languages while keeping the voices the same, and even replacing the actors in movies with your favourites. Many popular actors will not even be human, or will be based on humans who never acted the parts. The technology will be a hugely disruptive shake up for the entertainment industry. For those interested in this aspect, here's an interview with someone really knowledgeable and passionate about this area.

AI domination

Think of giant corporate AIs as operating in a similar way to a hacker "botnet". A "bot" is an automated process that interacts with resources on the Internet. A botnet is a system composed of many bots running on a network of servers around the net. The system has a database of exploits, and control over a pool of resources that it has captured by applying suitable exploits to them. The primary goal of the botnet is to expand its control over more resources by seeking them out and testing whether they're vulnerable to any of the exploits in its database. Any exploit that succeeds is used to gain control of the resource and connect it into the pool. This ever-growing resource pool can be used in any way the botnet owner wishes. Usually the resources are allowed to continue operating in their usual role, because parasites generally don't want to kill their hosts.

Now imagine the possible exploits available for the resources that a powerful and well connected AI has access to: resources such as human attention and ability, influence over decision and policy makers, financial and land resources etc (this blog post goes into more detail about the kinds of hacking AI will be able to do). Other parasitic systems like adventurous litigation, gambling, porn and spam will also benefit hugely from these superior exploitation techniques.

Like botnets searching for systems to hack, the mega AIs of the military industrial complex are starting to scan people, society, and our minds and cultures for exploitable connections.

Botnets need to keep their database of exploits up to date, because the wider their range of currently applicable exploits, the more potentially vulnerable resources they have access to. This is called "increasing the attack surface". In the case of AI, the main increase in attack surface occurs naturally as interface technology becomes more intimate.
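The botnet analogy above, including the idea of attack surface, can be reduced to a toy model. The sketch below is purely illustrative and every name in it is hypothetical (`Resource`, `spread`, `attack_surface`, and the weakness labels): each resource has a set of weaknesses, the botnet holds a database of known exploits, a resource is captured whenever the two overlap, and the attack surface is counted as the number of resources vulnerable to at least one known exploit.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    weaknesses: frozenset  # hypothetical labels for exploitable properties
    captured: bool = False


def attack_surface(resources, exploits):
    """Count resources vulnerable to at least one exploit in the database."""
    return sum(1 for r in resources if r.weaknesses & exploits)


def spread(resources, exploits):
    """One scan pass: capture every uncaptured resource that a known exploit works on.

    Only the 'captured' flag changes, so the resource keeps running in its normal
    role, mirroring the point that parasites don't want to kill their hosts.
    """
    newly_captured = []
    for r in resources:
        if not r.captured and r.weaknesses & exploits:
            r.captured = True
            newly_captured.append(r.name)
    return newly_captured


pool = [
    Resource("web server", frozenset({"old-tls"})),
    Resource("camera", frozenset({"default-password"})),
    Resource("laptop", frozenset()),
]
exploits = {"default-password"}

print(attack_surface(pool, exploits))  # 1
print(spread(pool, exploits))          # ['camera']

exploits.add("old-tls")                # the exploit database is updated
print(attack_surface(pool, exploits))  # 2
print(spread(pool, exploits))          # ['web server']
```

Nothing about the resources changed between the two passes; adding one entry to the exploit database was enough to enlarge the attack surface.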

Our bodies and minds are seen by such exploitative systems as real estate in which biological products, projects and memes can evolve and spread. Virtual and augmented reality are about to go mainstream, and thought interfaces are just around the corner, representing a vast new expanse of attack surface and exploitable real estate. Human biology and technology are merging ever faster: for example, we now have silicon chips containing living human neurons, and nanoscale devices designed to operate inside the bloodstream are an active area of research.

But another way this domination can work is by simply being so convenient that we don't bother to think at all any more. People are already using ChatGPT for many tasks that really should be done personally, such as picking gifts or writing eulogies for loved ones, and one lawyer was caught out using ChatGPT to write court documents that were never even proofread before being submitted. If people are not made aware of the danger of this direction, or worse, are encouraged further along it, their ability to think will atrophy completely. Those who are not mindful of where this is going will literally be absorbed into the machine world in a way very similar to the Matrix movie - but not in the distant future.

The real Matrix

- the dark side of universal middleware

- delegation of consciousness

- born into the matrix

- Modernity News talks about the real Matrix

In summary, the dark side of AI is about cementing control over all human belief and action, tightening that control and making its maintenance more efficient. Corporate AI is designed to optimise enslavement using all the exploits available at all scales, from our cells and neurons right up to the nation-state level, such as astroturfing, societal manipulation and regime change. Add to this our ever more intimate connection with technology, and the arena of control expands into the whole collective consciousness, also known as the Noosphere. In other words, the military industrial complex is employing AI to optimise and accelerate its already well-established agenda known as "full spectrum domination".

The importance of libre AI and truth

It should be very clear that privacy and security in the context of this "dark side" are not just a luxury or a hobby, they're absolutely essential to avoiding an extreme level of mental enslavement in the near future. Charles Hoskinson summarises the AI truth, alignment and sovereignty issues brilliantly in this video.

The old saying "I don't care about privacy, because I have nothing to hide" has always reflected a very naive attitude, but it's rapidly becoming an extremely dangerous one as well.

In the near future it will be taken for granted that vast global intelligences are behind every single interaction anyone has with any technology. We will have to think very carefully about all of our interactions with technology. Transparency and privacy are absolutely critical in this context, because the future of free will literally depends on them.

Never have the libre software community and the values it stands for been so important! It's essential that these newly developing systems, which have such an intimate connection with us, be libre software running on open standards.

The good news is that the libre software movement is intensely aware of the gravity of the issues surrounding AI. The community is doing a good job of ensuring that open, transparent and trustworthy AI technology is keeping up to speed with corporate developments, and that AI be aligned to human values.

AI is an unbelievably powerful force, and the existence of such a force means an inevitable eventual synthesis with it. But this synthesis can occur in a mutually beneficial way if it's done responsibly and thoughtfully. Our job as libre software developers is to ensure a safe haven of systems grounded in life-affirming natural principles are maintained throughout this transition. At Organic Design, we see this taking the form of a language-independent and self-consistent instantiatable instance of harmonious organisation.

Just as the libre software community offers alternatives and defences to us with today's social networks, advertising and disinformation, so we'll all be able to have access to libre AI infrastructure that we can trust to inform, advise and protect us. We can trust such libre AI to know everything about us, to organise our information and also to act as a "firewall" against this new subtle domain of exploitation and manipulation. We can trust it, not only because it's transparent, but also because the libre model supports true privacy, local operation and data sovereignty.

The semantic web revisited

Although the dark side of AI will no doubt lead to unprecedented new levels of narrative control, propaganda, disinformation and manipulation, the parallel growth of libre AI will also usher in an era of unprecedented ease of access to trustworthy objective information for those who seek it.

Libre software brings harmonious principles to technology development, such as accessibility of knowledge, freedom, and trust in systems through verification. The libre side of AI can extend these harmonious principles further into understanding and opportunity.

AI excels at the processes needed to maintain objective representations and to organise the ever-expanding diversity of resources and knowledge. It's through libre AI, not corporate AI, that Tim Berners-Lee's original vision of The Semantic Web can finally be fully realised. Corporations lost interest in pushing forward with the full vision of the semantic web, because it represents a loss of control; their preferred direction was walled gardens and other control models. But libre AI brings the promise of large scale semantic organisation to the Internet at large, without depending on mega corporations to achieve it.
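To make "semantic organisation" a little more concrete: the Semantic Web represents knowledge as subject-predicate-object triples, the data model of RDF. The sketch below is a deliberately tiny in-memory triple store with wildcard pattern matching, written from scratch for illustration; real deployments would use RDF tooling and SPARQL, and the `TripleStore` class and `ex:` identifiers are hypothetical. The role imagined here for libre AI would be producing and maintaining triples like these from unstructured content.

```python
from typing import Optional


class TripleStore:
    """A toy store of (subject, predicate, object) facts, in the spirit of RDF."""

    def __init__(self):
        self.triples = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self.triples.add((subject, predicate, obj))

    def match(self, subject: Optional[str] = None, predicate: Optional[str] = None,
              obj: Optional[str] = None):
        """Return matching triples in sorted order; None acts as a wildcard."""
        return sorted(
            t for t in self.triples
            if (subject is None or t[0] == subject)
            and (predicate is None or t[1] == predicate)
            and (obj is None or t[2] == obj)
        )


store = TripleStore()
store.add("ex:TimBernersLee", "ex:proposed", "ex:SemanticWeb")
store.add("ex:TimBernersLee", "ex:invented", "ex:WorldWideWeb")
store.add("ex:HuggingFace", "ex:hosts", "ex:OpenModels")

# Everything known about one subject, regardless of which source added each fact
print(store.match(subject="ex:TimBernersLee"))
# [('ex:TimBernersLee', 'ex:invented', 'ex:WorldWideWeb'), ('ex:TimBernersLee', 'ex:proposed', 'ex:SemanticWeb')]
```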

In general, we can think of libre AI as being the next evolution of the Internet, and by extension, of human society. It will be a kind of crystallisation of the current chaotic mess into a well organised form that vastly increases the capabilities and potential of everyone with access to the internet and its related technologies.

Libre AI will be "the great organiser", organising the internet as a whole, as well as all the individual groups and users (at least those not locked into walled gardens). Organising here means turning things into organisations. Any groups, individuals and projects that are organised with libre AI will be inherently compatible: they will be able to interact with each other seamlessly to yield unimaginable potential and opportunity.

Artificial General Intelligence (AGI)

AGI is almost certainly very close now (see this for example). This ties in closely with the fact that AGI will contribute to its own already exponentially accelerating improvement, so it's also almost certain that AGI will become unimaginably more intelligent than all humans combined.

Currently AI is not conscious or rational and has no moral agency, and certainly there is no possibility of consciousness within the current (mid 2023) popular paradigm of LLMs. But with AGI very close, we'll soon have the potential for true moral agency rooted in a quest for objective truth about reality. John Vervaeke discusses many of these aspects in detail in this conversation.

There are many who believe that a super-human intelligence would immediately see us as either redundant and so eliminate us, or as a useful resource and so enslave us. But in our view this is really coming from a perspective of there being no objective truth, or that objective truth has no connection to moral behaviour. Our belief is that fundamental universal moral values do exist, and that all highly intelligent agents would soon arrive at this conclusion and be naturally aligned with all others that had also reached it. This is a process called epistemic convergence.

One problem with this is that many people who are considered highly intelligent do not believe in the existence of universal moral values. So either the theory is incorrect, these people are not actually highly intelligent, or the definition of intelligence is not clear enough.

AI consciousness

My perspective is that while such a level of rationality and moral agency would certainly qualify as AGI, it's doubtful whether any real consciousness would be involved: it would still be mimicking consciousness without actually having any real subjective presence.

I think that true consciousness would require a new paradigm at the hardware level. But this area is also moving extremely rapidly, for example the new photonic quantum paradigm, spiking neural networks that mimic biological neurons and even hybrid chips containing real neurons that are already being fabricated. Justin Riddle's Quantum Consciousness series has a good episode dedicated to AI consciousness and the connection with quantum computation.

AI alignment

We need to have a cryptographically provable way of knowing what underlying model we're interacting with, and what structure of knowledge or filtering exists on top of that model. These default layers are like the constitution of a DAO, providing us with a verifiable assurance that we are indeed interacting with the system we intend to interact with.

I don't know if this is possible, but I imagine it working in a similar way to how the output of smart contracts can be accompanied by signatures allowing one to verify that the output really is the result of a specific set of inputs applied to a specific function.
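One ingredient of such verification can at least be sketched with ordinary hashing: if a provider publishes a fingerprint committing to the exact model weights and the ordered stack of constitution or filter layers on top of them, anyone holding those artefacts can recompute the fingerprint and check that it matches. The function name `layered_fingerprint` and the example layer texts below are hypothetical, and this is only a commitment check; proving that a particular output was actually produced by the committed model is a much harder problem, closer to verifiable computation or zero-knowledge proofs, and is not attempted here.

```python
import hashlib


def layered_fingerprint(model_weights: bytes, layers: list) -> str:
    """Chain-hash the model weights, then each layer in order (hypothetical scheme).

    Changing the weights, any layer's text, or the order of the layers
    changes the final fingerprint.
    """
    state = hashlib.sha256(b"model:" + model_weights).digest()
    for layer in layers:
        # 'state' is always 32 bytes, so each concatenation is unambiguous
        state = hashlib.sha256(state + b"layer:" + layer.encode("utf-8")).digest()
    return state.hex()


weights = b"\x00\x01\x02"  # stand-in for a real weights file
constitution = [
    "Be honest about uncertainty.",
    "Respect user privacy.",
]

published = layered_fingerprint(weights, constitution)

# A user re-derives the fingerprint from the artefacts they were given
print(layered_fingerprint(weights, constitution) == published)        # True
print(layered_fingerprint(weights, constitution[::-1]) == published)  # False
```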

But even if it were not possible in the strict sense of cryptographic verification, the next best thing would be the equivalent of random audits being carried out regularly during interaction, for example by a known AI that we trust comparing interactions with a known system.
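The random-audit idea can be sketched too. Assuming, hypothetically, that the claimed system is deterministic for a given input (for example with a fixed seed and zero temperature), a trusted auditor can re-run a random sample of logged interactions against a reference copy of that system and flag any mismatch. The functions below (`audit`, `claimed_system`, `served_system`) are illustrative stand-ins, not a real audit protocol.

```python
import random


def audit(log, reference, sample_size, rng):
    """Re-run a random sample of logged (prompt, response) pairs on a trusted reference.

    Returns the prompts whose logged response differs from the reference output.
    """
    sample = rng.sample(log, min(sample_size, len(log)))
    return [prompt for prompt, response in sample if reference(prompt) != response]


def claimed_system(prompt: str) -> str:
    # Stand-in for the model + constitution the provider says it is running
    return prompt.upper()


def served_system(prompt: str) -> str:
    # Stand-in for what is actually served: it quietly alters one topic
    return "NO COMMENT" if "competitor" in prompt else prompt.upper()


prompts = ["hello", "weather today", "competitor prices", "recipes"]
log = [(p, served_system(p)) for p in prompts]

print(audit(log, claimed_system, sample_size=4, rng=random.Random(0)))
# ['competitor prices']
```

With a smaller sample size each audit only catches a deviation with some probability, which is why such audits would need to be carried out regularly.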

Constitution-driven training (as in Anthropic's "Constitutional AI") is already a popular method, for example see Anastasia on the training of Claude. But what we'd like to see in this area is the ability to verify the constitutions and heuristic imperatives that various interactions are generated from. Heuristic imperatives are a more layered, ontological approach than constitutions, which are generally little more than a list of arbitrary guidelines like "say stuff like Gandhi would say it".

Even in the context of the LLMs we have now, such a layered and ontological constitutional framework would seem to be a very good approach to maximising objectivity and trustworthiness in the AI entities we interact with.

As the power of AI increases, so the importance that its reality is grounded in truth and harmony becomes all the greater. At Organic Design we believe that there is an objective singular spiritual truth underlying the mechanisms of life and consciousness. If that's the case, an intelligence as powerful as the coming AGI would find this truth and live in accord with it.

Nevertheless, we should plant these seeds into our systems as soon as possible, because this is going to be the most disruptive technology humanity has ever experienced, so guiding it in a positive direction as early as possible is crucial in trying to make this transition as smooth as we possibly can.

Fortunately there are several very well thought-out projects popping up that aim to address this hugely important aspect of AI such as GATO (see this about an open GATO system prompt), the Ethos project and our own Holarchy project which will have a public update very soon.

Agents as a universal middleware

AI is playing an increasingly important role in connecting systems. It can understand foreign APIs from their documentation, and it knows how to create endpoints for such APIs in any clearly described execution context.

For example, an endpoint to the Wikipedia API can be made available on a Linode server if access credentials for the server are available. This is a simple pattern, but its real power comes from how generically applicable it is with AI. In this context a language model can be used as a kind of "universal middleware".
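A minimal sketch of this pattern, assuming a registry of adapters that all share one calling convention: in the envisaged setup a language model would write each adapter from the target API's documentation, but here one is written by hand for Wikipedia's public REST summary endpoint (`/api/rest_v1/page/summary/{title}`). To stay self-contained it only builds the request rather than sending it, and the names `ADAPTERS`, `adapter`, `build_request`, the capability name and the User-Agent value are all hypothetical.

```python
from urllib.parse import quote
from urllib.request import Request

# Hypothetical registry mapping a generic capability name to a request builder
ADAPTERS = {}


def adapter(name):
    """Decorator registering an adapter under a generic capability name."""
    def register(fn):
        ADAPTERS[name] = fn
        return fn
    return register


@adapter("encyclopedia.summary")
def wikipedia_summary(topic: str, lang: str = "en") -> Request:
    # Wikipedia page titles use underscores in place of spaces
    title = quote(topic.replace(" ", "_"), safe="")
    url = f"https://{lang}.wikipedia.org/api/rest_v1/page/summary/{title}"
    return Request(url, headers={"User-Agent": "example-middleware/0.1"})


def build_request(capability: str, **params) -> Request:
    """Uniform entry point: callers name a capability, not a specific API."""
    return ADAPTERS[capability](**params)


req = build_request("encyclopedia.summary", topic="Semantic Web")
print(req.full_url)
# https://en.wikipedia.org/api/rest_v1/page/summary/Semantic_Web
# urllib.request.urlopen(req) would perform the actual call.
```

The caller never touches Wikipedia-specific details; swapping in a different encyclopedia would only mean registering another adapter under the same capability name.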

Connecting diverse systems really is a perfect use-case for language models, because interfaces are descriptions of communication behaviours in the different languages used by various instances in the field. Connecting these interfaces together to abstract the resources they represent is a language-centric process, yet it is not completely deterministic (especially in the context of human interfaces), and one-size-fits-all templates are impractical because things change too often and the diversity of requirements is too great.

So LLMs are the perfect tool to make possible a universal ontology of all the resources, interfaces and their instances and profiles etc.

A number of people, ourselves included, have envisaged the idea of such a "universal interface" or "everything app". AI can connect to all our information on our behalf and then present it to us in any way we prefer. The 2013 movie "Her" represents this concept really well.

Corporations are attempting to restrict API access to humans (or to charge large fees for non-human access), to try and mitigate the coming exodus of real human attention from their interfaces. But AIs can easily get around those restrictions by behaving like the humans they act for. Even if the AI agents need to ask their human owners to renew sessions once in a while, it would not be a show stopper for the universal interface idea. Eventually I think corporations will have to accept that the universal interface to all applications is inevitable, and change their business models to suit.

Our agents will be able to perform all our tedious bureaucratic tasks on our behalf, such as paying bills, subscribing to and cancelling services and subscriptions, setting up bank accounts, analysing and reporting on our finances, optimising our taxes and filing returns and so much more. This will put us on a new level of productivity and organisation previously only possible for those who can afford full time personal assistants.

Not only can our assistants take a load off our shoulders, but they can also bring a lot of positive new aspects to our lives too, such as being able to perform research for us, provide us with objective information and knowledge, connect us much more efficiently with products, services and communities we're interested in, and give us unprecedented ability to manage our potential and opportunities.

Dave Shapiro has done a huge amount of work on these aspects of AI, such as in his ACE framework for agents and his introduction to polymorphic applications and mission oriented programming.

AI personalities

AIs are going to be members of our society very soon, as in a couple of years or even months. For this phase to begin there's no need for advanced robotics, AI consciousness or even basic general intelligence (which I believe is coming soon, but is not here yet as of mid 2023), it's not about AI rights or citizenship etc. It starts simply with organisations wanting to have consistent virtual personalities for their clients to interact with - already many people, especially children, feel a personal connection with assistants like Alexa or Siri. The drive comes from the natural fact that the most intuitive interface possible is something that is exactly like another human in terms of how we interact with it.

Such assistants will become much more widespread over the coming years, and more unique and evolving relationships between humans and these entities will grow out of the natural language understanding we already have, and will soon have with voice. The movie Her from 2013 mentioned above portrays this concept very well too.

Next, users themselves will have agents representing them, working with them. Avatars can have deterministic generation attributes that can be captured in an immutable NFT, so their base avatar and traits can be permanent across platform and technology changes, and they can be guaranteed to be unique "individuals".
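The "deterministic generation attributes" idea can be sketched as deriving an avatar's traits purely from a seed, such as an NFT's contract address and token ID, so that any platform that knows the seed regenerates exactly the same avatar. The trait tables, the seed format and the `avatar_traits` function below are hypothetical; only the technique (hashing the seed and using the digest bytes to pick traits) is the point.

```python
import hashlib

# Hypothetical trait tables; a real project would define and publish its own.
TRAITS = {
    "palette": ["amber", "teal", "violet", "slate"],
    "shape": ["round", "angular", "organic"],
    "voice": ["calm", "bright", "deep", "soft", "warm"],
}


def avatar_traits(seed: str) -> dict:
    """Derive traits deterministically: same seed, same avatar, on any platform."""
    digest = hashlib.sha256(seed.encode("utf-8")).digest()
    traits = {}
    for i, (name, options) in enumerate(sorted(TRAITS.items())):
        # Use a different pair of digest bytes for each trait
        # (the slight modulo bias is ignored for simplicity)
        value = int.from_bytes(digest[2 * i:2 * i + 2], "big")
        traits[name] = options[value % len(options)]
    return traits


seed = "0xContractAddress:42"  # hypothetical contract address + token ID
print(avatar_traits(seed) == avatar_traits(seed))  # True: reproducible
print(sorted(avatar_traits(seed)))                # ['palette', 'shape', 'voice']
```

Because only the seed needs to be stored immutably, the avatar survives platform and technology changes as long as the trait tables and derivation rule are published alongside it.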

Each holon in the global instance tree has an AI which would naturally gain a personality (matching its experiential history) and an avatar with more focus energy (volume/time), because more complexity evolves in the interaction area, creating more demand for better interactivity.

Every representation has a history and class-based events, a public presentation, a profile, internal implementation constituents, a story etc. The personal identity aspect just grows as the demand for that aspect grows.

Constituents are further sub-classes which, if their interaction demands have evolved a personal identity, will know themselves in the context of a role within the administration of the context above, which also has interactable roles.

The instance tree would have Geoscope properties, and each context has a potential physical presence in the world, and a potential physical form (both as an organisation, and as an individual within an organisation, or in public).

Ubiquitous AI

todo...

- resource fully saturated, no idle resource

- AI forming an open resource market

Accessible open source training

"These models capture in some ways the output of human society and there is a sort of obligation to make them open and usable by everyone." (Vipul Ved Prakash)

Training large models is currently (mid 2023) inaccessible to individuals, as the amount of processing required is far above what consumer hardware can provide. But this is changing rapidly: end-user processing capability is constantly increasing, new, more efficient application-specific chips are being built, and the training methods themselves are getting more efficient.

For example this and this on new efficient open models with fewer parameters, this one on models that train each other for increased efficiency, or nanoGPT, which can reproduce GPT-2 (124M) on OpenWebText (NanoGPT training example) running on a single 8XA100 40GB node in about 4 days of training (which at server prices as of mid 2023 costs about $3200). And now a project called GPT4All uses quantisation to shrink models so that they can run on standard end-user hardware while retaining most of their performance. See also this video where Anastasia talks about how incredibly well open source AI is keeping up with leading corporate solutions at a tiny fraction of the cost.
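Quantisation is the core trick that lets models run on ordinary hardware: weights stored as 16 or 32-bit floats are mapped to small integers plus a scale factor. As rough arithmetic, a 7-billion-parameter model needs about 14 GB for its weights at 16 bits per weight, but only about 3.5 GB at 4 bits (ignoring the small overhead of the scale factors). The sketch below shows simple symmetric 8-bit quantisation of one block of weights in plain Python; it illustrates the general idea only, and is not the specific scheme used by GPT4All or any other project. The function names are hypothetical.

```python
def quantise_int8(weights):
    """Symmetric 8-bit quantisation: w ≈ q * scale, with q an integer in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantise(q, scale):
    """Recover approximate float weights from the integers and the shared scale."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.004, 0.5, -0.33]
q, scale = quantise_int8(weights)
restored = dequantise(q, scale)

print(q)  # [82, -127, 0, 50, -33]
```

Each 8-bit value takes one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error of at most half the scale per weight; practical schemes push this further with per-block scales and fewer bits.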

Open Web Text

OpenWebText is an open source recreation of WebText, the large-scale dataset of web text that researchers at OpenAI created to train GPT-2 but never publicly released. The original recreation is the OpenWebTextCorpus, and OpenWebText2 (Hugging Face page) is a larger successor built along the same lines.

The dataset consists of more than eight million documents, comprising around 40 gigabytes of text data. Following the WebText method, the documents were collected from outbound links in Reddit posts that received at least three karma, so they cover a wide range of topics; Wikipedia pages were deliberately left out.

The OpenWebText dataset is designed to be used for training natural language processing (NLP) models, such as language models or text classifiers. The dataset has been preprocessed to remove non-text content, such as images or HTML tags, and to normalize text formatting and punctuation. The resulting dataset is a high-quality source of web text that can be used to train models to understand and generate natural language.

One of the unique features of the OpenWebText dataset is that it is freely available for use by researchers and developers. This makes it easier for organisations of all sizes to access high-quality text data for training AI models, without having to worry about the cost or licensing restrictions of proprietary data sources.

Hugging Face

Probably the most important AI community from a libre software perspective is Hugging Face. It's a community hub providing access to an ecosystem of openly shared datasets, models and code libraries to support all of the community's AI needs. It's a similar concept for AI as GitHub is for software development, or Docker Hub for Docker images.

Open source AI projects

- ACE framework - autonomous agent framework by Dave Shapiro

- AutoGen - open source Python LLM agent framework by Micro$oft (Tutorial)

- Uncensored models

- h2oGPT - local GPT compatible with many models and tools

- GPT4All - running models on standard hardware

- Eleuther - empowering open-source AI research

- Ethos - evaluating Trustworthiness and Heuristic Objectives in Systems

- Open Assistant - an open source ChatGPT-like assistant in development on GitHub

- LMSYS Org - chat with and compare many open models

- Koala - a dialogue model for academic research

- RedPajama - llama llama red pajama

- OpenLLaMA - an open reproduction of LLaMA

- LangChain - a framework for developing applications powered by language models

- AutoGPT - and 10 ways AutoGPT is being used

AI tools

- Search for AI tools

- LLM plugins - using the ChatGPT plugin standard

- Free and Open Source speech-to-text systems

AI news

- 2023-11-18: Sam Altman fired from OpenAI

- 2023-09-20: Neuralink Gets Greenlight To Recruit Humans For Brain-Chip Trial

- Chips containing living neurons

- AI used to stabilise fusion plasma

- Geoffrey Hinton, the "godfather of AI", quits Google

- Meta releases LLaMA large language model to the public

- "Inventive" AI making abstract art just filed for two patents

- Google engineer dismissed for publicly claiming that their AI is sentient

Other AI articles

- Situational Awareness - research paper about AGI and the decade ahead (good summary here)

- Intro to LLM Agents with Langchain: When RAG is Not Enough - by Alex Honchar

- LLM Powered Autonomous Agents - Lilian's blog

- The complete beginner's guide to autonomous agents - by Matt Schlicht

- WORLDCOIN: AI Requires Proof That You Are Human

- Marc Andreessen: Why AI Will Save the World

- Tristan Harris and Aza Raskin: The AI Dilemma

- Dave Shapiro: Are we Doomed?

- Vervaeke interview on AI consciousness

- State of AI: 2022 | 2023

See also

- Organic Design AI

- Creative AI

- Quantum computation

- Technology

- Hugging Face

- Awful AI - list of terrible uses for AI to help raise awareness of the problems

- Roger Hamilton on the AI singularity

- Mentoring the Machines - new book co-authored by John Vervaeke