Difference between revisions of "Configure wiki security"


Latest revision as of 18:11, 22 May 2015


Error reporting

Error details should only be sent to sysops. A plain error message should be presented to all other users. The following can be added to LocalSettings.php to set this up. This snippet refers to an error.php file such as this one: http://svn.organicdesign.co.nz/filedetails.php?repname=extensions&path=%2Ferror.php

ini_set( 'display_errors', 'off' );
$wgShowExceptionDetails = false;
$wgShowSQLErrors = false;
set_exception_handler( 'wfDisplayError' );
set_error_handler( 'wfDisplayError' );
$wgExtensionFunctions[] = 'wfErrorReporting';

// Re-enable detailed error output, but only for sysops
function wfErrorReporting() {
    // Without this declaration the function would only see empty local variables
    global $wgUser, $wgShowExceptionDetails, $wgShowSQLErrors;
    if( in_array( 'sysop', $wgUser->getEffectiveGroups() ) ) {
        ini_set( 'display_errors', 'on' );
        ini_set( 'error_reporting', E_ALL );
        $wgShowExceptionDetails = true;
        $wgShowSQLErrors = true;
    }
}

// Discard any pending output and show the plain error page instead
function wfDisplayError() {
    global $wgOut;
    $wgOut->disable();
    wfResetOutputBuffers();
    $code = 500;
    include( dirname( __FILE__ ) . '/error.php' );
    exit;
}
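The error.php file itself is not reproduced here. As a rough sketch only, assuming PHP 7 or later, a file of that kind could build a page that carries nothing but the status code and a generic message. The function name buildPlainErrorPage() and the wording of the page are hypothetical and are not taken from the linked file.

```php
<?php
// Hypothetical stand-in for the contents of error.php; the real file may differ.
// $code is expected to come from the including scope (wfDisplayError() sets it to 500).

/**
 * Build a plain error page that contains no details about the failure.
 */
function buildPlainErrorPage( int $code ): string {
    // A few well-known reason phrases; anything else falls back to a generic one
    $reasons = [
        403 => 'Forbidden',
        404 => 'Not Found',
        500 => 'Internal Server Error',
    ];
    $reason = $reasons[$code] ?? 'Error';

    return "<!DOCTYPE html>\n"
        . "<html><head><title>$code $reason</title></head>\n"
        . "<body><h1>$code $reason</h1>\n"
        . "<p>Something went wrong. Please try again later.</p>\n"
        . "</body></html>\n";
}
```

Inside error.php the page would then be sent with http_response_code( $code ) followed by echo buildPlainErrorPage( $code ), so that the client sees the same output whatever actually went wrong.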

This setup works for most cases, but there are others where sensitive information can still get dumped to the client, for example when malformed parameters are passed to load.php, such as load.php?lang=%3C%3E&modules=jquery. Combinations of this type of URL can yield a huge amount of information about the files and paths available, which can then be scanned for vulnerabilities.

Because load.php is not launched from index.php and the exception occurs early on, the normal error-handling settings are ignored and the information is returned to the client. On security-sensitive sites I patch the includes/Exception.php file and add a constructor which simply dies, as follows:

class MWException extends Exception {
    function __construct() { die; }
    // ... the rest of the class is left unchanged

System messages

Another small issue that can be tightened up is that MediaWiki gives specific information about users in its login errors. It reveals whether or not a user exists by reporting either "there's no user named 'foo' in the wiki" or "Incorrect password entered for user 'foo'". A more secure i18n file can be added to override these messages with something more generic:

'nosuchuser' => "The supplied credentials were not valid",
'nosuchusershort' => "The supplied credentials were not valid",
'wrongpassword' => "The supplied credentials were not valid",

Protecting files

MediaWiki has a script called img_auth.php which is used to allow files to be protected. Requests to the image files are made via the img_auth.php script instead of into the image file structure, and the files are stored outside of web-accessible space. More information about the configuration can be found at MW:Manual:Image Authorization.

The setup is quite simple and just involves setting $wgUploadDirectory to the internal absolute location of the images, and $wgUploadPath to the external location of the img_auth.php script.
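As an illustration of those two settings in LocalSettings.php, the fragment below is a sketch only. The directory and the /wiki script path are assumed values chosen for the example, not the ones used on this wiki.

```php
<?php
// Illustrative values only: both paths below are assumptions.
$wgScriptPath = '/wiki';

// Internal absolute location of the uploaded files, outside web-accessible space
$wgUploadDirectory = '/var/lib/mediawiki-private/images';

// External location of img_auth.php, so that file URLs are routed through the script
$wgUploadPath = "$wgScriptPath/img_auth.php";
```

With values like these, a file would be requested through a URL beginning with /wiki/img_auth.php/ rather than through a path into the image directory.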

Unfortunately this method seems to have a problem with Friendly URL's, so I had to patch the img_auth.php script so that the title part of the PATH_INFO would be extracted properly.

$matches = WebRequest::getPathInfo();
$path = $matches['title'];
// Codebase hack - title not extracted properly for ArticlePath = "/$1"
$path = preg_replace( '|^.+/img_auth.php|', '', $path );

Apache configuration

The rewrite rules are much simpler when using the above file protection technique because thumbnails and full images are treated the same way and are covered by the rule that handles normal script access within the /wiki directory. Also some additional directory rules should be added to restrict file-browsing.

RewriteEngine On
RewriteCond %{REQUEST_URI} ^/wiki/
RewriteRule (.*) $1 [L]
RewriteRule (.*) /wiki/index.php$1 [L]

<Directory />
    AllowOverride None
    Order Deny,Allow
    Options All -Indexes
</Directory>

Some additional directives can be added in the general configuration, outside the virtual-host context, to prevent the server giving away useful configuration information:

ServerSignature Off

ServerTokens ProductOnly

TraceEnable off
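One way to confirm that these directives have taken effect is to look at the Server header the site returns, which should contain only the product name. The helper below is a hypothetical sketch, assuming PHP 7 or later; the function name and the pattern it uses are illustrative assumptions, not part of Apache or MediaWiki.

```php
<?php
/**
 * Hypothetical helper: report whether a Server header value reveals more
 * than the bare product name, such as a version number or an OS comment.
 */
function serverHeaderRevealsDetails( string $value ): bool {
    // A slash ("Apache/2.2.22"), any digit, or an opening parenthesis
    // ("(Ubuntu)") is treated as extra detail
    return preg_match( '~[/\d(]~', $value ) === 1;
}
```

The header value can be read from the output of any HTTP client and passed in as a string; a value of just "Apache" passes, while something like "Apache/2.2.22 (Ubuntu)" does not.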

It's also a good idea to disable the WebDAV module, since it makes an enormous amount of information and methods accessible to a potential attacker. The main thing that uses it is Subversion over HTTP, but we do all our Subversion interaction over SSH so we don't need WebDAV at all. Disable all dav-related modules by removing the sym-links in Apache's mods-enabled directory:

rm /etc/apache2/mods-enabled/dav*
/etc/init.d/apache2 restart

Basic security testing

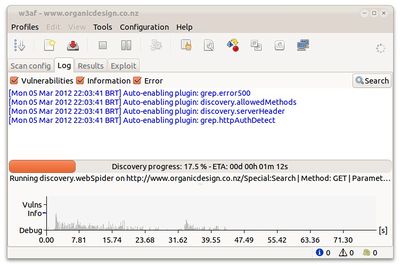

Most test-suites for testing web-application security are very extensive and time-consuming to set up, configure and run. But there are a couple of good applications for those of us who don't really specialise in security but need a quick way to test that our sites are reasonably water-tight.

The first is Web Application Attack and Audit Framework (W3AF), which is known within the open source community to be intuitive but also very thorough. Its stated goal is to create a framework to find and exploit web application vulnerabilities that is easy to use and extend. W3AF is available for all platforms and can perform scans over HTTPS. It installs on Debian/Ubuntu via apt-get and has a GUI which can be launched with the command w3af_gui. It's then a simple matter to set up various profiles and run them against selected URLs.

Another good one is Zaproxy. It runs on all platforms and installs an HTTP proxy server that you connect your browser through. I don't think the basic free package can do HTTPS connections, so you may have to temporarily disable HTTPS on your site during testing. To get it running on Ubuntu, just download, unpack and run zap.sh from the desktop. Then launch your browser and connect through Zap (on localhost:8080 by default). You can then go to your web-site in the browser to give Zap the address, and start the test in Zap from its toolbar. It's not quite as straightforward as w3af, but you'll still be up and auditing within a few minutes of downloading :-)
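Alongside these tools, a quick scripted check can confirm that error responses, such as the one produced by the malformed load.php request described earlier, no longer leak server internals. The function below is a minimal sketch, assuming PHP 7 or later; its name and its patterns are hypothetical, and the list of filesystem roots is an assumption that is far from exhaustive.

```php
<?php
/**
 * Hypothetical check: does a response body look like it leaks server internals?
 * The patterns are illustrative assumptions, not a complete list.
 */
function responseLeaksInternals( string $body ): bool {
    $patterns = [
        '~/(?:var|home|srv|usr|opt)/[\w./-]+\.php~', // filesystem path to a PHP file
        '~Stack trace|Backtrace~i',                  // exception dumps
        '~\bon line \d+~i',                          // tail of a PHP warning or notice
    ];
    foreach ( $patterns as $pattern ) {
        if ( preg_match( $pattern, $body ) === 1 ) {
            return true;
        }
    }
    return false;
}
```

The response body can be fetched with any HTTP client and passed in as a string. A result of true suggests the error handling still needs tightening, while false only means that none of these particular patterns matched.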