Libre software (blog)

Fixing my laptop microphone

Posted by Nad on 17 February 2019 at 00:02

This post has the following tags: Libre software

Last year I got a Purism Librem 13 laptop, which is made for Linux and built around privacy-focused hardware. But about a month after I got it, the microphone stopped working. It wasn't a problem with the built-in kill switch that completely disables the camera and microphone at the hardware level, because the switch still enabled and disabled the camera properly. I took it to some very reliable laptop specialists we know in Caxias, and they said that since all the connections on the main board were fine, the wire had most likely come off the microphone itself. This is a big problem because the microphone sits next to the camera above the screen and is very inaccessible, so I left it, because I didn't want to send it all the way back to the US, which would take months and probably also involve a huge tax when it came back into Brazil!

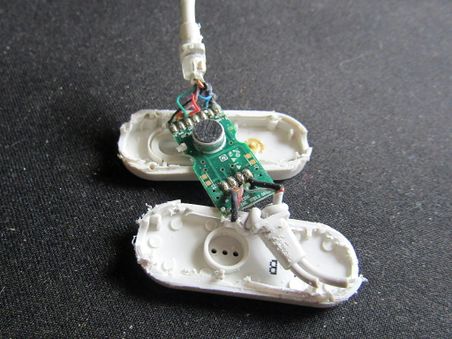

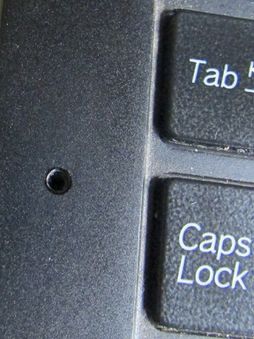

But then we got another Purism for Beth, and after about a month of use exactly the same thing happened! We asked the Purism engineers if they could explain in more detail how to access the microphone, so that our laptop guys could have a go at fixing them. But later an idea occurred to me: why not just replace the microphone with another one in a much more convenient location? So first I located the connectors, which Purism show clearly on their site. It looked a little different on my model, as can be seen in the first photo, but it seemed doable because there was a convenient space right next to the connector where I could fit a new microphone, to the left of the keyboard, which would do just fine. Next I pulled apart some old unused earphones that had a microphone on them, and removed the microphone and some of the wire.

And that's all there was to it, and it works really well! I should really have connected the kill switch up to the new microphone, but I decided to quit while I was ahead because my eyesight's too bad and my soldering iron's too big! Later I'll take both our laptops in to our guys in Caxias to fix Beth's and get both kill switches working. As a final touch I countersunk the hole a little to tidy it up and dabbed it with a black permanent marker to make it a bit more subtle :-)

Mastodon

Posted by Nad on 28 October 2018 at 14:44

This post has the following tags: Libre software

Mastodon is a free, open-source social network server based on ActivityPub. Follow friends and discover new ones. Publish anything you want: links, pictures, text, video. All Mastodon servers are interoperable as a federated network, i.e. users on one server can seamlessly communicate with users on another. This includes non-Mastodon software that also implements ActivityPub, such as GNU social, Friendica, Hubzilla and PeerTube! The easiest way to get started on Mastodon is to join one of the existing instances, but here at OD we're running our own to get familiar with it all, and we're documenting the process here.

Mastodon is federated, which means that you can interact with users who reside on other servers that also use the ActivityPub protocol. Users can export their data, including connections and toots, and can easily move everything over to a new instance at any time. It's becoming increasingly clear that corporate-controlled "walled gardens" like Twitter and Facebook are not worth spending time and effort building up a following in, since they can delete accounts at any time for whatever reasons they like, including simply not falling in line with the mainstream narrative. Personally I never had much of a following on Facebook or Twitter; it felt like a futile thing to do, since all the data I built up was in somebody else's hands. But in the "fediverse" (the universe of connections using the ActivityPub protocol) you control all the information that makes up your posts and connections yourself. Mastodon is similar to Twitter but has some differences. It uses "toots" instead of "tweets", and favourite stars instead of Twitter's hearts or Facebook's likes. Instead of retweets, Mastodon uses a concept called boosting, which works the same way except that you can't add your own text to the boosted message. This has been done deliberately so that only the original message's intent gets spread and trolling in the network is reduced. To mention a remote user in a toot you need to include their domain as well, such as @fred@example.com. Another thing they've done differently is that the favourite and reply counts are not shown, because they didn't want to encourage competitive rating behaviour on toots, which I guess I can understand. @Gargron has talked about the reasoning behind this. To follow a remote user, you go to the user's profile page on their server and follow them there; that brings up an option to enter your @name@server ID so the remote server can request the follow action from the local server that you're logged in to.
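Remote handles like @fred@example.com pack a username and a domain into one string, and federated servers resolve them with a WebFinger lookup (RFC 7033) on the domain's /.well-known/webfinger endpoint. The Python sketch below only splits a handle and builds that lookup URL; it makes no network request, and the helper names are hypothetical rather than part of any Mastodon tooling.

```python
from __future__ import annotations

from urllib.parse import urlencode


def split_handle(handle: str) -> tuple[str, str]:
    """Split '@user@domain' (the leading @ is optional) into (user, domain)."""
    user, sep, domain = handle.lstrip("@").partition("@")
    if not user or not sep or not domain:
        raise ValueError(f"not a remote handle: {handle!r}")
    return user, domain


def webfinger_url(handle: str) -> str:
    """Build the WebFinger lookup URL for a remote handle (no request is made)."""
    user, domain = split_handle(handle)
    query = urlencode({"resource": f"acct:{user}@{domain}"})
    return f"https://{domain}/.well-known/webfinger?{query}"


print(webfinger_url("@fred@example.com"))
# https://example.com/.well-known/webfinger?resource=acct%3Afred%40example.com
```

Opening the resulting URL in a browser should return a JSON document describing the account, provided the remote server supports WebFinger.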
To unfollow a remote user, you can do it from the follow notification if they've followed you as well; otherwise you need to block them and then unblock them. For a more detailed look at how to use Mastodon and why it's been made the way it has, see this excellent introductory guide by @kev@fosstodon.org. The rest of this article focuses on the installation and administration of a Mastodon instance.

General architecture

The main application is written in Rails and runs in the "web" container. There are also two other containers for the application: "streaming", which handles the long-lived WebSocket connections, and "sidekiq" for background processing such as mailing and push notifications. The main database is PostgreSQL, but there's also a Redis database, which is heavily used throughout the application and is best backed up as well, even though the loss of its data can be survived. The web server is not in the Docker containers; instead, your main web server is expected to reverse-proxy to the ports exposed by the application containers.

Installation

Mastodon has a lot of dependencies that we don't have installed on our server, such as PostgreSQL and Ruby, so for us the Docker image is definitely the preferred route, but it's still quite complicated and needs to be done via Docker Compose. This is our procedure, which is based on the official installation instructions.

Clone the Docker repo

First, create a mastodon group with GID 991, which is used by the project, then create a directory for the persistent data that will be used by the containers (we're putting our repo and data in /var/www/domains along with other web applications; this is not under our document root!), clone the Mastodon Docker repo and check out the latest stable version.

Dockerfile

The Dockerfile has a chown -R command in it that takes up to an hour to run whenever docker-compose build is run. This issue is known about, but they've chosen to keep it like this for now so that it doesn't break on older versions of Docker. But it's a real show-stopper, so to get around it you can comment out the slow separate chown command and instead add the ownership change as a --chown option on the preceding COPY instruction.

docker-compose.yml

docker-compose.yml is the file that determines which services are included in the instance, along with their versions and data locations. This file should be backed up in case a full rebuild of your Mastodon instance is required at some point. There is nothing private in this file, so you can store it in your configuration repo or wiki etc. Before running any docker-compose commands we need to edit the docker-compose.yml file. Change all the images to use the version of the repo you chose above, e.g. "image: tootsuite/mastodon:v2.5.2". Note that there are three services that use the mastodon image (web, streaming and sidekiq), and all of them need the version added. You may want to enable the Elasticsearch section too. I like to change the restart options from "always" to "unless-stopped" as well. Uncomment all the volume path lines for data persistence. By default the host part of each (the path before the colon) is just a relative path, which means that the data will end up in directories within the Docker repo directory. We've decided to use a separate mastodon-data directory instead, to keep the data separate from the main codebase, so the relative paths need to be changed to absolute paths in the data directory (/var/www/domains/mastodon-data). But since the data is in another location, a symlink needs to be created in the repo's public directory pointing to the public/system directory in the data directory, because the web server's document root will point at the repo's public directory, which contains all the web files.
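Since the image and restart lines have to be touched again on every upgrade, those docker-compose.yml edits can be scripted. This is a minimal Python sketch, assuming the compose file uses plain `image: tootsuite/mastodon[:tag]` and `restart: always` lines as in the stock file; `pin_mastodon_images` is a hypothetical helper, and it works on plain text with regular expressions rather than a YAML parser so that comments and layout survive untouched.

```python
import re

# Matches "image: tootsuite/mastodon" with or without an existing ":tag".
IMAGE_LINE = re.compile(
    r"^([ \t]*image:[ \t]*tootsuite/mastodon)(?::[\w.\-]+)?[ \t]*$", re.MULTILINE
)
# Matches "restart: always" only; other policies are left alone.
RESTART_LINE = re.compile(r"^([ \t]*restart:[ \t]*)always[ \t]*$", re.MULTILINE)


def pin_mastodon_images(compose_text: str, version: str) -> str:
    """Pin every tootsuite/mastodon image (web, streaming, sidekiq) to `version`
    and change restart policies from 'always' to 'unless-stopped'."""
    # Function replacements avoid backslash-escape surprises in `version`.
    pinned = IMAGE_LINE.sub(lambda m: f"{m.group(1)}:{version}", compose_text)
    return RESTART_LINE.sub(lambda m: f"{m.group(1)}unless-stopped", pinned)
```

To apply it, read docker-compose.yml, pass in the text and the tag you checked out (e.g. v2.5.2), write the result back, and review the change with git diff before building. The volume paths are deliberately left for manual editing.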
.env.production

.env.production is the file that holds your Mastodon instance's basic configuration, such as the domain, secret keys, database and SMTP connections and other services. This file is best backed up (at least the non-reproducible parts such as the secrets and keys) in a secure location, as it is needed if you ever need to do a complete rebuild of your Mastodon instance. Copy .env.production.sample to .env.production and run the setup wizard. Note that most of the questions can be left at their defaults by entering nothing. Answer "yes" to save the configuration, create the schema, create the admin user etc. For the email configuration, it's best to run through the live tests, because the settings can be very temperamental. I've found that localhost doesn't work (most likely because inside a container localhost refers to the container itself rather than the host) and that an actual external domain that resolves to the SMTP server is needed. No login details are needed, though, because the mail server still sees that the request is local and allows it to be sent without credentials or TLS. The configuration that will be used is also output to the screen, and I've found it's best to copy it so that if there are any problems you can manually put these values into .env.production and then run docker-compose build to make the changes take effect. You can also re-run the setup script by deleting all the persistent data from mastodon-data to create a fresh install, and then building again. Note: there's a step that runs the chown -R command over the whole mastodon directory structure, which for some reason takes a very long time to run; just let it be and it eventually finishes.

Start the instance

If all has gone well, you can now run the main Mastodon instance with docker-compose up -d. To stop the instance use docker-compose down. Note that the docker-compose commands must be run from within the mastodon-docker directory.
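To back up just the non-reproducible parts of .env.production, a small script can pull out the secret values. This Python sketch assumes the simple KEY=value format of the sample file; the key names in SECRET_KEYS are the ones I'd expect in a Mastodon 2.5-era file and should be checked against your own copy, and the function names are hypothetical.

```python
from __future__ import annotations

# Assumed names of the non-reproducible values; check your own .env.production.
SECRET_KEYS = ("SECRET_KEY_BASE", "OTP_SECRET", "VAPID_PRIVATE_KEY", "VAPID_PUBLIC_KEY")


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=value lines, skipping blanks and # comments."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def secrets_to_back_up(text: str, keys: tuple[str, ...] = SECRET_KEYS) -> dict[str, str]:
    """Return only the secret entries that actually have a value."""
    env = parse_env(text)
    return {key: env[key] for key in keys if env.get(key)}
```

The returned values belong in a secure store kept apart from the server, rather than alongside the regular mastodon-data backups.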

Connecting with Nginx

Now that we have a running Mastodon instance in a container, we need to connect it to our web server outside the container. This simply involves creating an appropriate server block that connects requests for our Mastodon domain to the ports exposed by the containers. I'm basing my server block on the relevant page of the official documentation; see also this excellent guide by Dave Lane (but note that CSP and XSS headers are now added by Mastodon automatically).

Theming

The basics of adjusting themes are documented, but those instructions only cover making adjustments to the default theme. We're running the mastodon-light theme, so in our case we created an app/javascript/styles/organicdesign.scss containing our new CSS rules, changed variables etc. The variable settings from your existing theme go at the top, before your custom variable changes, and the other CSS files that your parent theme originally included go at the bottom, after your variable changes; check the GitHub repo to see what your original theme includes. You can also see all the variables used by your theme and the common application variables in the repo. You may also want to add your own custom CSS rules, which can go before or after the original CSS includes as required. For these changes to take effect, you also need to edit the config/themes.yml file and change the mastodon-light entry to point to your newly created custom scss file instead of the original one. After you've made your changes, you'll need to stop the system, rebuild it and bring it up again.

Images: one slightly annoying thing about the way the Mastodon skin is done is that it uses a lot of img elements instead of div elements with background images. But you can actually make an image invisible and have it show only a CSS background image, as shown by Marcel Shields.
Using the API

Mastodon comes with a web API so that bots and other apps can interact with instances, their content and their users. The API is covered in the official documentation. Some calls are publicly available, but others, such as posting a status, require an access token. Although there is a workflow for creating an application via the API, the easiest way is to just log in to the account (or create one) manually, then go to the "Development" item in the account settings and add an application. Clicking on the newly created application in the list shows a Client Key, a Client Secret and the API Access Token.

Administration

The first thing to do is add a cronjob to remove old remote media (older than 7 days by default) from the local cache, because it grows huge over time.
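Going back to the API: once you have the Access Token from the Development settings, posting a status is a single authenticated POST to /api/v1/statuses with the token sent as a Bearer header. This Python sketch uses only the standard library; the instance name and token in the test are placeholders, and build_status_request and post_status are hypothetical helper names.

```python
import json
import urllib.request
from urllib.parse import urlencode


def build_status_request(instance: str, token: str, text: str,
                         visibility: str = "public") -> urllib.request.Request:
    """Build (but don't send) a form-encoded POST to the statuses endpoint."""
    body = urlencode({"status": text, "visibility": visibility}).encode("utf-8")
    return urllib.request.Request(
        f"https://{instance}/api/v1/statuses",
        data=body,
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )


def post_status(instance: str, token: str, text: str) -> dict:
    """Send the toot and return the created status as parsed JSON."""
    request = build_status_request(instance, token, text)
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.load(resp)
```

Keeping request construction separate from sending makes it easy to inspect what a bot would post before it actually hits a live instance.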

Upgrade

See the upgrading section of the documentation. It basically just involves updating the repo and checking out the latest tag while preserving your docker-compose.yml settings (but changing the image versions in it). Then run the db:migrate task too, in case any changes have been made to the database schema. Note: I like to manually back up the changed files (check with git status what has changed, but mainly docker-compose.yml and Dockerfile are the important ones to get right), then do a git reset --hard, then fetch and check out the new tag. You can then compare the new config files with the old ones and migrate the necessary changes across. This is better than simply stashing and popping, because the configs can undergo a lot of change from version to version.

Backup

The mastodon-data directory holds everything needed for a backup, but it's important to also back up the PostgreSQL database with a proper dump, since copies of the raw database files can become corrupted and unusable. The Redis database is also very important, but a dump of it is automatically maintained in the redis/dump.rdb file in the data directory and is safe to use for live backup; see this post about backing up and restoring Redis databases. Both databases should be backed up at least daily, but the rest of the files can be backed up less regularly. Note: to back up the directories it's probably best to do a docker-compose stop first and a start afterwards, and to do a database restore you'll need to stop everything and then start only the PostgreSQL container.
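For the daily PostgreSQL dump described above, a small helper can build the dump command, a timestamped file name and a list of old dumps to prune. This is a Python sketch under stated assumptions: the database service is called db and the database and user are both postgres (the defaults I'd expect from the stock compose and sample env files, so check yours), and the file naming scheme and 14-day retention are arbitrary choices, not anything Mastodon prescribes.

```python
from __future__ import annotations

import subprocess
from datetime import datetime, timedelta
from pathlib import Path

DUMP_PREFIX = "mastodon-db-"
DUMP_SUFFIX = ".sql"
STAMP_FORMAT = "%Y%m%d-%H%M%S"


def dump_command(service: str = "db", user: str = "postgres",
                 database: str = "postgres") -> list[str]:
    """pg_dump inside the database container; -T disables the pseudo-TTY so
    the output can be redirected to a file."""
    return ["docker-compose", "exec", "-T", service, "pg_dump", "-U", user, database]


def dump_name(now: datetime) -> str:
    return f"{DUMP_PREFIX}{now.strftime(STAMP_FORMAT)}{DUMP_SUFFIX}"


def stale_dumps(names: list[str], now: datetime, keep_days: int = 14) -> list[str]:
    """Return dump file names older than keep_days, ignoring unrelated files."""
    cutoff = now - timedelta(days=keep_days)
    stale = []
    for name in names:
        if not (name.startswith(DUMP_PREFIX) and name.endswith(DUMP_SUFFIX)):
            continue
        stamp = name[len(DUMP_PREFIX):-len(DUMP_SUFFIX)]
        try:
            taken = datetime.strptime(stamp, STAMP_FORMAT)
        except ValueError:
            continue
        if taken < cutoff:
            stale.append(name)
    return sorted(stale)


def run_dump(backup_dir: Path, now: datetime) -> Path:
    """Write a new dump; must be run from the mastodon-docker directory."""
    target = backup_dir / dump_name(now)
    with target.open("wb") as out:
        subprocess.run(dump_command(), stdout=out, check=True)
    return target
```

A daily cronjob could call run_dump and, only once it has succeeded, delete the names returned by stale_dumps for the backup directory.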


LimeSurvey

Posted by Nad on 24 September 2018 at 17:58

This post has the following tags: Libre software

LimeSurvey is a PHP application for creating and managing surveys and their result sets. It's a great open-source replacement for Google Forms, which is nothing more than yet another sinister way for Google to get access to more of people's private information.

Creating surveys

Surveys can be created, or existing ones modified, from the main page. Surveys are divided into two main aspects in LimeSurvey, the settings and the structure, which are available as the main tabs in the left sidebar of the survey creation screen. Settings are where the form is given a title, description, welcome and thank-you message etc. Structure is the actual content of the survey in the form of groups of questions, where each group is effectively a separate page of questions in the final survey. Each question by default expects just a plain-text answer, but there are many other answer types available, such as numerical, multiple-choice, dropdown lists, checkboxes, dates or even file uploads. You need to add a question group before you can add any questions. You can add all the groups at once and then start adding the questions, or start with the first group. You can edit the details of any group, or of any question within a group, at any time by clicking on it in the left sidebar when the structure tab is selected. The right panel of the screen is for editing whatever is selected in the left sidebar, and the top bar of the right panel holds the buttons related to it, for saving the changes etc. When you're editing or creating a question, the main panel on the right is divided into two sides. The left half holds the title, description and text of the question itself, and the other half is the answer part, where the answer type (type of input) is specified. After you've entered the question text and selected the input type, save and close the question. If the question type was a list type, you can edit the list options text with the "edit answer options" button on the top bar. The top bar is also where you can preview the question you've just made to see if it looks right, or even preview the whole group or survey. Previews open in another tab.
Running surveys

After you're happy with the survey, go back to the settings tab in the left sidebar and open the "Publication and access" option, where you can specify various final details about the survey, such as how long it's available for and whether it's public or restricted. Finally, click "Activate this survey", which locks it against further changes, gives you the link to your survey and makes it available to the participants. You can see the data that's been collected at any time from the "Notifications and data" option in the settings tab of the sidebar. You can use the "Export" button in the top bar to export the results as a spreadsheet for final analysis; or, to display the results in table format on screen, click the "Responses" dropdown button in the top bar, select "Responses and statistics" and then "Display responses" from the top bar.
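Once the results are exported as a CSV file, simple analysis like counting the answers to a multiple-choice question only needs the standard library. This Python sketch assumes a comma-separated export with a header row; the column name Q1 in the test is a hypothetical question code, so substitute the heading your export actually uses.

```python
import csv
import io
from collections import Counter


def tally_column(csv_text: str, column: str, delimiter: str = ",") -> Counter:
    """Count the non-empty answers in one column of an exported CSV."""
    reader = csv.DictReader(io.StringIO(csv_text), delimiter=delimiter)
    if reader.fieldnames is None or column not in reader.fieldnames:
        raise KeyError(f"column not found: {column}")
    # Blank cells (unanswered questions) are skipped rather than counted.
    return Counter(row[column] for row in reader if row[column])
```

Calling most_common() on the result gives the answers sorted by frequency, which is often all a quick summary needs.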

Red Matrix

Posted by Nad on 23 June 2013 at 17:11

This post has the following tags: Libre software

Red is kind of like a decentralised social network (along the lines of identi.ca, Friendica and Diaspora), but they've thrown away the rule book. Red has no concept of "people" or "friends" or "social". Red is a means of creating channels which can communicate with each other and grant other channels permission to do things (or not). These channels can look like people, they can look like friends and they can be social.

They can also look like a great many other things: forums, groups, clubs, online websites, photo archives and blogs, wikis, corporate and small business websites, etc. They are just channels, with permissions that extend far beyond a single website. You can make them into whatever you wish them to be. You can associate web resources and files with these channels or stick with basic communications. There are no inherent limits. There is no central authority telling you what you can and cannot do. Any filtering that happens is by your choice. Any setting of permissions is your choice and yours alone. You aren't tied to a single hub/website. If your own site gets shut down due to hardware or management issues or political pressure, the communication layer allows you to pop up anywhere on the Internet and resume communicating with your friends, by inserting a thumb drive containing your vital identity details or importing your account from another server. Your resources can be access-controlled to allow or deny any person or group you wish, and these permissions work across the Red network no matter which provider hosts the actual content. Red "magic-auth" allows anybody from any Red site to be identified before allowing them to see your private photos, files, web pages, profiles, conversations, whatever. To do this, you only log in once to your own home hub. Everything else is, well, magic. Red is free and open source and provided by volunteers who believe in freedom and despise corporations which think that privacy extortion is a business model. The name is derived from the Spanish "la red", i.e. "the network".

Our installation

We have a test installation running at red.organicdesign.co.nz.
The installation process was a simple standard LAMP application installation: just git clone the code base and add a few rewrite rules. They recommend using their .htaccess, but we have this functionality disabled, so we added the rules to our global Apache configuration instead. When I first installed it, the installer pointed out a number of environment options that needed fixing, which was quite straightforward, but for some reason the application wouldn't run, so I left it for a couple of months and then did a git pull to update the code base and tried again. Something must have been fixed, because now our test installation is up and running :-)

Path bug

For some reason our installation had a problem whereby any pages at sub-paths such as /profile/foo or /help/intro wouldn't load their CSS or JS, because the base-url setting would become a sub-directory instead of the root of the domain. I isolated this to the setting of $path on line 634 of boot.php, which was basing its value on $_SERVER['SCRIPT_NAME'] to test whether the installation was running in a sub-directory. I think perhaps it should be testing $_SERVER['SCRIPT_FILENAME'] instead, but I'm not sure. I've contacted the lead developer with this info, and for our installation I've just forced $path to an empty string, since we're not in a sub-directory.
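The idea of testing SCRIPT_FILENAME instead can be illustrated outside PHP. This Python sketch (not Red's actual code; both function names are hypothetical) contrasts deriving the sub-directory from the script's URL path with deriving it from the script's real location on disk relative to the document root, which doesn't depend on how the rewrite rules shape the request path.

```python
import posixpath


def subdir_from_script_name(script_name: str) -> str:
    """Sub-directory guessed from the script's URL path (like SCRIPT_NAME)."""
    return posixpath.dirname(script_name).strip("/")


def subdir_from_script_filename(script_filename: str, document_root: str) -> str:
    """Sub-directory from the script's real location on disk (like
    SCRIPT_FILENAME) relative to DOCUMENT_ROOT; '' means the domain root."""
    script_dir = posixpath.dirname(script_filename)
    rel = posixpath.relpath(script_dir, document_root.rstrip("/") or "/")
    return "" if rel == "." else rel
```

If the server ever reports a SCRIPT_NAME that reflects the rewritten request (say /profile/index.php) rather than /index.php, the first function returns a spurious "profile" sub-directory, which would match the symptom described above; the second still returns an empty string for an installation at the document root.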


Nextcloud

Posted by Nad on 18 February 2018 at 00:06

This post has the following tags: Libre software

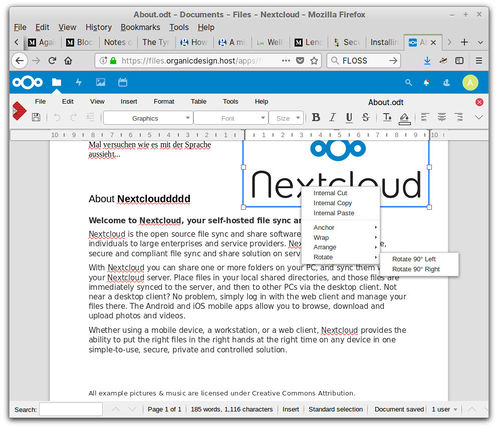

Nextcloud is a kind of personal Dropbox solution which is completely free and open source. There are many add-on applications available such as calendar, tasks, chat and collaborative file editing.

Installation

This is our procedure for installing Nextcloud and LibreOffice Online on a Debian-based server. LibreOffice has included a component that allows it to be served over HTTP since version 5.3, but to use it you need to integrate it with a cloud file system that supports it. Nextcloud, which is a brilliant groupware suite in its own right, supports LibreOffice Online and integrates perfectly with it. I'm following the instructions created by Collabora and Nextcloud, and more specifically their Nginx variation. I'm documenting my specific configuration here, including LetsEncrypt and other aspects that are out of the scope of those instructions, so that we have a more easily reproducible procedure. I'm using the office.organicdesign.host domain here, which you'll need to change for your own purposes.

Set up the server

Bring the machine up to date and install the required dependencies.

Configure the web server and SSL certificates

In the Nginx configuration for this site, add a basic block for handling plain HTTP (non-HTTPS) requests. This will allow the LetsEncrypt domain validation requests to pass, but all other requests will be bounced to their respective HTTPS counterparts.

Install and configure Nextcloud

Nextcloud is a Dropbox-style web application which is completely open source, so you can install it on your own server, and it has built-in integration for working with LibreOffice Online. Nextcloud is written in PHP, so first download the source and unpack it into /var/www/nextcloud, ensure it's accessible by www-data, and then add the recommended Nginx configuration from the Nextcloud documentation. In this configuration we need to adjust the domain names and delete the port 80 block, since we already have one, described above, to handle LetsEncrypt domain validation requests. Also remove the SSL lines and replace them with an include of the nginx.ssl.conf we made above. A database and user will need to be created, and then you can run through the install by going to the files.organicdesign.host domain. After you've successfully installed Nextcloud, go to the admin updater to check for any problems or optimisations, and upgrade to the latest stable version.

Install LibreOffice Online

We now need to add a reverse-proxy block to our Nginx configuration; you can use the block from those instructions (see below for the Docker instructions). Adjust the server_name parameter to the domain you're using, and replace the SSL directives with an include of the nginx.ssl.conf we created above. Note: if you're using the same domain for both Nextcloud and LOOL, you need to put the contents of the LOOL Nginx server block inside the Nextcloud block.

From the Docker image

By far the simplest method is to use Docker. See the Docker installation instructions for details, which basically involve simply pulling the image and running it.

Using the Debian package

Although using Docker is by far the simplest method, Docker is heavy, and you may prefer to install loolwsd from a native Debian package to reduce dependencies and have it running in the native environment. Most of this is taken directly from the start script in the Docker image source.
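Collabora's domain setting is treated as a regular expression naming the Nextcloud host that may use the server, so the dots in the host name need escaping. This Python sketch builds a docker run argument list for the collabora/code image; binding port 9980 to 127.0.0.1 (so only the local Nginx reverse proxy can reach it) and the unless-stopped restart policy are my assumptions rather than anything quoted from the official instructions, which may add further options, and collabora_run_args is a hypothetical helper.

```python
from __future__ import annotations


def collabora_run_args(nextcloud_host: str, port: int = 9980,
                       image: str = "collabora/code") -> list[str]:
    """Build a `docker run` argument list for the Collabora Online image.

    The `domain` variable is a regular expression, so literal dots in the
    Nextcloud host name are escaped."""
    domain_regex = nextcloud_host.replace(".", r"\.")
    return [
        "docker", "run", "-t", "-d",
        # Listen on loopback only; Nginx on the same host proxies to it.
        "-p", f"127.0.0.1:{port}:{port}",
        "-e", f"domain={domain_regex}",
        "--restart", "unless-stopped",
        image,
    ]
```

The list can be passed to subprocess.run(..., check=True). Because the arguments don't go through a shell, a single backslash before each dot is exactly what ends up in the container's environment, with no extra shell quoting needed.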

Finishing up

Now you can enable the Collabora Online application in your Nextcloud from settings/apps, then go to Collabora Online in the administration section of settings and set the URL of your application to https://office.organicdesign.host. You should now be ready to test creating and editing some office documents in your files!

Mail: you can configure and test your site's mail-out settings from settings/administration/additional settings. Set your email to something external to the server first, so that the test messages are a proper test, since sometimes you may have settings that work for local addresses but not for external ones. For example, on our server encrypted and authenticated SMTP only works for connections coming in from outside, so since Nextcloud is on the same host, it needs to use unencrypted SMTP connections on port 25.

Registration: after that, you may want to install the registration add-on so that users can register themselves. You can configure it from the same place as the mail settings, and define a default group and whether accounts need admin approval; if so, each account is initially disabled and needs to be enabled by an admin.

Upgrading

Upgrading Nextcloud can easily be done by simply following the instructions in the site when an upgrade is due. But it can be a good idea to opt for the CLI updater when prompted. To do this, open a shell, navigate to the Nextcloud installation location, then check for upgrades.

You should repeat the check for upgrades afterwards, because if the installation is quite old, one upgrade can make further upgrades available.

If there are any problems, try running it again; it may have removed a problem extension and will succeed on the second run. After successfully running the upgrade, turn maintenance mode off (occ maintenance:mode --off). To upgrade Collabora, simply stop the Docker container (and be sure to stop any other containers using port 9980), pull the collabora/code image, and if a new image version was retrieved, delete the old container and image, and start the new one with the same docker run command used in the installation. For some reason it sometimes takes an hour or so before you can start editing documents with it in Nextcloud, so if it's not working after the upgrade, come back to it after a couple of hours and check then.

Changing domain name

To change the domain name of a Nextcloud installation that is running Collabora, there are five places that need to be updated.

Developing a custom add-on

I'd like to make an add-on that caters for some of the things we need to do but that we haven't found within the current selection of add-ons. The idea is to be able to create custom record types and instances of those records. Creating a new record type would involve defining a list of fields and their data types (which in turn requires a list of such types and their input/output contexts), and designing a form for creating or modifying instances of those records.
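To make the idea more concrete, here is a minimal Python sketch of that data model: a record type is a named list of fields, each field has a data type, and each data type knows how to turn form input into a stored value. Everything here (the class names, the three data types and the parse method) is hypothetical and only illustrates the design, not any existing Nextcloud API.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date
from typing import Callable

# Hypothetical data-type registry: each type turns a form string into a value.
DATA_TYPES: dict[str, Callable[[str], object]] = {
    "text": str,
    "integer": int,
    "date": date.fromisoformat,
}


@dataclass
class Field:
    name: str
    data_type: str
    required: bool = False


@dataclass
class RecordType:
    name: str
    fields: list[Field] = field(default_factory=list)

    def parse(self, form: dict[str, str]) -> dict[str, object]:
        """Validate submitted form values and convert them to typed values."""
        record: dict[str, object] = {}
        for f in self.fields:
            raw = form.get(f.name, "").strip()
            if not raw:
                if f.required:
                    raise ValueError(f"{f.name} is required")
                continue
            record[f.name] = DATA_TYPES[f.data_type](raw)
        return record
```

Keeping the data types in a registry means adding a new type (say, a file upload) only touches that table, while the form for a record type can be generated by walking its field list.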

Troubleshooting

Check the "Office" item in the "Administration" sidebar of your Nextcloud's settings, which tells you whether the server-side Collabora is accessible. You can also manually check the info response from /hosting/capabilities.

Tips & Tricks
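As a tip for the troubleshooting step above, the capabilities check can be scripted. This Python sketch only builds the URL and parses whatever JSON comes back; the exact fields vary between Collabora versions, so it deliberately doesn't assume any of them, and the function names are hypothetical.

```python
import json
import urllib.request


def capabilities_url(office_base_url: str) -> str:
    """Append the capabilities path to the Collabora base URL."""
    return office_base_url.rstrip("/") + "/hosting/capabilities"


def fetch_capabilities(office_base_url: str, timeout: float = 10.0) -> dict:
    """Fetch and parse the capabilities JSON; raises on HTTP errors or non-JSON."""
    with urllib.request.urlopen(capabilities_url(office_base_url), timeout=timeout) as resp:
        return json.load(resp)
```

If fetch_capabilities("https://office.organicdesign.host") raises instead of returning a dictionary, the problem is most likely in the Nginx reverse-proxy block or the container rather than in Nextcloud itself.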

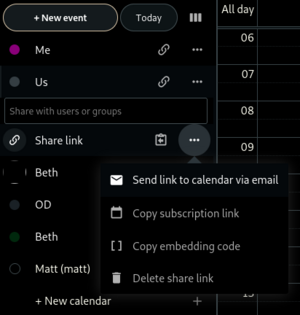

Changing default preferences

The online version of LibreOffice has a very simplified user interface with very few adjustable options, but since it uses the actual LibreOffice code internally, those options can still be set from within the server configuration (this method does not work if you're running LOOL from the Docker image). The configuration file containing the preferences is /etc/coolwsd/coolkitconfig.xcu, which is a very simplified version of the desktop LibreOffice configuration file usually found in ~/.config/libreoffice/4/user/registrymodifications.xcu. We can find the preferences we want to adjust in the desktop version of the file and copy those same entries across to the LOOL version. Note that if you're running the Docker container rather than a native installation, you can copy files out of and back into the container using the docker cp command. For example, I wanted to disable the auto-correct functionality that fixed the capitalisation of the first two letters of words. In the desktop version, disabling this functionality from the interface updates a corresponding entry in the configuration. I then added this row to the /etc/coolwsd/coolkitconfig.xcu file, and after restarting the service, LOOL recognised the new preference and disabled the functionality properly.

Using cell protection

You may have noticed in the spreadsheet that the "Cell Protection" tab is still available in the "Format Cells" dialog box, but you can't use it because the "Protect Sheet" option is not available in the "Tools" menu. You can, however, download your sheet, enable cell protection using the offline version of LibreOffice, and then upload it again, and you'll find that the cell protection works as it should. To modify or unprotect a protected cell, you'll need to go back to the offline version, though.

Custom CSS

I like to enable the "Custom CSS" app, which gives you the ability to add your own CSS rules in the theming section of settings.
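Going back to the default-preferences tip, finding the right entry in the desktop registrymodifications.xcu can be done with a few lines of Python. This sketch lists every item containing a given property name, together with its path and value, so it can be copied into coolkitconfig.xcu. The property TwoCapitalsAtStart under /org.openoffice.Office.Common/AutoCorrect/Options used in the test is my assumption for the auto-correct option described above, so confirm it against your own file; find_prefs is a hypothetical helper.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

OOR = "http://openoffice.org/2001/registry"  # the oor: namespace used in .xcu files


def find_prefs(xcu_text: str, prop_name: str) -> list[tuple[str, str, str]]:
    """Return (item path, property name, value) for every matching property."""
    root = ET.fromstring(xcu_text)
    found = []
    for item in root.iter("item"):
        path = item.get(f"{{{OOR}}}path", "")
        for prop in item.iter("prop"):
            if prop.get(f"{{{OOR}}}name") == prop_name:
                found.append((path, prop_name, prop.findtext("value", default="")))
    return found
```

Toggling an option in desktop LibreOffice and then searching for the changed property name is a quick way to discover which entry to copy across.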
Sharing a Nextcloud calendar with Google calendar

The intuitive way of sharing a Nextcloud calendar, using the WebDAV link, does not work with Google Calendar (or Thunderbird, for that matter). But generating an export link works in both cases, although Google is extremely slow to synchronise external calendars, taking 1-2 days.

The link will now be in the clipboard ready for pasting. This link is not the usual DAV link, and the data returned by it is acceptable to Google and Thunderbird calendars.
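Before adding the link to Google or Thunderbird, it's worth checking that it really returns iCalendar (RFC 5545) data rather than a WebDAV response. This Python sketch fetches a URL and does two cheap sanity checks; the function names are hypothetical, and the checks are deliberately shallow rather than a full iCalendar parser.

```python
import urllib.request


def fetch_text(url: str, timeout: float = 30.0) -> str:
    """Download the export link's body as UTF-8 text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def looks_like_icalendar(body: str) -> bool:
    """True if the body is wrapped in BEGIN:VCALENDAR ... END:VCALENDAR."""
    lines = [line.strip() for line in body.strip().splitlines()]
    return bool(lines) and lines[0] == "BEGIN:VCALENDAR" and lines[-1] == "END:VCALENDAR"


def count_events(body: str) -> int:
    """Count the VEVENT components in the calendar."""
    return sum(1 for line in body.splitlines() if line.strip() == "BEGIN:VEVENT")
```

An XML multistatus body (the usual WebDAV response) fails the first check immediately, which is a quick way to tell that the wrong kind of link was copied.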

To use the link to retrieve your calendar into a Google calendar:

Errors and issues

Memcache \OC\Memcache\APCu not available

This error started showing up when cron.php was executed. It can be mitigated by enabling APCu for the call, by adding --define apc.enable_cli=1 to the php command in the crontab.

Diaspora

Posted by Nad on 1 January 2011 at 10:12

This post has the following tags: Libre software

Diaspora lets you sort your connections into groups called aspects. Unique to Diaspora, aspects ensure that your photos, stories and jokes are shared only with the people you intend.
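At its core, the aspect idea is a mapping from aspect names to sets of contacts, where a post's audience is the union of the aspects it is shared with. The minimal Python sketch below shows that data model. The class and method names are hypothetical, and this is not how Diaspora itself stores aspects.

```python
from dataclasses import dataclass, field


@dataclass
class Account:
    # aspect name -> handles of the contacts sorted into it (a contact may be in several)
    aspects: dict[str, set[str]] = field(default_factory=dict)

    def add_contact(self, handle: str, aspect: str) -> None:
        self.aspects.setdefault(aspect, set()).add(handle)

    def audience(self, *aspect_names: str) -> set[str]:
        """Contacts allowed to see a post shared with the given aspects."""
        people: set[str] = set()
        for name in aspect_names:
            people |= self.aspects.get(name, set())
        return people
```

A post shared only with "family" is then visible to nobody outside that set, even if they sit in another of your aspects.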

You own your pictures, and you shouldn’t have to give that up just to share them. You maintain ownership of everything you share on Diaspora, giving you full control over how it's distributed. Diaspora makes sharing clean and easy – and this goes for privacy too. Inherently private, Diaspora doesn’t make you wade through pages of settings and options just to keep your profile secure.

See also

OpenCobalt

Posted by Nad on 13 July 2008 at 06:34

This post has the following tags: Libre software

The Croquet Project is an international effort to promote the continued development of Croquet, a free software platform and a P2P network operating system for developing and delivering deeply collaborative multi-user online applications. Croquet was specifically designed to enable the creation and low-cost deployment of large scale metaverses.

Implemented in Squeak (an open source, community-developed implementation of Smalltalk), Croquet features a network architecture that supports communication, collaboration, resource sharing and synchronous computation among multiple users. It also provides a flexible framework in which most user interface concepts can be rapidly prototyped and deployed to create powerful and highly collaborative multi-user 2D and 3D applications and simulations. Applications created with the Croquet Software Developer's Kit (SDK) can be used to support highly scalable collaborative data visualization, virtual learning and problem-solving environments, 3D wikis, online gaming environments (MMORPGs), and privately maintained/interconnected multi-user virtual environments.

Cobalt
Cobalt (download page) is an emerging multi-institutional community software development effort to deploy an open source, production-grade metaverse browser/toolkit application built using the Croquet SDK. Cobalt was made available under the Croquet license as a pre-alpha build in March 2008.

Open Cobalt virtual machine
The Open Cobalt virtual machine is essentially the same as the Squeak virtual machine. It acts as an interface between Squeak code and the microprocessor. It's written in Slang, a functional subset of Smalltalk that can be translated into standard C; Squeak uses C as a cross-platform equivalent of assembly language. Since Slang is a subset of Smalltalk, the Squeak virtual machine can be edited and debugged by running it in Squeak itself. The virtual machine can also be extended with plug-ins written in either C or Slang, which Squeak uses for things such as playing media files and supporting its built-in public key encryption.
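One common way to get the synchronous multi-user computation that Croquet's network architecture supports is replicated computation. Every participant runs the same deterministic code, and messages are executed in one agreed order. The toy Python sketch below illustrates this. It is not Croquet's Squeak implementation. The single `Router` that stamps messages and the `add`/`mul` operations are assumptions made for illustration.

```python
import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Message:
    timestamp: int                          # the only field used for ordering
    op: str = field(compare=False)
    amount: int = field(compare=False)


class Router:
    """Hypothetical single ordering point that stamps each message with the next time."""

    def __init__(self) -> None:
        self.clock = 0

    def stamp(self, op: str, amount: int) -> Message:
        self.clock += 1
        return Message(self.clock, op, amount)


class Replica:
    """One participant's copy of the shared object."""

    def __init__(self) -> None:
        self.value = 0
        self.next_time = 1
        self.pending: list[Message] = []

    def receive(self, msg: Message) -> None:
        heapq.heappush(self.pending, msg)
        # Execute strictly in timestamp order; early arrivals wait in the heap.
        while self.pending and self.pending[0].timestamp == self.next_time:
            self._execute(heapq.heappop(self.pending))
            self.next_time += 1

    def _execute(self, msg: Message) -> None:
        if msg.op == "add":
            self.value += msg.amount
        elif msg.op == "mul":
            self.value *= msg.amount
        else:
            raise ValueError(f"unknown operation {msg.op!r}")
```

Suppose one replica receives "add 2, mul 5, add 1" in order and another receives them in reverse. Both end at 11, because each message waits for its turn. Applying them in arrival order would have left the second replica at 7.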
Squeak

Our plans for a 3D environment
We think the ultimate interface is the Geoscope, and Croquet perfectly fits the requirements that the Geoscope idea has for a 3D environment, such as being OO, prototype-based, P2P, self-contained and changeable from within. To begin using a new environment in our system, we must first incorporate its installation into our packages. Next we must develop a means of having the textual content available from both the wiki and the 3D environment. Having snapshots of the 3D environment maintained in the wiki would also be good.

Running on Ubuntu
I downloaded the source from the download page, unpacked it and ran the Croquet.sh file from a shell window (so I would see any output messages). First, it failed to find the audio and asked for OpenAL, so I downloaded the OpenAL source, unpacked it, configured it and ran make and make install, but it still failed to find the audio. Second, when I drag the home page (the Cobalt ball) or any other demo onto the workspace, it freezes saying "waiting for connection" forever. The shell shows a message saying that libuuid.so.1 was not found, but this library is definitely installed.
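The post doesn't say why the VM can't see libuuid.so.1. One possibility, which is an assumption and not confirmed here, is an architecture mismatch. An example would be a 32-bit VM binary on a 64-bit system where only the 64-bit library is installed. The small Python check below shows what the dynamic loader can actually open from a given process. The function name is hypothetical.

```python
import ctypes
import ctypes.util
import platform


def check_shared_library(soname: str = "libuuid.so.1", short_name: str = "uuid") -> dict:
    """Report whether the dynamic loader can open `soname` from this process."""
    info = {
        # Bitness of this Python interpreter, e.g. "64bit"; a 32-bit program needs a 32-bit library.
        "python_arch": platform.architecture()[0],
        # What the library search finds for the short name (None if nothing).
        "found": ctypes.util.find_library(short_name),
    }
    try:
        ctypes.CDLL(soname)
        info["loadable"] = True
    except OSError as exc:
        info["loadable"] = False
        info["error"] = str(exc)
    return info
```

The library might load here while the VM still complains. In that case, compare the architecture of the VM binary with that of the library, for example using the `file` command on both.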

To check out

See also

Distributed TiddlyWiki

Posted by Infomaniac on 17 November 2011 at 03:15

This post has the following tags: Libre software

Frustrated with the progress of Diaspora, I kept being nagged by an idea: why can't a TiddlyWiki be distributed via BitTorrent? I've been wanting to start a blog that is serverless, and I thought there must be a way to do it using existing technologies. The foundation of my blog would be some implementation of TiddlyWiki, probably mGSD (previously known as MonkeyGTD or D3 ("D-Cubed")), which I tried before and found to be quite impressive. TiddlyWiki is essentially an index HTML page (it uses the Single Page Application architecture) loaded with ingenious JavaScript that loads flat data files called tiddlers. As I understand it, every time a new page is created it is stored in a new tiddler, and every time a page is edited a new tiddler file is created that supersedes the previous version rather than replacing it (the application only needs create access, not modify or delete). Thus, each page has a version history. It is the index, or base file, and its collection of tiddlers that need to be distributed.

Distribution

GitHub?
My first idea was to distribute this collection of files using GitHub, which deals nicely with files added to the collection. But one must have a GitHub account and set up crypto keys to use it, which is not really easy for the marginally technical person. Worse, after I upload or sync my files to GitHub, anyone who wants to read my wonderful blog/wiki must also set up a GitHub account, which is really expecting too much of the average reader. Not only that, but search engines will only find this wikiblog as a project directory on GitHub, with no easy way for someone to discover what it's all about. According to Git is the next Unix:

GitHub offers gists, issues and a wiki, though it's not clear that these are part of the DRCS (they never seem to be copied to the local file system with the source); maybe they are stored only on the website. In any case, Git can be used as a distributed datastore for a wiki, and some people have already thought of the idea:

Git-wiki
Git-wiki is a wiki that relies on Git to keep pages' history and on Sinatra (a Ruby DSL for quickly creating web applications with minimal effort) to serve them.
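Git's storage model fits the append-only tiddler scheme well. Each file version becomes an immutable blob named by a hash of its content, and old blobs are never rewritten. The minimal Python sketch below assumes one blob per tiddler version. `blob_id` follows Git's real blob hashing: SHA-1 over a `blob <size>` header, a NUL byte, and then the content. `TiddlerStore` and its methods are hypothetical. The per-title history list is the only mutable part of the store.

```python
import hashlib


def blob_id(content: bytes) -> str:
    """Git-style object id for a blob: SHA-1 of b'blob <size>\\0' followed by the content."""
    header = f"blob {len(content)}\0".encode()
    return hashlib.sha1(header + content).hexdigest()


class TiddlerStore:
    """Append-only store: an edit adds a new object and never modifies or deletes old ones."""

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}       # object id -> content, immutable once written
        self.history: dict[str, list[str]] = {}   # title -> object ids, oldest first

    def save(self, title: str, text: str) -> str:
        data = text.encode("utf-8")
        oid = blob_id(data)
        self.objects.setdefault(oid, data)        # create-only: an existing id is never overwritten
        self.history.setdefault(title, []).append(oid)
        return oid

    def latest(self, title: str) -> str:
        return self.objects[self.history[title][-1]].decode("utf-8")

    def versions(self, title: str) -> list[str]:
        return [self.objects[oid].decode("utf-8") for oid in self.history[title]]
```

`blob_id(b"")` gives Git's well-known empty-blob id `e69de29bb2d1d6434b8b29ae775ad8c2e48c5391`, which is a quick way to confirm the hashing matches Git's.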

Cappuccino GitHub Issues
Among the Cappuccino demos is a project called GitHub Issues:

BitTorrent
Far more people know how to use a BitTorrent client, at least for downloading, than know how to use GitHub. A very small subset of BitTorrent users know how to create a torrent file and upload it to a tracker on a torrent index site. Not only that, but trackers and torrent files are quickly being replaced by trackerless sites using magnet links, and many BitTorrent clients can now distribute and search for magnet links on a DHT (and, for the Vuze client, a special messaging network called PEX). The advantage of magnet links is that there is no .torrent file to download; a magnet link is simply a URL specifying the hash of the file and its title. Indexing sites that use magnet links don't have to store .torrent files and use less bandwidth. Even better, magnet links can be stored in the Mainline DHT, and all major BitTorrent clients can search the distributed database directly, without the need for an indexing site like TPB. Their only downside is that the links are long and ugly, and not easy to read or share. The average web surfer or blogger does not understand all this, and the amount of maintenance needed to run a site this way is significant. And merely making a torrent file available does not a site make: unless readers know where to look, they will never find it, never download the torrent, and never read the site. Also, since a torrent is a static file that describes static content, it is not possible to add new tiddlers to an existing torrent. Adding one changes the hash of the file collection and necessitates creating a whole new torrent and somehow revoking the old version. This problem also applies to magnet links, since they are a hash of the entire distribution, not its individual files.
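The hash in a BitTorrent magnet link is the infohash. This is the SHA-1 of the bencoded "info" dictionary, which lists every file's path and length plus the SHA-1 of each piece. The minimal Python sketch below shows why adding one tiddler produces a completely different magnet link. It assumes a multi-file torrent and an arbitrary 16 KiB piece length. The helper names are hypothetical.

```python
import hashlib
from urllib.parse import quote


def bencode(value) -> bytes:
    """Encode ints, strings/bytes, lists and dicts in BitTorrent's bencoding."""
    if isinstance(value, int):
        return b"i%de" % value
    if isinstance(value, str):
        value = value.encode("utf-8")
    if isinstance(value, bytes):
        return b"%d:%s" % (len(value), value)
    if isinstance(value, list):
        return b"l" + b"".join(bencode(v) for v in value) + b"e"
    if isinstance(value, dict):
        # Keys are byte strings, sorted in raw byte order.
        items = sorted(
            ((k.encode("utf-8") if isinstance(k, str) else k, v) for k, v in value.items()),
            key=lambda kv: kv[0],
        )
        return b"d" + b"".join(bencode(k) + bencode(v) for k, v in items) + b"e"
    raise TypeError(f"cannot bencode {type(value).__name__}")


def info_dict(name: str, files: dict[str, bytes], piece_length: int = 16384) -> dict:
    """Build a multi-file 'info' dictionary; `files` maps 'dir/file' paths to contents."""
    paths = sorted(files)
    data = b"".join(files[p] for p in paths)  # pieces span file boundaries
    pieces = b"".join(
        hashlib.sha1(data[i:i + piece_length]).digest() for i in range(0, len(data), piece_length)
    )
    return {
        "name": name,
        "piece length": piece_length,
        "pieces": pieces,
        "files": [{"length": len(files[p]), "path": p.split("/")} for p in paths],
    }


def magnet_link(info: dict) -> str:
    infohash = hashlib.sha1(bencode(info)).hexdigest()
    return f"magnet:?xt=urn:btih:{infohash}&dn={quote(info['name'])}"
```

Building the link for a site with `index.html` and one tiddler, and then again after adding a second tiddler, gives two unrelated infohashes. The old link no longer describes the current collection.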
This means that it would be necessary to create a new .torrent file or magnet link for every single tiddler, and somehow associate it with the rest of the dynamic distribution (which is not a challenge for GitHub), perhaps using a common namespace in the magnet links sent to the DHT. But a plain BitTorrent client won't do: the reader would continually have to find a way to download the newest tiddlers to keep their mirror of the site up to date. There has to be an easier way, and to begin with, what is needed is browser integration with BitTorrent.

Vuze
Vuze (formerly known as Azureus) is essentially a BitTorrent client with a built-in browser. I don't know the specifics of its data structure, but it is essentially a multi-page site whose content indexes videos and their metadata, and then efficiently loads videos (apparently pre-fetching previews on the current content page). By default Vuze uses an incompatible DHT, but a plugin is available that integrates it with the Mainline DHT. It can also use magnet links. However, Vuze is proprietary, and although it is possible to upload videos, it is not clear (to me) how one goes about creating HTML content for the Vuze network, if that is even possible. But the concept shows that it is possible, with existing technology, to create a distributed site, or at least distributed video content that is indexed in the DHT.

Opera
Opera is another proprietary browser known for its speed, almost as fast as Google Chrome. It's innovative in a quirky sort of way; for example, it has desktop widgets that are familiar to Mac and Windows 7 users. It does not support all the nifty plugins and extensions people have come to expect on Firefox, but the more important ones, like NotScript, are available, and there are many cool extensions, for example Facebook integration and real-time site translation. Opera also includes a mail client; lack of integrated email support is a common complaint from TiddlyWiki users using it for GTD.
Another nicety is online bookmark synchronising, accessible from anywhere. Version 9 introduced a BitTorrent client. At first most people laughed at the idea, unable to imagine any possible use for a built-in BitTorrent client, but downloading a torrent really is transparent and hassle-free for those who are still BitTorrent newbies. It is even possible to integrate Opera with Transmission. Opera can also use magnet links, although some searching around the web is often required to get it to work. It also claims to be faster and to use less memory for pages that are heavy on JavaScript, a plus for our JavaScript TiddlyWiki. Lastly, Opera Unite is a built-in personal web server that lets the user host content, share files, create and share photo albums, use live messenger chat and webcam, and even stream their media library. Unite includes a community proxy that forwards your subdomain to your Unite server, or you can use a dynamic DNS plugin. Pretty attractive and powerful, if you don't mind the proprietary technology. All in all, Opera has almost everything needed to create a personal, self-hosted site and seamlessly download BitTorrent files. It seems easy enough to host a TiddlyWiki using Unite, but this is not distributed; it depends on Opera's proxy service. What is missing is a way to manage new tiddlers, calculate their hashes, create magnet links, and upload them into the Mainline DHT. Opera apparently has no mechanism to create new torrents at all.

If Unite-tracker works with the current version of Opera, it provides almost all the nuts and bolts needed to close the upload-download cycle of distributed web content, as long as an accessible page (primarily the base index of the TiddlyWiki) provides a link that references the index generated by the tracker. However, Opera is, at the end of the day, proprietary. What is needed are open source solutions that can be maintained and improved.

Firefox Plugins
Magnet Catcher identifies torrent links on a page and automatically creates and adds a magnet link next to each torrent link. Simply click the magnet icon to get the magnet link. Magnet Catcher also does away with the need to click on a torrent description in a search result page in order to download the torrent (apparently it fetches the metadata from the torrent file in the background). The magnet links are displayed directly on the browse pages next to the torrent titles. Magnet Catcher works on almost any website.

Resources and Addons To Make BitTorrent Magnet Life Easier
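Magnet Catcher's first step, spotting torrent links on a page, can be sketched with Python's standard HTML parser. This is not the plugin's code, and the class name is hypothetical. It only collects links whose path ends in `.torrent`, plus any magnet links already present. It leaves out the part that downloads each .torrent file to compute its magnet link.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class TorrentLinkFinder(HTMLParser):
    """Collect .torrent links (made absolute) and existing magnet links from <a href> tags."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.torrents: list[str] = []
        self.magnets: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if not href:
            return
        if href.startswith("magnet:"):
            self.magnets.append(href)
        elif urlparse(href).path.lower().endswith(".torrent"):
            # Resolve relative links against the page address
            self.torrents.append(urljoin(self.base_url, href))
```

Calling `finder.feed(page_html)` on a page fills `finder.torrents` and `finder.magnets`. A plugin would then fetch each torrent's metadata to add the missing magnet links.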

All of the above plugins, available for Firefox, suggest that it is currently possible to create a distributed wiki with very little modification and integration of code.

BitTorrent Streaming - the future
Sneak peek: BitTorrent expands live streaming tests - BitTorrent Live is a whole new P2P protocol to distribute live streamed data across the internet without the need for infrastructure and with a minimum of latency. The inventor's efforts included writing a completely new P2P protocol from scratch; the BitTorrent protocol itself, he said, simply introduced too much latency to be a viable live streaming solution. The tests have so far (as of Oct 2011) been restricted to “simple pre-recorded content loops to test latency and audio/visual sync.” I tried it, and either it didn't work on Ubuntu or I did not know how to use it properly, because there is no documentation. It is likely to be commercial closed source. Still, it's interesting.

Mechanism for updating tiddler index via RSS subscription

As a publisher it takes a source of content (either a local file or a webpage) and makes a copy of this available as a torrent. It also makes a descriptor to this available for you to share with other people so they can subscribe to the content and get their own copy of it. The descriptor includes a public key so that when subscribers download the content they are safe in assuming that it came from the publisher. The plugin periodically scans the shared resource and will make a newer copy available to subscribers if it changes. As a subscriber it takes a publication descriptor (for example a magnet link to the publish descriptor created by the publisher) and periodically downloads the latest content associated with it. It makes the content available via a local http port so the subscriber can easily consume it.
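The publish/subscribe cycle in the quote can be sketched in a few lines of Python. The sketch assumes the descriptor carries a version counter and a SHA-256 content hash. The class names are hypothetical, and the torrent transfer is replaced by reading the publisher's content directly. The public-key signature is left out because Python's standard library has no signing API. In a real setup, the subscriber would check the descriptor's signature before trusting the hash.

```python
import hashlib


class Publisher:
    """Re-publishes a resource only when its content hash changes (signing omitted)."""

    def __init__(self) -> None:
        self.version = 0
        self.digest = None
        self.content = b""

    def scan(self, content: bytes) -> bool:
        """Called periodically; returns True if a new version was published."""
        digest = hashlib.sha256(content).hexdigest()
        if digest == self.digest:
            return False
        self.digest, self.content = digest, content
        self.version += 1
        return True

    def descriptor(self) -> dict:
        return {"version": self.version, "sha256": self.digest}


class Subscriber:
    def __init__(self) -> None:
        self.version = 0
        self.content = b""

    def poll(self, publisher: Publisher) -> bool:
        """Fetch the latest content if the descriptor advertises a newer version."""
        desc = publisher.descriptor()
        if desc["version"] <= self.version:
            return False
        data = publisher.content  # stands in for downloading the torrent
        if hashlib.sha256(data).hexdigest() != desc["sha256"]:
            raise ValueError("content does not match descriptor")
        self.version, self.content = desc["version"], data
        return True
```

Rescanning unchanged content publishes nothing new, so subscribers polling on a timer only download when the shared resource has actually changed.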

Tribler released a beta of its BitTorrent client with a new feature that the researchers behind the project have dubbed P2P moderation. The idea in a nutshell is that users can aggregate channels and content and distribute them through the DHT. From the official announcement: "In Tribler V5.2 every user can start their own "Channel" to publish torrents. When people like your torrents you become popular and essentially become the owner of an Internet TV channel. You can moderate this RSS-like stream of torrents. This feature is designed to stop the flow of spam in P2P bittorrent, without the requirement of any server." Channels can be pre-populated with an existing RSS feed, or personally aggregated by manually adding torrent files. The client lists a number of popular channels and also offers the option to search for channels. However, the search seems to be restricted to the actual channel name, which makes it impossible to find a channel by searching for the content you're looking for. Users also can't add any description, tags or artwork to their channels. Add to this the fact that I couldn't even find an easy way to rename a channel, and you'll see why this is still a pretty experimental feature. The idea itself of course isn't really new: the original eDonkey client already included the ability to publish collections of files, and Vuze users have been able to publish distributed feeds through the Distributed Database Trusted Feed plug-in and the RSS Feed Generator plug-in since 2008.

Nodewiki
Nodewiki is wiki software created using Node.js. It uses Redis for data storage, with the redis node client to talk to Redis. Page markup is written using Showdown, a Markdown implementation written in JavaScript.

fanout.js
fanout.js is a simple and robust fan-out pub/sub messaging server for node.js.
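fanout.js itself is a network server for node.js. The fan-out pattern it is named after can be sketched in-process in Python: every subscriber to a channel receives every message published on it. This illustrates the pattern only. It is not fanout.js's protocol or API, and the class name is hypothetical.

```python
from collections import defaultdict
from typing import Callable


class Fanout:
    """In-process fan-out pub/sub: each message goes to every subscriber of its channel."""

    def __init__(self) -> None:
        self.subscribers: dict[str, list[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        self.subscribers[channel].append(callback)

    def unsubscribe(self, channel: str, callback: Callable[[str], None]) -> None:
        self.subscribers[channel].remove(callback)

    def publish(self, channel: str, message: str) -> int:
        """Deliver `message` to all current subscribers; return how many received it."""
        callbacks = list(self.subscribers.get(channel, []))  # copy so callbacks may unsubscribe
        for callback in callbacks:
            callback(message)
        return len(callbacks)
```

For a distributed wiki, a channel per wiki could announce new tiddlers to every reader who is listening.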

Vuze

Transmission

Posted by Nad on 10 September 2008 at 11:35

This post has the following tags: Libre software

Transmission is the default P2P torrent downloading application that comes with Ubuntu.

Starting and stopping on schedule
To run Transmission from the crontab (/etc/crontab), it must be associated with a display, as in the following example. The first line starts Transmission at 2 am, and the second stops it at 8 am. Edit the crontab with sudo nano /etc/crontab, and add the following lines (replace username with your Linux username). Now press CTRL + O and then hit return to save your changes to the file.

Quit option removed
The --quit option has been removed because the preferred method of running Transmission in the background is as a daemon. See their wiki for more information.

Running on a server
transmission-daemon is a daemon-based Transmission session that can be controlled via RPC commands from Transmission's web interface or transmission-remote. transmission-remote is a remote control utility for Transmission and transmission-daemon. By default, transmission-remote connects to the Transmission session at localhost:9091; other sessions can be controlled by specifying a different host and/or port.
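With the daemon as the preferred way to run Transmission in the background, the 2 am to 8 am schedule could also be enforced over its RPC interface instead of starting and killing the GUI. The minimal Python sketch below makes several assumptions: the default localhost:9091 session, the standard /transmission/rpc endpoint, and no RPC authentication. It handles the session-id handshake, where the daemon answers a request that lacks a valid id with HTTP 409 and an X-Transmission-Session-Id header that must be sent back. The function names are hypothetical.

```python
import json
import urllib.error
import urllib.request
from typing import Optional

# Default session from the post (localhost:9091); /transmission/rpc is Transmission's RPC path.
RPC_URL = "http://localhost:9091/transmission/rpc"


def in_download_window(hour: int, start: int = 2, end: int = 8) -> bool:
    """True if hour is in [start, end); also handles windows that wrap past midnight."""
    if start <= end:
        return start <= hour < end
    return hour >= start or hour < end


def rpc(method: str, arguments: Optional[dict] = None, url: str = RPC_URL) -> dict:
    """Send one RPC request, retrying once with the session id from a 409 reply."""
    body = json.dumps({"method": method, "arguments": arguments or {}}).encode("utf-8")
    session_id = ""
    for _ in range(2):
        request = urllib.request.Request(
            url,
            data=body,  # supplying data makes this a POST
            headers={"X-Transmission-Session-Id": session_id, "Content-Type": "application/json"},
        )
        try:
            with urllib.request.urlopen(request, timeout=10) as response:
                return json.load(response)
        except urllib.error.HTTPError as err:
            if err.code != 409:
                raise
            session_id = err.headers.get("X-Transmission-Session-Id", "")
    raise RuntimeError("the daemon did not accept the session id")
```

Run hourly from cron, a call like `rpc("torrent-start" if in_download_window(datetime.now().hour) else "torrent-stop")` would start or stop torrents. Leaving out "ids" is expected to apply the method to every torrent, but check that against the RPC documentation for your Transmission version.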

Tonika

Posted by Infomaniac on 26 June 2011 at 16:13

This post has the following tags: Libre software

Organic security: A (digital) social network which, by design, restricts direct communication to pairs of users who are friends possesses many of the security properties (privacy, anonymity, deniability, resilience to denial-of-service attacks, etc.) that human societies implement organically in daily life. This is the only known decentralized network design that allows open membership while being robust against a long list of distributed network attacks. We call a digital system with such a design an organic network, and the security that it attains for its users organic security. Organic networks are extremely desirable in the current Internet climate; however, they are hard to realize because they lack long-distance calling. Tonika resolves just this issue.

Long-distance calling: At its core, Tonika is a routing algorithm for organic networks that implements long-distance calling: establishing indirect communication between non-friend users. Tonika is robust (low-latency, high-throughput connectivity is achieved in the presence of significant link failures), incentive-friendly (nodes work on behalf of others as much as others work for them), efficient (the effective global throughput is close to optimal for the network's bandwidth and topology constraints) and real-time concurrent (all of the above are achieved in a low-latency, real-time manner in the presence of millions of communicating parties). Some application areas: Internet (bandwidth) neutrality. Freedom, no censorship and no bias of speech on the Internet. Scalable open Internet access in all countries. User ownership of data and history in social applications. Cooperative cloud computing without administration. Etc. Robust: more robust than most other P2P networks, with a strong defence against the Sybil attack.

Installing on Debian/Ubuntu
I haven't been able to install it so far: it needs to be compiled from source, and it's written in the obscure Go language, which is not noob-friendly. I installed Go from these instructions, which resulted in a fatal error but seemed to pass the execution test anyway, so I carried on with the Tonika installation by checking it out from source and following the instructions in its README file. It gave many errors with path and environment variable problems; I fixed the first few and then decided it's too alpha to get into just yet and bailed.
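Long-distance calling between non-friends means relaying through a chain of friends. Tonika's actual routing algorithm isn't described here, so the sketch below substitutes plain breadth-first search. It finds the shortest friend chain between two users and can route around failed links. The graph, user names and function names are hypothetical.

```python
from collections import deque
from typing import Iterable, Optional


def make_graph(pairs: Iterable[tuple[str, str]]) -> dict[str, set[str]]:
    """Friendship is mutual, so add each pair in both directions."""
    graph: dict[str, set[str]] = {}
    for a, b in pairs:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def friend_route(graph: dict[str, set[str]], src: str, dst: str,
                 down: frozenset = frozenset()) -> Optional[list[str]]:
    """Shortest chain of friends from src to dst, skipping failed links listed in `down`."""
    if src == dst:
        return [src]
    parent: dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, ()):
            if nxt in parent or frozenset((node, nxt)) in down:
                continue
            parent[nxt] = node
            if nxt == dst:
                # Walk back through parents to rebuild the path
                path = [dst]
                while parent[path[-1]] is not None:
                    path.append(parent[path[-1]])
                return path[::-1]
            queue.append(nxt)
    return None  # no chain of working friend links exists
```

Every hop in the returned path is a direct friendship, so messages only ever travel between friends, which is the property organic security relies on.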

See also