blog

Scuttlebutt | |||

| Posted by Nad on 28 June 2018 at 21:29 | |||

|---|---|---|---|

This post has the following tags: Libre software

| |||

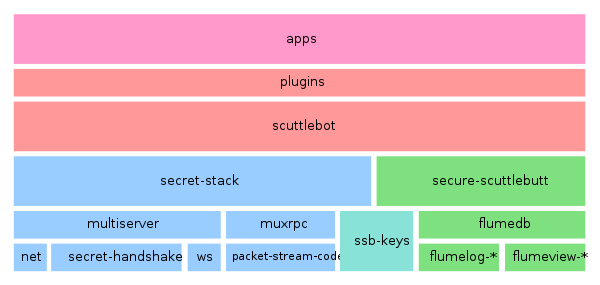

Secure Scuttlebutt (SSB) is a peer-to-peer append-only messaging system on top of which a variety of networking applications can be built, such as the Patchwork social network. Scuttlebutt is able to operate offline and then sync when in the presence of another peer, making it perfect for meshing environments, and it even supports synchronisation via removable media to work as a "sneakernet". The word "scuttlebutt" is the equivalent of "watercooler" in an office, but applies to boats and ships.

== Pubs ==

Pubs are permanently connected peers in the Scuttlebutt network with the job of routing messages and storing them while recipients are offline. The name "pub" was used since they play a similar role to pubs in villages. Once you have scuttlebot running (see next section) you can configure it to be a pub simply by creating invites to it. See how to set up a pub for more detail. A list of some of the pubs can be found here, and I set up ssb.organicdesign.pub to learn more about the process :-)

== Updating the pub Docker image ==

First pull the latest images with docker pull. Then, if an upgrade happened, get the list of currently running containers and their images with docker ps, stop the current sbot container with docker stop <container-id>, delete the container with docker rm <container-id>, then delete the old image that container was using with docker rmi <image-id>. Then you can start the new image using the same docker run command you used to create the container, or using your create-sbot script which contains that command.

== Scuttlebot ==

The easiest way to use Scuttlebutt is by running an end-user application like the Patchwork desktop client, or the Manyverse Android client, which come pre-packaged with the necessary dependencies. But to run it on a server you need to install the scuttlebot (sbot) service. If you want to safely run it on an existing server and avoid version conflicts or other dependency issues, you can use the Docker image as described here. The default port that the pub will run on is 8008. To run it natively you can install the latest version of nodejs or use an existing version if you have one.
== sbot commands ==

If you're running the Docker image, you can't call the sbot daemon directly; you start it through docker run instead. You may also want to add a CPU limit, as the container can get quite hungry, for example an option limiting it to 25%.

Note: it's a good idea to put the start command into a create-sbot script so you can run it easily after upgrades etc.

To be able to call the sbot command, you can create a script that calls the Docker image with the passed arguments.
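The Docker commands that belonged to this section did not survive on the page, so the following is only a sketch of what a create-sbot script and an sbot wrapper might look like. The image name, the host data directory and the /home/node/.ssb mount point are assumptions (the /home/node path is borrowed from the troubleshooting notes), and it also assumes the image passes its arguments through to sbot. The --cpus 0.25 option is Docker's way of limiting a container to a quarter of one CPU. The DOCKER variable exists only so that the functions can be dry-run with echo.

```shell
#!/bin/sh
# Assumed names: replace them with the image and data directory you actually use.
SBOT_IMAGE=${SBOT_IMAGE:-example/ssb-pub}     # hypothetical image name
SBOT_DATA=${SBOT_DATA:-$HOME/ssb-pub-data}    # hypothetical host data directory
DOCKER=${DOCKER:-docker}                      # set DOCKER=echo for a dry run

# create-sbot: start the pub in the background on the default port 8008,
# limited to 25% of one CPU.
create_sbot() {
    "$DOCKER" run -d --name sbot \
        --cpus 0.25 \
        -p 8008:8008 \
        -v "$SBOT_DATA:/home/node/.ssb" \
        --restart unless-stopped \
        "$SBOT_IMAGE"
}

# sbot: pass the given arguments through to the image. The -it options are
# only added when stdin is a terminal, so the same wrapper can also be used
# from a web page or a background task.
sbot() {
    tty_flags=
    if [ -t 0 ]; then tty_flags=-it; fi
    # $tty_flags is deliberately unquoted so that it disappears when empty
    "$DOCKER" run $tty_flags --rm \
        -v "$SBOT_DATA:/home/node/.ssb" \
        "$SBOT_IMAGE" "$@"
}
```

With these functions loaded, create_sbot would be run once after each upgrade, and something like sbot whoami would then call the command inside the image.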

Note: To allow a web page to get invitations when sbot is in a Docker container, the command the page calls must NOT include the -it (interactive terminal) options.

Give your pub a name. See more on updating profiles, such as adding an image, here; note that you can also add a --description field.

Posts:

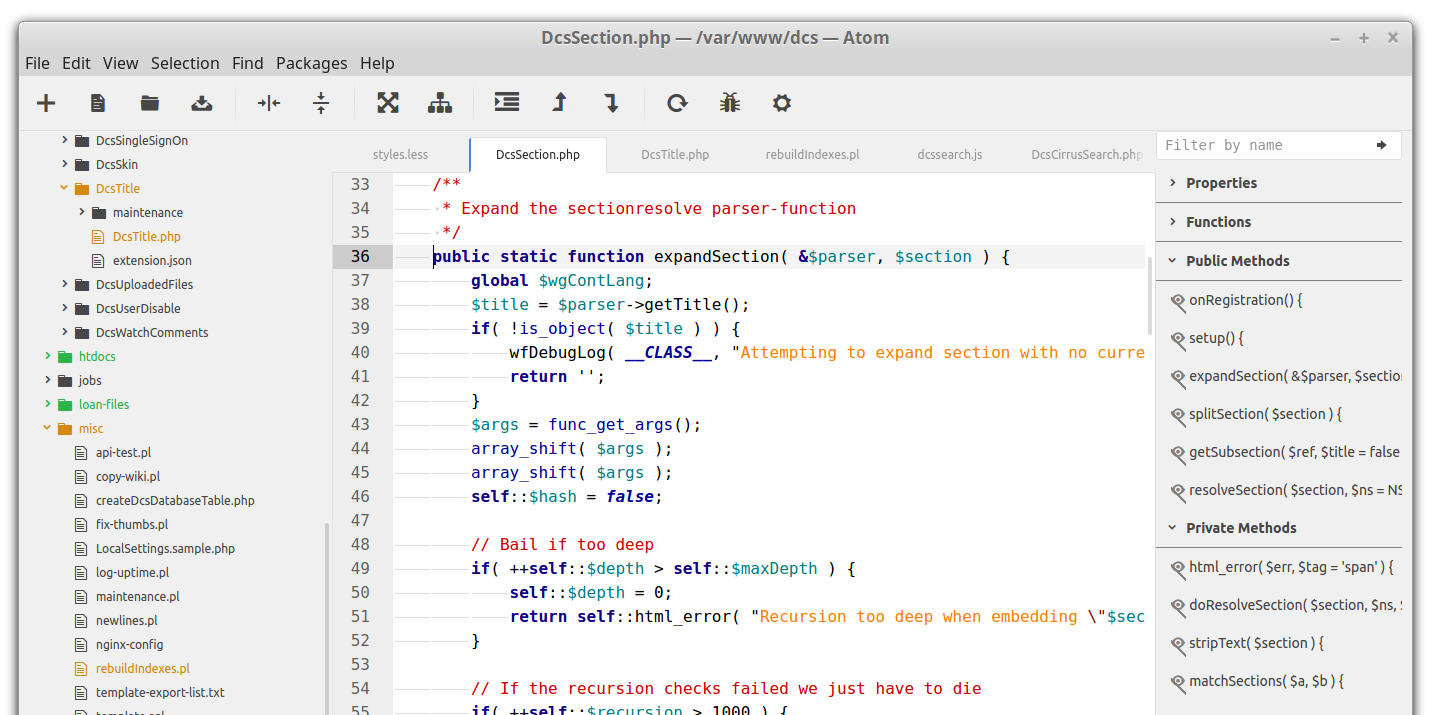

== Plugins ==

Scuttlebot is extendable via a plugins model. Plugins are managed using the plugins commands in a running scuttlebot (e.g. sbot plugins.install <PLUGIN>), for example to add the capability to send private encrypted messages.

== JavaScript ==

To access the full functionality of sbot, commands need to be called from JavaScript. This is simply a matter of requiring the ssb-client. For example, sending a private message (which can't be done from the command-line) can be done with a basic script which resides in the scuttlebot directory.

== sbot troubleshooting ==

Node user: The Docker container seems to require some hard-wired paths involving the "node" UNIX account. I installed it in my own home directory as myself, and things kept failing due to missing files in /home/node, so I just made a symlink from /home/node to my own user dir and then things worked.

Calling sbot from web pages: Again this only affects Docker users. The recommended command to call sbot shown in the installation guide uses the -it (interactive terminal and TTY) options, but these only work when you are running it from a shell, and need to be removed if calling it from a web page (or a background task or something).

Dealing with problem plugins: You might install a plugin (with sbot plugins.install <PLUGIN>) that turns out to have errors on startup, which means the container can't start, so you can't call sbot again to remove the plugin! You can edit the plugins manually, since they are persistent data outside of the container, which is transient. Plugins are listed in the config file in the root of the ssb data directory. You can nuke the container, remove the plugin from the list or set it to false, then run a new container.

Pruning the blobs directory: The main space consumed is the blobs, which are just a non-essential cache of files. These can be pruned by size or age without causing any problems.
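As a rough illustration of pruning by age, the following sketch uses find to delete cached files that have not been modified for a given number of days. The function name is made up for this example, and the location of the blobs directory (typically a blobs folder inside the ssb data directory) is an assumption you should check on your own installation before running anything destructive.

```shell
# prune_blobs DIR DAYS: delete cached blob files under DIR that were last
# modified more than DAYS days ago, then remove any directories left empty.
prune_blobs() {
    blobs_dir=$1
    max_age_days=$2
    [ -d "$blobs_dir" ] || { echo "no such directory: $blobs_dir" >&2; return 1; }
    find "$blobs_dir" -type f -mtime +"$max_age_days" -delete
    find "$blobs_dir" -mindepth 1 -type d -empty -delete
}

# Example (path is an assumption):
#   prune_blobs "$HOME/ssb-pub-data/blobs" 30
```

Since the blobs are only a cache, anything deleted this way should be fetched again from peers when it is next needed.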
old-files-purge.pl is a script that allows the recursive nuking of files in a directory structure older than a specified age.

== Differences between Scuttlebutt and... ==

Retroshare has all of its features included in the application, requires explicit key sharing between people in order to exchange data, does not use rumor message passing, and does not use pubs as an on-ramp (or slip road) to the network. I tried it a few years ago, and the process of creating and sharing your key with another person in order to share things with them was far more difficult than using SSB. Also, according to the outdated wiki, Retroshare does not support IPv6.

== Backup and restore ==

The most important file to back up in order to preserve your identity in Scuttlebutt is the secret file, which is the private key corresponding to your public ID. But with this alone it's difficult to rejoin the network from scratch. For example, if you try rejoining via a pub you'll find that after a few hours of synchronisation, all of your own historical feed and follows are missing. A more reliable way is to back up both the secret and the conn.json file, which should retrieve all the information. You can use a conn.json from the past, or even one from one of your contacts, and that should be sufficient to get you connected back into your social graph, where you can retrieve all your information as people come online and go offline.

== Protocol ==

The documentation for the SSB protocol is very clear and easy to understand. There is also the handbook for more information if needed. Reference formats:

Messages in a feed form an append-only log in which each entry refers to the previous one by its id (the first points to null). The id of a message is just a hash of the message including the signature. Since the messages and blobs are referenced by ID they are immutable.

== Message IDs ==

Computing message IDs can be difficult to start with.
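As a minimal sketch of the hashing step, the function below reads an already-serialised message value (signature included) on stdin and prints an ID of the form %<base64 of the SHA-256 hash>.sha256. It assumes the input is byte-for-byte the serialisation the protocol hashes (the protocol guide describes JSON with two-space indentation) and that it contains only ASCII characters, since the protocol's handling of non-ASCII text is not reproduced here. It also assumes openssl and base64 are installed; the function name is made up for this example.

```shell
# ssb_msg_id: hash the serialised message value read from stdin and print
# the corresponding message ID.
ssb_msg_id() {
    printf '%%%s.sha256\n' "$(openssl dgst -sha256 -binary | base64)"
}

# Example, with message.json holding the exact serialised value:
#   ssb_msg_id < message.json
```

The same three steps (hash, base64-encode, wrap in % and .sha256) apply in any language, so this can be used to cross-check an implementation written in JavaScript or Python.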

You can compute a message id in JavaScript or in Python.

== Extending ==

The easiest way to make an application on Scuttlebutt is to use ssb-client. If you want to extend the functionality you can publish messages of types that aren't already used, e.g. the chess game uses the type "chess_invite". All of these types are stored in your feed. If you want to write to another feed you need to make another key pair.

You can also extend patchbay to add your own page; see the readme on the repo for more info.


Git |

| Posted by Nad on 23 June 2008 at 08:24 |

|---|

This post has the following tags: Libre software

|

| Git is a distributed revision control and software code management project created by Linus Torvalds, initially for the Linux kernel development. Git's design was inspired by BitKeeper and Monotone. Git was originally designed only as a low-level engine that others could use to write front ends such as Cogito or StGIT. However, the core Git project has since become a complete revision control system that is usable directly. Several high-profile software projects now use Git for revision control, most notably the Linux kernel, X.org Server, One Laptop per Child (OLPC) core development, and the Ruby on Rails web framework.

Git differs from systems such as CVS or Subversion in that the database is maintained beside the working filesystem on peers. Each peer is easily synced to any other by using push/pull or fetch. In Subversion you use three main directory structures: the trunk, branches and tags. In Git the trunk is equivalent to HEAD, which refers to the SHA-1 of the latest commit. Branches are used to fork development from the HEAD if, for example, bug fixing is required. A tag in Git is just a named SHA-1 commit, which is effectively a static reference to a particular snapshot of code. If a repository is cloned, Git tracks master, which is the latest commit on your local branch, as well as origin/master, which is the last known commit from the repository you cloned from. The latter is updated when you fetch from, or push your changes back to, the repository you cloned from.

== Pull requests ==

The normal workflow for contributing to open source projects is to fork the project's repo, make your own changes locally, commit and push them, and then submit a pull request. This is done from your own repo's page in Github or Gitlab, and adds your request to the original "upstream" repo, where one of the developers with commit access can review the request and merge it if they're happy with it, or add comments to recommend further changes etc.

It's best to create a new branch and then do your pull request from within the branch, for a couple of reasons. First, after you've done a pull request, any subsequent commits you make will automatically be included in the pull request. This is very useful if you realise other changes need to be made that you forgot about, or to commit new changes during conversation with the upstream devs. Second, you may want to make several different pull requests that are in the same repo, but concern different features or aspects of the code.
By creating a branch specifically for each pull request, you can do as many pull requests as you like while keeping them compartmentalised, and remain free to work as you like on the master branch.

== Reverting to a specific commit ==

What I want is a kind of revert functionality similar to the wiki, where you take the repo back to a particular state marked by a commit ID and then make a new commit noting that you're reverting. Note that I'm not talking about winding the repo back, because that would remove all the subsequent commits, and I'm not talking about branching off at the old commit. The aim is a new commit that takes us back to the state at SHA1.

== Reverting local changes ==

To revert local changes back to the local repo's last commit, you can use git reset --hard. You can also add a SHA after that to revert further back. To revert just specific files in the working copy to the last commit, use git checkout: with no parameter it will show you the differences, or you can specify a file or wildcard files etc.

== General workflow for a development branch ==

First create the new branch and push it to the repo. Work on either branch, and occasionally you'll want to bring various updates such as bug-fixes from master into the dev branch (use origin/master if you want to refer to your local copy of the remote's master). If you have a conflict, manually edit the file and git add it before committing the change.

Eventually, the new branch will be good to go and need to be merged back in with master. We don't want to lose our commit history, so we do a rebase first (the -i switch allows you to see beforehand what commits will be added). There will likely be some conflicts; you can either skip those commits (and deal with those files manually later) by running git rebase --skip each time, or manually edit out the conflict and git add the file. After it's all done, commit the merge and push the changes!
Finally, delete the branch locally and then remotely.

== Add a new tag ==

== Make a new "master" repo from a clone ==

== Get the clone URL from within a repo ==

You can find the details about a repo's remote origin, including its URL, from within the working copy. If the repo is corrupted you may need to use a different command.

== Updating just a single file ==

Sometimes you want to bring only a single file in a working copy up to date and leave everything else as it is.

== Showing local changes ==

The following command shows the changes between the working directory and the index, i.e. what has been changed but is not yet staged for a commit.

git diff

git diff --cached

git diff HEAD

== Nuking a local branch and replacing with a completely different one from the origin ==

This example nukes the local master branch and then replaces it with the one in the remote origin, which has completely replaced the original one. This assumes we're on a different branch than master to start with.

== GitHub Webhooks ==

Github offers notifications via Webhooks so that services can respond dynamically to events occurring on their repositories. We use this to have some clones on the server automatically update whenever a push occurs. A PHP script can respond to Github notifications that are in JSON format (the default), using the optional secret to validate the request. Note that the web-server must have permission to execute git pull on the repo in question.

== GitLab Webhooks ==

GitLab also offer web-hooks as part of their integration system. It's very similar to Github, with a slightly different PHP script. Note: I've extracted the repo name from url rather than using name, because the project name can be different than the repo name.

== Hosting your own Git repo ==

Hosting your own Git repo is much simpler than with Subversion because every clone of the repo is complete and can be used as the source for all the other clones to push to. Nothing needs to be set up or configured for existing Unix accounts to connect to Git repositories over secure shell; simply clone a repository using its SSH address. If you'd like to restrict the users connecting to the repo so that they are only able to perform git operations, then you can add a restriction to their authorized_keys file.

Setting up a web-based viewer is also a useful feature when serving your own repos. The best one I've found is Gitlist (not to be confused with Gitalist, which is so fat that you'll probably need to wait an hour for it to download its dependencies and compile! I don't even want to know what the configuration process is like!).
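Returning briefly to the webhook secret mentioned above: the validation step can be sketched in shell, independent of the PHP script the text refers to. GitHub signs the raw request body with HMAC using the shared secret and sends the result in a header (X-Hub-Signature-256 for the SHA-256 variant). The function names below are made up for this example, openssl is assumed to be installed, and the plain string comparison is not constant-time, so treat it as an illustration only.

```shell
# github_signature SECRET: read the raw request body on stdin and print the
# signature string that the header would be expected to contain.
github_signature() {
    printf 'sha256=%s\n' "$(openssl dgst -sha256 -hmac "$1" | sed 's/^.*= *//')"
}

# verify_github_signature SECRET HEADER_VALUE: succeed only if the body read
# from stdin matches the signature sent in the header.
verify_github_signature() {
    [ "$(github_signature "$1")" = "$2" ]
}

# Example (variable names are assumptions):
#   if printf '%s' "$body" | verify_github_signature "$secret" "$header"; then
#       git -C /path/to/clone pull
#   fi
```

Only when the check succeeds should the receiving script go on to run git pull on the clone.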
Gitlist is PHP-based, so you just unpack it into your web-space and set up the appropriate rewrite rules etc. as you would for any other webapp like Wordpress or MediaWiki. It has a very basic configuration file which you point at the directory containing the repos you want to view, and in which you specify any repos you'd like to be hidden from view, and that's it! You can check our installation of Gitlist out at code.organicdesign.co.nz.

A simple way to have some repos hidden, except if a password is supplied in the URL, is to add a short snippet into index.php after the $config object has been defined. Note that this isn't very secure, especially if you don't force SSL connections, but if you just want to have repos that aren't generally accessible but are easy to provide access to if desired, then this is a good method. Note also that the hidden repos config value is a regular expression, not just a path.

== Automatically updating working-copies ==

It's often useful to have a remote repository automatically update its working copy whenever it's pushed to. There are a lot of sites around that explain how to do this, but they all refer to a post-update script that no longer exists. I found this site which has a local copy of the script, and I also added a copy to our tools repo here in case that one disappears as well. All you need to do is save that script into your remote repo's .git/hooks directory with the file name post-update, make sure it's executable, and set the repository's receive.denyCurrentBranch to ignore, so you don't get error messages every time you push to it. If the ownership or mode of files may need to change without being considered as changes, then there is a further setting to add for that.

To have this update further notify other servers so that they can also update their working copies or perform other actions (similar to GitHub's webhooks solution), you can simply add wget commands after the wc_update function call toward the end of the post-update script.
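The repository settings for the receiving repo can be applied with git config, as in the sketch below. receive.denyCurrentBranch is the setting named in the text; core.fileMode false is an assumption about which setting was meant for ignoring mode changes (Git does not track file ownership at all). The function name is made up for this example.

```shell
# configure_push_target REPO: prepare a non-bare repository so that pushes to
# its checked-out branch are accepted and the post-update hook can run.
configure_push_target() (
    set -e
    cd "$1"
    # Accept pushes to the currently checked-out branch without complaint
    git config receive.denyCurrentBranch ignore
    # Assumed setting: do not treat changes to file modes as modifications
    git config core.fileMode false
    # Make sure the hook is executable if it has already been installed
    if [ -f .git/hooks/post-update ]; then
        chmod +x .git/hooks/post-update
    fi
)
```

Run it once on the server against the repository that receives the pushes, after saving the post-update script into its hooks directory.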
The git-update script on the target server can then check the password and perform a sudo git pull using the same procedure as described above for GitHub's webhooks. If the update call will always be coming from the same server, then rather than use a password, the script can just check the REMOTE_ADDR instead.

== Separating a sub-directory out to its own repository ==

Often it's useful to make a single directory within a repository into its own new repository. For example, a client may wish to begin hosting their own repository, or after migrating from Subversion a lot of directories that made sense being bundled together into a single repo are no longer practical together (because Subversion allows checking out of individual paths, but Git does not). The git filter-branch command has an option called subdirectory-filter which is exactly for this purpose. First make a copy or new clone of the repository that contains the directory in question, then run git filter-branch with that option on it. You'll see, if you look at the files both present and in past commits, or do a git log --oneline, that only the files and commits that apply to the sub-directory now exist. But if you check the size of the repo you'll notice that it's actually more than doubled in size! So we need to do a bit of cleaning up to get rid of all the old data completely. To create this as a new repo, for example in Gitlab, first create a new empty project, then add the new origin and push the stripped repo to it.

== Changing history with Git ==

=== Removing items from history ===

A couple of circumstances that require this are, for example, when you accidentally committed a large binary or something in the past that you'd like to get rid of to reduce the size of the repo, or when you want to split off some part of a repo into another repo and remove all evidence of it from the current repo.
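For the sub-directory split described in the previous section, a minimal sketch of the filter and the clean-up might look like this. The function name is invented, the clean-up commands are one common way of discarding the backup refs and old objects rather than necessarily the ones originally shown, and it should only ever be run on a copy or fresh clone because it rewrites history.

```shell
# split_subdirectory REPO SUBDIR: rewrite REPO (a copy or fresh clone!) so
# that SUBDIR becomes the repository root, then discard the old data.
split_subdirectory() (
    set -e
    cd "$1"
    export FILTER_BRANCH_SQUELCH_WARNING=1   # skip the deprecation notice and delay
    git filter-branch --subdirectory-filter "$2" -- --all

    # Clean up: reset the working copy, drop the refs/original backups that
    # filter-branch keeps, expire the reflog and garbage-collect the old objects
    git reset -q --hard
    git for-each-ref --format='%(refname)' refs/original/ |
        xargs -r -n 1 git update-ref -d
    git reflog expire --expire=now --all
    git gc --quiet --aggressive --prune=now
)

# Example (paths are assumptions):
#   split_subdirectory /tmp/project-copy clients/acme
```

After this, pointing origin at a new empty project and pushing publishes the stripped repository.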
There are many posts around showing you how to do this with the index-filter and tree-filter options of git filter-branch, and while these options succeed in removing all the files as they should, if you look at the commit history there are still many commits in the list that have nothing to do with the remaining files! I haven't figured out what's going on here yet, so to thoroughly remove files and all their associated commits, I see no other option than moving everything you want to keep into its own directory (using filter-branch, because mv will destroy the history), then using the subdirectory-filter option described above to make a new repository out of this directory, and then moving the items back to where you want them.

For example, here we remove everything except the items listed in the file keep.txt. We do it by making a tmp directory, moving everything we want to keep into it, and then using the subdirectory-filter method to retain only that tmp directory's contents. The steps are: first create the temporary directory and move all items throughout history into it using xargs (note that this assumes you've already made your keep.txt file list and it's in the repo dir's parent); then tidy everything up; then run the sub-directory filter, making the tmp directory the new repo root; and finally tidy up again.

You can check that it's worked by checking out various commits and looking at the files, and by checking the commit log for words in comments you know should only be associated with the files that have been removed. For example, I removed an auction site from my private development repo after the owners wanted to start hosting their own code, and so I checked the log for "bid" and "offer".

=== Inserting commits into the past ===

One interesting thing I had to do on a Git repository, which could be required again, was to insert commits into the history. I had migrated a site over to Github that had previously been worked on directly over FTP.
After migrating the site to Github and working on it for a week or so, I discovered that many of the directories had a lot of backup versions of files with the date of the backup in the filename. Obviously it would be much more organised if those backup files could actually be inserted into the revision history of their associated file. So here's a general procedure for how to insert revisions of a file into history.

Take an example repository containing a file called foo.txt which has three revisions. I want to place two extra revisions before the "B state" revision, so first let's wind the head back to the commit before that. Now we add our two new commits (which I've commented as A.1 and A.2), and we add an extra commit that puts the file back to the same state it was in before the new commits (d6f8638); that way we avoid having to deal with conflicts in the next step. Then check the log again to see what we have.

Then we wind the head back to the top, which is now a detached fork, so we make it into a branch called tmp so we can refer to it more easily. This tmp branch is connected at the first commit, since that's where we went back to and forked by making new commits there, so we can use rebase to disconnect it from there and connect it to the new end of the master branch. Checking the log now shows just what we wanted: the original three commits with our extra two inserted in.

The only problem left to fix up is that we're in the tmp branch, and master's head is still at the end of the inserted commits. So all we need to do to tidy up is merge the tmp branch into master and delete it. We still need to get these changes to the remote though, and it won't let you push until after you pull first to merge the remote and local versions together. So either pull then push and enter a comment for the merge commit, or just force the local state onto the origin.
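The whole procedure can be replayed in a throwaway repository with the sketch below. It is a reconstruction under assumptions rather than the original commands: the repository lives in a temporary directory, the commit messages are invented, and git reset --hard, git rebase --onto and a fast-forward merge are my choices for the "wind back", "reconnect" and "tidy up" steps. Note that the extra commit restoring the original state stays in the history, so the log ends up with six entries.

```shell
# insert_history_demo: build the example repository, insert two revisions
# after the first commit, and print the final commit subjects, oldest first.
insert_history_demo() (
    set -e
    repo=$(mktemp -d)
    cd "$repo"
    git init -q
    git symbolic-ref HEAD refs/heads/master    # make sure the branch is "master"
    git config user.name "Demo"
    git config user.email "demo@example.com"

    # The three original revisions of foo.txt
    for state in A B C; do
        echo "$state state" > foo.txt
        git add foo.txt
        git commit -q -m "$state state"
    done
    top=$(git rev-parse HEAD)        # the "C state" commit
    base=$(git rev-parse HEAD~2)     # the "A state" commit

    # Wind master back to the commit before "B state" and add the new revisions
    git reset -q --hard "$base"
    for rev in A.1 A.2; do
        echo "$rev state" > foo.txt
        git commit -q -am "$rev"
    done
    # Extra commit putting the file back as it was, to avoid conflicts later
    git checkout -q "$base" -- foo.txt
    git commit -q -m "Back to A state"

    # Name the old top "tmp", then move its commits onto the new master
    git checkout -q -b tmp "$top"
    git rebase -q --onto master "$base" tmp

    # Tidy up: fast-forward master to tmp and delete the temporary branch
    git checkout -q master
    git merge -q --ff-only tmp
    git branch -q -d tmp

    git log --format=%s --reverse
)
```

In a real repository with a remote, the rewritten master would then have to be pulled and merged, or force-pushed, as described above.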


|

|

| Posted by Nad on 25 April 2017 at 21:07 |

|---|

This post has the following tags: Libre software

|

LAMP |

| Posted by Cyrusty on 18 November 2016 at 09:55 |

|---|

This post has the following tags: Libre software

|

LAMP stands for Linux, Apache, MySQL, PHP and refers to the "technology stack" that's used by the most popular web applications such as Wordpress, Drupal, Joomla!, MediaWiki and GNU social to name a few.

== Linux ==

We normally use Debian on our servers, but Ubuntu is also used on some. On our desktops and notebooks we use Linux Mint. Ubuntu and Linux Mint are both based on Debian, so the instructions for installations and configurations apply to all three without any changes.

== Apache (web-server) ==

Apache is the most popular web-server, but these days Nginx is becoming very competitive with it. Nginx (pronounced "engine x") is more efficient than Apache and is the web-server we prefer to use in our installations. Without a web-server the browser wouldn't get any response to its requests for my webpages; a web-server's job is to listen for requests from browsers and deliver the page they ask for. To see if Nginx is installed and running on my local machine I can access it in my browser by typing in the domain name localhost, or its IP address, which is 127.0.0.1. If I go to either of those in my browser then I'll see whether my computer has a site running on it. I can stop and start nginx from the shell.

== MySQL (database-server) ==

MySQL is a free open source database server, but a few years back it was bought out by Oracle. While it's still open source, the main developers didn't like the direction Oracle were taking it in, so they split off to make their own version called MariaDB, which is the database server we use at Organic Design and recommend for projects we're setting up for others.

== PHP ==

PHP is the programming language that LAMP applications are written in. It runs on the server, but it's also a good idea to get familiar with JavaScript, which runs on the user's side in the browser and which all web applications these days use extensively.

GNU social |

| Posted by Nad on 12 November 2016 at 19:27 |

|---|

This post has the following tags: Libre software

|

== Installation ==

I found installation to be fairly straightforward. It's a basic LAMP system that requires a database to be created beforehand so that it can be referred to in the installation procedure. Simply clone the repo of their source code into your web space and then browse to the install.php script. For friendly URLs you also need a rewrite rule in the web-server configuration.

For some reason, after the installation procedure had finished, the link it gave me to the site included a /social at the start of the path which shouldn't have been there. I noticed that it had given the value "social" to $config['site']['path'], so I set it to an empty string and then things mostly worked. But many things, such as avatars and email confirmation, still included this incorrect prefix. I was able to fix some instances of the problem by editing some paths in the admin/paths screen, but some instances persisted, so in the end I had to add a rewrite rule in the web-server configuration. I raised an issue about this problem, but I don't have much hope of it being dealt with any time soon, because a couple of other minor issues I had had already been raised over a year ago!

There are some other recommended settings in config.php to make things more responsive. You then need to start the background daemons, and you should also have them start on reboot by adding an entry to your crontab.

== Remote connections ==

For some reason remote follows don't work when I use the email form of my ID, but if I use the URL form it works fine.

== Connecting to Twitter ==

There are many plugins available for GNU social; most are shipped with the system but are not enabled by default. The TwitterBridge allows you to sign in with Twitter, to have your GNU social posts go onto your Twitter stream, and to have your Twitter contacts' posts show in your GNU social stream.
First enable the application in your config.php. To get your consumer key and secret, you need to add GNU social as a new app in Twitter from https://apps.twitter.com/app/new. The name for your app needs to be globally unique, e.g. "OrganicDesign GNU social connection", and this is also used where it says YOUR_APP in the GNU social config. Set the URL to the base URL of your site and the callback to URL/twitter/authorization. You'll need to have your Twitter account connected to your mobile to add an app - just one more reason for leaving!

Then in GNU social, go to the Twitter menu item in the admin settings and click on the "connect my twitter account" link, and make sure the app name is the same as the name you used for the app you created in Twitter. Then in your user settings, go to the Twitter menu and set the "Automatically send my notices to Twitter" and "Subscribe to my Twitter friends here" options. You then need to restart the daemons to add the new ones to the background jobs.

== Plugins ==

The plugin system is very intuitive. Simply make a new directory with the name of your new plugin in the plugins directory, and in it a file of the same name followed by Plugin.php. The PHP file must contain a class of that name followed by Plugin, which extends the Plugin class. You then subscribe your extension to various events by defining methods in your class named after the events, prefixed by on. There is a sample extension in the plugins directory, as well as developer documentation and a list of all the available events. I made this simple extension which adds a new CSS so we can customise our GNU social without rebuilding the entire theme. See also the community plugins list, community development guide and the official code docs.

== Issues ==

400 error when posting: This seems to be a session expiry issue, and the post will work if you refresh the page and post again.

|

Tox |

| Posted by Nad on 25 October 2014 at 19:42 |

|---|

This post has the following tags: Libre software

|

|

== Installation on Linux Mint ==

They have an apt repository that works for Debian and its many variants such as Linux Mint and Ubuntu.

== Tox DNS ==

People's IDs in the Tox system are really long hashes, like a bitcoin address, which are impossible to remember, but there are some centralised DNS servers that you can enter name and address pairs into so that people can refer to users by name instead. The current servers are utox.org and toxme.se.

|

JQuery |

| Posted by Nad on 11 November 2009 at 02:41 |

|---|

This post has the following tags: Libre software

|

| jQuery is a cross-browser JavaScript library designed to simplify the client-side scripting of HTML. It was released in January 2006 at BarCamp NYC by John Resig. Used by over 55% of the 10,000 most visited websites, jQuery is the most popular JavaScript library in use today.

jQuery is free, open source software, dual-licensed under the MIT License or the GPL v2. jQuery's syntax is designed to make it easier to navigate a document, select DOM elements, create animations, handle events, and develop Ajax applications. jQuery also provides capabilities for developers to create plug-ins on top of the JavaScript library. This enables developers to create abstractions for low-level interaction and animation, advanced effects and high-level, theme-able widgets. The modular approach to the jQuery library allows the creation of powerful dynamic web pages and web applications.

== jQuery plugins and libraries powered by jQuery ==

=== jQuery File Upload ===

=== jFormer - jQuery Form Framework ===

jFormer is a form framework written on top of jQuery that allows you to quickly generate beautiful, standards compliant forms using the latest techniques in web design.

=== CorMVC - jQuery-powered Model-View-Controller Framework ===

CorMVC is a jQuery-powered Model-View-Controller (MVC) framework that can aid in the development of single-page, web-based applications. CorMVC stands for client-only-required model-view-controller and is designed to be the lowest possible entry point to learning about single-page application architecture. It does not presuppose any server-side technologies, or a web server of any kind, and requires no more than a web browser to get up and running. It evolved out of the author's (Ben Nadel) recent presentation, Building Single-Page Applications Using jQuery And ColdFusion, and will continue to evolve as he thinks more deeply about this type of application architecture. We're building an experimental nodal interface to make a start on the unified ontology using corMVC.

== Sorting lists ==

Here's an excellent compact method of sorting list items based on any kind of criteria that uses jQuery without any other plugins. In the example, the lists on the pages are sorted according to the anchor text in a link contained in the LI item.

|

Joomla! |

| Posted by Nad on 1 November 2010 at 22:05 |

|---|

This post has the following tags: Libre software

|

| Joomla is a free and open source content management system (CMS) for publishing content on the Web and intranets. It comprises a model–view–controller (MVC) Web application framework that can also be used independently.

Joomla is written in object-oriented PHP, uses software design patterns, stores data in a MySQL database, and includes features such as page caching, RSS feeds, printable versions of pages, news flashes, blogs, polls, search, and support for language internationalisation (i18n). Within its first year of release, Joomla was downloaded 2.5 million times. Over 6,000 free and commercial plug-ins are available.

Installation

I found installation to be very straightforward: simply unpack the downloadable zip into a web-accessible location on the server, making sure that it is writeable by the web server, then navigate to that location to go through the install procedure. It allows the use of a table prefix so that it can be installed into existing MySQL databases along with other applications such as Wordpress or MediaWiki. A useful feature of the Joomla installation is that it allows the newly set up site to be optionally populated with sample data to help Joomla-noobs get started.

Skinning

todo... (removed old links, need new ones)

Extensions

See extensions.joomla.org for a comprehensive extension search tool. They have thousands of useful extensions that are very well organised and reviewed, many of them with live demos.

Some extensions to look into

Documentation

One problem with Joomla is that the documentation is very good for users, but very sparse for developers. Most of the API documentation is empty and still waiting to be populated by developer contributions. I found that for writing my first extensions I needed to wade through the Joomla code to figure out how to do things.

Technical issues

I made my first Joomla extension in August 2015 for the Ligmincha Brasil site. It allows single sign-on between the Joomla and the volunteers' MediaWiki. Any users of the wiki who are in the specified MediaWiki group ("Joomla" by default) are automatically able to access the administration side of the Joomla if they are logged in to the wiki.

I had some trouble at first because one of the plugin events I wanted to use, onAfterInitialise, was not being called, but the other, onUserAuthenticate, was. It turned out (after I asked a question on stackoverflow) that events are only triggered for plugins in their corresponding group, so if you want a plugin that uses events in different groups, you need to make your extension into a package of multiple plugins. Once I got the onAfterInitialise event to trigger in my code (by making it a system plugin), it turned out that I didn't need the onUserAuthenticate event at all, because I could call JFactory::getSession()->set directly, which means that user authentication isn't required. The code is in our Git repo here.
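The group behaviour described above can be pictured with a small model. This is only an illustrative sketch in Python, not Joomla's actual dispatcher: the `PluginRegistry` class and its `register` and `trigger` methods are hypothetical names, and the only thing carried over from the text is the assumption that an event reaches just the plugins registered in the group being triggered.

```python
from collections import defaultdict


class PluginRegistry:
    """Toy model of group-scoped plugin events (hypothetical, not Joomla's API)."""

    def __init__(self):
        # group name -> list of plugin objects registered in that group
        self._groups = defaultdict(list)

    def register(self, group, plugin):
        self._groups[group].append(plugin)

    def trigger(self, group, event, *args):
        """Call `event` on every plugin in `group` that defines it."""
        results = []
        for plugin in self._groups[group]:
            handler = getattr(plugin, event, None)
            if callable(handler):
                results.append(handler(*args))
        return results


class SingleSignOn:
    """One plugin class that defines handlers for events from two groups."""

    def onAfterInitialise(self):
        return "initialised"

    def onUserAuthenticate(self):
        return "authenticated"


registry = PluginRegistry()
registry.register("authentication", SingleSignOn())

# The plugin lives only in the authentication group, so the system-group
# event never reaches it even though the handler method exists.
system_results = registry.trigger("system", "onAfterInitialise")
auth_results = registry.trigger("authentication", "onUserAuthenticate")
```

In this model the fix is the same as the one described: register the plugin (or a second plugin from the same package) in the group whose events it needs.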

Wordpress

Posted by Nad on 9 May 2010 at 22:57

This post has the following tags: Libre software

WordPress is a free and open source blogging tool and a content management system (CMS) based on PHP and MySQL. It has many features including a plug-in architecture and a template system. WordPress is used by over 14.7% of Alexa Internet's "top 1 million" websites and as of August 2011 manages 22% of all new websites. WordPress is currently the most popular blogging system in use on the web, powering over 60 million websites worldwide. It was first released on May 27, 2003, by founders Matt Mullenweg and Mike Little as a fork of b2/cafelog. As of April 2013, version 3.5 had been downloaded over 18 million times.

Using MediaWiki accounts on Wordpress

We needed to have a Wordpress site which is seamlessly connected to a MediaWiki so that users can register and log in using the MediaWiki login and registration process only. The Wordpress login/logout/registration links needed to direct users to the equivalent MediaWiki pages for those operations. If the user is already logged in to MediaWiki, then they also need to be automatically logged in as the same user on Wordpress, and conversely, if the user is not logged in to MediaWiki then they should not be logged in to the Wordpress either.

The solution consists of two small scripts: Wordpress.php, a small MediaWiki extension that provides an Ajax callback for getting user info for a passed user ID and token, and Wordpress-wp.php, which goes at the end of the Wordpress wp-config.php file. The latter checks the MediaWiki cookies to see if a user is logged in, and if so checks via the MediaWiki Ajax callback whether the cookie info is valid, and if it is, creates and/or logs in the equivalent Wordpress user.

How it works

The idea is that the Wordpress checks on initialisation if there are any cookies present that represent a logged in user for the associated wiki, specifically a UserID and a Token cookie. The Token cookie is a unique character sequence which only exists if the user is currently logged in, and only lasts as long as the session, after which time it's replaced by a new one. If the Wordpress does not find any such cookies, it logs any currently logged in Wordpress user out and returns to allow normal anonymous browsing of the site. If however a MediaWiki user ID and Token are found, then the code calls the MediaWiki Ajax handler (Wordpress::user) with these values (note that this request is not an Ajax request as it's being made by the Wordpress server-side PHP, not the client-side JavaScript like usual).

The Ajax handler will check if the passed token matches the token for that user ID in the database, and if it does it returns the details for the user: their username, email address and a password to be used internally by the Wordpress (not the user's MediaWiki password; the password is not really needed since normal Wordpress logins are disabled). If the Ajax handler does not return any valid user then the cookie values were invalid and the result is the same as if there were no cookie values at all; i.e. any currently logged in Wordpress user is logged out, and the function returns allowing normal anonymous browsing of the Wordpress site. If valid user information is returned, then first the Wordpress checks if it has an existing user of that name and if not it creates one using the information returned by the Ajax handler. It then makes either the existing user of that name or the newly created one the current user, first logging out any currently logged in user if it was a different one. And that's it for the Wordpress side.

The MediaWiki side consists only of the Ajax handler. An Ajax handler was used even though it's not a true Ajax request because it's much lighter than processing a full MediaWiki page request. The handler simply receives a UserID and Token, creates a User object from the ID and checks if the User token matches the passed token to ensure there's no forgery going on. If there's a match it returns the information for the user in JSON format, or an empty JSON object if either there's not a match or the request did not derive from the local host.

Issues

This method requires that both the wiki and the Wordpress are running under the same domain so that the Wordpress is able to access the wiki's cookies. If they were under different domains, then a client-side Ajax request to the wiki domain would need to be added to obtain the cookies.
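The flow described under "How it works" can be summarised in a short sketch. It is written in Python purely for illustration (the real scripts are PHP), and every name in it (`USERS`, `user_info`, `sync_login`) is hypothetical; the in-memory dictionary stands in for the wiki's user table, and the internal password is left out for brevity.

```python
import hmac
import json

# Stand-in for the wiki's user table: user ID -> stored token and details.
# All values here are made up for the example.
USERS = {
    42: {"token": "s3cr3t", "name": "Alice", "email": "alice@example.com"},
}


def user_info(user_id, token, from_localhost=True):
    """Model of the wiki-side handler: JSON details on a match, else '{}'."""
    user = USERS.get(user_id)
    if not from_localhost or user is None:
        return "{}"
    # Constant-time comparison is a hardening choice, not stated in the text.
    if not hmac.compare_digest(user["token"].encode(), token.encode()):
        return "{}"
    return json.dumps({"name": user["name"], "email": user["email"]})


def sync_login(cookies):
    """Model of the Wordpress side: return who should be logged in, or None."""
    user_id = cookies.get("UserID")
    token = cookies.get("Token")
    if user_id is None or token is None:
        return None  # no wiki cookies: log out and browse anonymously
    info = json.loads(user_info(int(user_id), token))
    if not info:
        return None  # invalid cookies are treated like missing ones
    return info["name"]  # the existing or newly created user of that name
```

For example, `sync_login({"UserID": "42", "Token": "s3cr3t"})` yields the user name, while a wrong token or missing cookies both yield `None`, which corresponds to the anonymous-browsing case.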
Another minor issue is that for the returnto feature to work (allowing the browser to redirect back to the Wordpress after login, logout or registration) the following conditions must be met:

If any of these are not the case, then the default code will not allow the returnto feature to work properly, but the code can be customised pretty easily to work with your setup; for example, a simple redirect could be added into the wiki's LocalSettings.php.

Internationalisation (i18n)

Wordpress doesn't by default come with multilingual support, but it does have an internationalisation mechanism and many user-contributed translations of the interface messages in .mo files. I've installed the Wordpress Language plugin which allows for easy switching of language with a setting for the admin dashboard and the public site.

The single sign-on solution above can be integrated with the Wordpress internationalisation by passing the MediaWiki user's language selection along with the other info passed back by the Ajax handler, and then the Wordpress-wp.php script must set the WPLANG constant to this value. One issue with this is that the WPLANG constant needs to be set prior to the inclusion of wp-settings.php, but Wordpress functions such as get_current_user_id() can only be called after the inclusion of wp-settings.php. The $mwuser variable is required by both, so the script needs to be split into two parts. This can be done either by containing it all in a class and making $mwuser into a static class variable, or by making $mwuser a global.

The plugin does not come prepackaged with all the .mo files though (although there is a pay version that does), so the admin user needs to change to each of the languages manually from the dashboard to download the files if automatic language selection is wanted. The languages are not actually downloaded as files; the data is inserted directly into the wp_icl_strings and wp_icl_string_translations database tables.

Skinning

Useful extensions

Online shop extensions

Resources

Drupal

Posted by Nad on 1 November 2010 at 22:13

This post has the following tags: Libre software

Drupal is a free and open source content management system (CMS) written in PHP and distributed under the GNU General Public License. It is used as a back-end system for at least 1% of all websites worldwide ranging from personal blogs to larger corporate and political sites including whitehouse.gov and data.gov.uk. It is also used for knowledge management and business collaboration.

The standard release of Drupal, known as Drupal core, contains basic features common to most CMSs. These include user account registration and maintenance, menu management, RSS-feeds, page layout customization, and system administration. The Drupal core installation can be used as a brochureware website, a single- or multi-user blog, an Internet forum, or a community website providing for user-generated content. Over 6000 (as of October 2010) free community-contributed addons, known as contrib modules, are available to alter and extend Drupal's core capabilities and add new features or customize Drupal's behavior and appearance. Because of this plug-in extensibility and modular design, Drupal is sometimes described as a content management framework. Drupal is also described as a web application framework, as it meets the generally accepted feature requirements for such frameworks. Although Drupal offers a sophisticated API for developers, no programming skills are required for basic website installation and administration.

Installation

Installation was straightforward and allowed me to install it into the existing database alongside MediaWiki, Joomla and Wordpress using a table prefix.

Structure

From the Drupal overview: If you want to go deeper with Drupal, you should understand how information flows between the system's layers. There are five main layers to consider:

User, Permission, Role

Every visitor to your site, whether they have an account and log in or visit the site anonymously, is considered a user to Drupal. Each user has a numeric user ID, and non-anonymous users also have a user name and an email address. Other information can also be associated with users by modules; for instance, if you use the core Profile module, you can define user profile fields to be associated with each user.

Anonymous users have a user ID of zero (0). The user with user ID one (1), which is the user account you create when you install Drupal, is special: that user has permission to do absolutely everything on the site.

Other users on your site can be assigned permissions via roles. To do this, you first need to create a role, which you might call "Content editor" or "Member". Next, you will assign permissions to that role, to tell Drupal what that role can and can't do on the site. Finally, you will grant certain users on your site your new role, which will mean that when those users are logged in, Drupal will let them do the actions you gave that role permission to do. You can also assign permissions for the special built-in roles of "anonymous user" (a user who is not logged in) and "authenticated user" (a user who is logged in, with no special role assignments). Drupal permissions are quite flexible -- you are allowed to assign permission for any task to any role, depending on the needs of your site.

Admin user and role

Modules

Modules and themes are installed by downloading and unpacking them into sites/all/modules or sites/all/themes or to a domain-specific location such as /sites/foo.bar/modules. Note that modules and themes should always be installed under the sites structure, never directly to the modules/themes directories in the codebase root. To check if a module is installed properly, go to the admin/build/modules page to enable/disable modules and ensure dependencies are satisfied, and also go to admin/reports/status to see if there are any problems or issues to attend to.

Drupal modules on OD

We have our modules for all Drupal sites shared in /var/www/drupal6_modules and then symlinked from each of the codebases. Here's a list of the main modules we're using.

Some parts of these modules are incorporated in the core of Drupal 7, which is now released.

Modules to check out

Views

The Views module provides a flexible method for Drupal site designers to control how lists and tables of content (nodes in Views 1, almost anything in Views 2) are presented. Traditionally, Drupal has hard-coded most of this, particularly in how taxonomy and tracker lists are formatted. This tool is essentially a smart query builder that, given enough information, can build the proper query, execute it, and display the results. It has four modes, plus a special mode, and provides an impressive amount of functionality from these modes. Among other things, Views can be used to generate reports, create summaries, and display collections of images and other content.

CKEditor

You can replace textarea fields in the page editor with a visual editor to make it easier for users. CK is an improved version of the old FCK. This is a two-stage process:

Instructions are in the module's documentation. You have to remember to switch off CK if you want to include HTML or PHP in your page.

Organic Groups

Organic Groups (OG) enables users to create and manage their own 'groups'. Each group can have subscribers, and maintains a group home page where subscribers communicate amongst themselves. They do so by posting the usual node types: blog, story, page, etc. A block is shown on the group home page which facilitates these posts. The block also provides summary information about the group. Groups may be selective or not. Selective groups require approval to join, or may even be invitation-only. There are lots of preferences to configure groups as you need. Groups get their own theme, language, taxonomy, and so on. OG integrates well with, and depends upon, the Views module.

Setting up Organic Groups

After initially enabling all the required modules, a message comes up saying You must designate at least one content type to act as a group node and another as a group post. Create new content type if needed. The group node is a content type and is one "page", but it is actually a page that gets turned into the "set up the organic group" page once the configuration is done. You can think of the group node as the front door to your group's pages. It is the form you fill out to create a group.

The other part of the warning message, regarding a group post type, is saying that none of the existing content types are able to be posted into a group; they are set by default to May not be posted into a group. Either new content types can be created that are postable into groups, or existing types can be adjusted to be. We intend to use Organic Groups as an overall ontology containing everything in the site, so we adjust the existing content types rather than creating new ones.

To make groups usable, group-related blocks must be added to the site. Go to admin/site building/blocks to see the available blocks and add them to the various areas of the site. Note that some of these blocks only show up in specific contexts.

Adding a forum to a group

First create the forums and tell them not to be promoted to the front page since they'll be in their respective groups instead. Next, the authenticated users must be given permission to manipulate forum topics, either all or just their own, and also permission in the node module to access content. Note: Forums have an inherent hierarchy which means that the Organic Groups module is redundant if forums are the only type being used in the group. The hierarchy is Containers → Categories ("forums") → Topics → Comments.

Adding members to closed groups

To add members to a closed group, first go to the groups administration page from administration/groups and then the group specific block becomes visible, and you can see the number of current members as a link. Clicking this link takes you to the group's users page which has an option to add members.

Automatically Create a Forum when you create a Group

Download and install OG Forums. Go to admin/og/og_forum and click on "Update old groups" to have forum containers and default forums created for existing groups. Leave all the options on default unless you want to change them for a particular reason, then save. For further info go to the documentation. Don't forget to update the permissions for each group to create new forum discussions or it will not be allowed.

Security

Like MediaWiki, Drupal doesn't come with the ability to restrict read access out of the box. The most popular method for achieving this appears to be the Taxonomy Access Control module (TAC) for permitting access based on tagging and roles, or the TAC lite module to restrict by individual user. You can also use Node Access User Reference and Node Access Node Reference to create fine-grained hierarchical access control; see the alternative solution to Organic Groups blog entry for more detail. However, the Organic Groups module, discussed above, is more appropriate for sites that are already using it, since it has its own access control mechanism based on the group structure.

Flag

Flag (previously known as Views Bookmark) is a flexible flagging system that is completely customizable by the administrator. Using this module, the site administrator can provide any number of flags for nodes, comments, or users. Some possibilities include bookmarks, marking important, friends, or flag as offensive. With extensive views integration, you can create custom lists of popular content or keep tabs on important content.
Flags may be per-user, meaning that each user can mark an item individually, or global, meaning that the item is either marked or it is not marked, and any user who changes that changes it for everyone. In this way, additional flags (similar to published and sticky) can be put on nodes, or other items, and dealt with by the system however the administration likes.

Rules

Install the Rules module if you want to have event-driven behaviour. Make sure rule-making users have permission to view the Rules Admin module.

To make a Rule

Example: Assign Roles to Groups Using Rules

Spamicide

The spamicide module is designed to prevent bots spamming forms without requiring the user to fill in a CAPTCHA. It doesn't seem to be under active development and has a few problems such as ignoring which forms you've elected to put the protection on and putting it on all of them regardless. It works by adding a new hidden input to the form and then treating submissions as spam if that input is filled in. Unfortunately it seems that many bots in the field recognise this input, probably by the recognisable surrounding div. So I modified the module slightly by making it require the hidden input to be populated with "foobaz" and making the form's onSubmit event set the input's value to this. I did this with the following modifications: I added the following snippet after line 288, the first line of the spamicide_form_alter function:
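The idea behind the modification can be sketched as follows. This is a Python illustration of the check rather than the module's PHP, and it is not the snippet referred to above: the function name `is_spam`, the field name `feed_me` and the `modified` switch are hypothetical, and only the expected value "foobaz" comes from the text.

```python
EXPECTED_VALUE = "foobaz"  # value the form's onSubmit handler fills in


def is_spam(form_data, field="feed_me", modified=True):
    """Classify a submission by the content of its hidden field.

    Unmodified behaviour: any value in the hidden field means spam.
    Modified behaviour: anything other than EXPECTED_VALUE means spam,
    so a bot that skips the field, or fills it blindly, is rejected.
    """
    value = form_data.get(field, "")
    if modified:
        return value != EXPECTED_VALUE
    return value != ""
```

A browser that runs the onSubmit handler sends the expected value and passes, while a bot that leaves the field empty now fails, which it would not have done before. The trade-off in this sketch is that a visitor with JavaScript disabled would also be treated as spam.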

Project management

Upgrading

The general upgrade procedure is to first put the site down for maintenance in admin/settings/site-maintenance. Then back up the file structure, replace it with the latest download, and go to the /update.php script. After this has run, the original sites directory can be put back. Then go to admin/build/modules to see if any modules also require updating.

Skinning

Zen is the ultimate starting theme for Drupal 6 and 7. If you are building your own standards-compliant theme, you will find it much easier to start with Zen than to start with Garland or Bluemarine. This theme has fantastic online documentation and tons of code comments for both the PHP (template.php) and HTML (page.tpl.php, node.tpl.php). The idea behind the Zen theme is to have a very flexible standards-compliant and semantically correct XHTML theme that can be highly modified through CSS and an enhanced version of Drupal’s template system. Out of the box, Zen is clean and simple with either a one, two, or three column layout of fixed or liquid width. In addition, the HTML source order has content placed before sidebars or the navbar for increased accessibility and SEO. The name is an homage to the CSS Zen Garden site where designers can redesign the page purely through the use of CSS.

Breadcrumbs

Drupal 6 and 7 both put breadcrumbs in the header, which might not be convenient. You can remove or relocate this feature by making .breadcrumb display none in CSS, or by removing or relocating this line in page.tpl.php:

Links

Farming

Drupal is already set up with the farm model in mind. In addition to the global settings, modules and themes in the codebase root, there is also a directory called sites which is used to add additional global or site-specific settings, modules and themes. The sites directory contains a directory called "all" and any number of other directories named by domain or domain segment.

Clean URLs

Drupal supports clean URLs, but to have them working within our Wikia configuration requires a slightly different configuration than that recommended in the Drupal documentation on the subject. In the global Apache virtual-host container, use the following format. This maps the domain www.example-drupal.com to the Drupal code-base in /var/www/domains/example-drupal.

Scalability[edit]In Lynda.com's Drupal Essential Training video series, Tom Geller said:

This has opened up a bit of a can of worms for us as we're involved in a project for which Drupal would have been a very appropriate starting point, but which will need to be capable of serving very high traffic. Due to this comment we'd started looking into using Plone on ZEO to scale, but upon further investigation it seems that Drupal may be OK after all, as a number of very high traffic sites such as theonion.com are Drupal based. We need to get hold of some high-traffic Drupal statistics that specifically relate to the number of edits per second, not just the number of concurrent visitors. This is because any CMS can be made to serve up any amount of static content without too much difficulty simply by adding a caching layer that diverts requests for unchanged content to an array of proxy servers. The difficulty comes when the CMS itself is required to respond to many requests per second, which occurs if users need to make changes to the content such as by posting comments.
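The difference between reads and edits can be made concrete with a small sketch. This is a toy read-through cache in Python, not a description of any particular Drupal caching module or proxy setup; `PageCache`, `render` and the `backend_hits` counter are hypothetical names used only to count how often the CMS itself has to do work.

```python
class PageCache:
    """Toy read-through cache: reads are served from memory, edits are not."""

    def __init__(self, render):
        self._render = render      # expensive call into the CMS
        self._pages = {}           # path -> cached output
        self.backend_hits = 0      # how often the CMS itself did work

    def get(self, path):
        if path not in self._pages:
            self.backend_hits += 1
            self._pages[path] = self._render(path)
        return self._pages[path]

    def edit(self, path):
        """Every edit reaches the CMS and invalidates the cached copy."""
        self.backend_hits += 1
        self._pages.pop(path, None)


cache = PageCache(render=lambda path: f"<html>{path}</html>")
for _ in range(1000):
    cache.get("/node/1")          # 1000 reads cost a single backend hit
reads_cost = cache.backend_hits

for _ in range(10):
    cache.edit("/node/1")         # each edit is a backend hit ...
    cache.get("/node/1")          # ... and forces a fresh render
total_cost = cache.backend_hits
```

In this model a thousand reads of an unchanged page cost one backend hit, while ten edits cost twenty more, which is why edits per second is the figure we care about.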

NoSQL for Drupal

Our Settings

When setting up Organic Groups, a decision must be made about whether to create duplicate node types for posts and pages etc. that are allowed to be posted into groups (it seems that the two options are mutually exclusive). Our plan for Organic Groups is for it to be our main ontology structure and so all content should be in a group. So for our purposes we can set all node types to be standard or wiki group posts. In Drupal 7 the distinction between standard and wiki group posts no longer exists.

Things that could be improved

Drupal 7

OG for Drupal 7

First set up a Drupal 7 site, using the explanations in the article http://drupal.org/documentation/install/basic :

Organic groups should now be working. If not, follow the UI's instructions to fix things; they are perfectly clear. (Note at this stage we are using nightly builds of the modules. Some urls will be provided later.) Next add a new content type called Group by navigating: Administer - Structure - Content Types. Make sure under the group tab that it is:

You can then make, say, group Posts by making them (group tab):

Git for Drupal

To get access to Drupal development and contribute or patch modules you need to:

Then follow the Git Instructions tab on the relevant Drupal project page, e.g. this example.

Migrating MediaWiki content to Drupal